The Script Evaluators in StreamSets Data Collector (SDC) allow you to manipulate data in pretty much any way you please. I’ve already written about how you can call external Java code from your scripts – compiled Java code has great performance, but sometimes the code you need isn’t available in a JAR. Today I’ll show you how to call an external JavaScript library from the JavaScript Evaluator.

JavaScript in the JVM

SDC uses the Nashorn JavaScript engine to run user-written JavaScript. Nashorn includes a set of extensions to the JavaScript syntax and APIs, including the load() function. The load() function loads and evaluates scripts from local files and URLs. Let’s look at a simple example.

Minifying HTML in SDC

Let’s imagine your pipeline reads HTML, and you want to minify it by removing whitespace, comments, etc., reducing its size while preserving its functionality. There are a variety of JavaScript libraries that can do the job – I used Juriy Zaytsev’s HTMLMinifier.
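To make “minify” concrete, here is a deliberately naive sketch of the two transformations we’ll later ask HTMLMinifier for: removing comments and collapsing whitespace. The function `naiveMinify` is hypothetical and regex-based. It is not how HTMLMinifier works, and it would mangle real-world HTML (it ignores `<pre>` and `<script>` content and drops meaningful spaces between inline elements such as `</b> <i>`), which is a good reason to load a real library instead.

```javascript
// Hypothetical, regex-based illustration of minification. NOT HTMLMinifier:
// it ignores <pre>/<script> semantics and strips spaces between inline tags.
function naiveMinify(html) {
  return html
    // Remove HTML comments (non-greedy, so each comment is matched separately).
    .replace(/<!--[\s\S]*?-->/g, '')
    // Drop whitespace right after a tag, e.g. "<p> foo" -> "<p>foo".
    .replace(/>\s+/g, '>')
    // Drop whitespace right before a tag, e.g. "foo </p>" -> "foo</p>".
    .replace(/\s+</g, '<')
    // Collapse any remaining whitespace runs inside text to a single space.
    .replace(/\s+/g, ' ')
    .trim();
}

var sample = '<!-- My awesome HTML --> <div> <p> foo </p> </div>';
console.log(naiveMinify(sample)); // <div><p>foo</p></div>
```

The sketch uses only ES5 string methods, so it would run in Nashorn as well as in a modern JavaScript engine.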

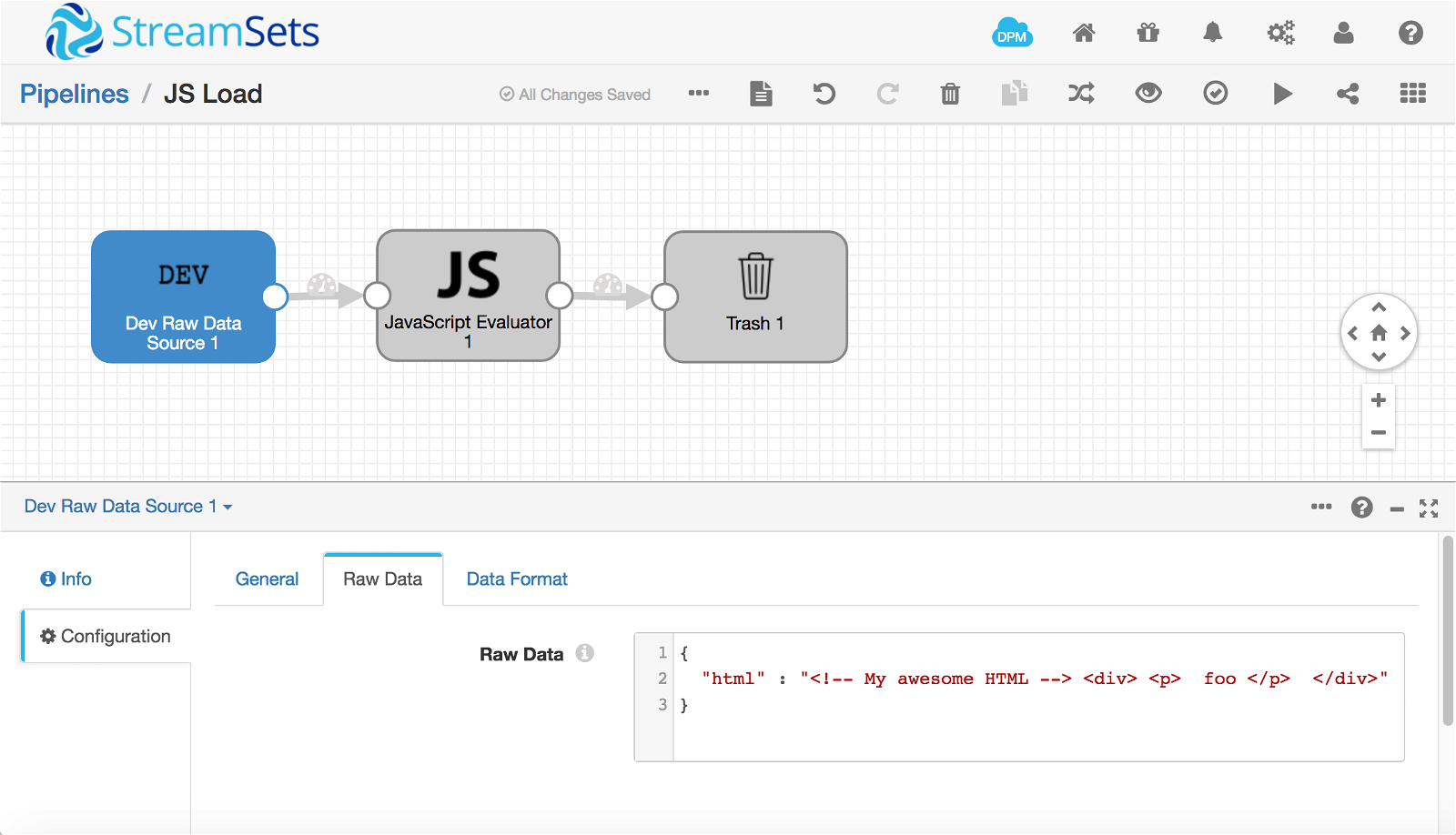

After downloading htmlminifier.js, I put it in a local directory, and created a simple test pipeline:

As you can see in the screenshot, I used the Dev Raw Data Source, configured with JSON raw data:

```json
{
  "html" : "<!-- My awesome HTML --> <div> <p> foo </p> </div>"
}
```

Since SDC 2.5.0.0, the Script Evaluators allow you to define an Init Script that runs just once, before any records are processed. This is where we load the HTMLMinifier library and save a reference to its minify() function in the state object:

```javascript
load('file:///Users/pat/Downloads/scripts/htmlminifier.js');
state.minify = require('html-minifier').minify;
```
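Because `load()` is a Nashorn extension, the Init Script above only runs inside SDC. The following is a hypothetical, engine-neutral simulation of why the Init Script matters: the expensive setup runs once, and every batch reuses the function cached on `state`. The names `loadLibrary`, `initScript` and `mainScript` are illustrative stand-ins, not SDC APIs, and the stub “library” merely collapses whitespace.

```javascript
// Hypothetical simulation of SDC's Init Script + main script split.
// loadLibrary() stands in for load('file:///...') + require('html-minifier').
var loadCount = 0;

function loadLibrary() {
  loadCount++; // count how often the "expensive" load happens
  return {
    // Stub "minify": collapses whitespace only; not HTMLMinifier.
    minify: function (html) {
      return html.replace(/\s+/g, ' ').trim();
    }
  };
}

// In SDC, 'state' is supplied by the evaluator and shared between the
// init script and every run of the main script; here it is a plain object.
var state = {};

// Runs once, before any records are processed.
function initScript() {
  state.minify = loadLibrary().minify;
}

// Runs once per batch; reuses the cached function instead of reloading.
function mainScript(batch) {
  return batch.map(function (html) {
    return state.minify(html);
  });
}

initScript();
var lastBatch;
for (var n = 0; n < 3; n++) {
  lastBatch = mainScript(['  foo   bar  ']);
}
console.log(loadCount);    // 1
console.log(lastBatch[0]); // foo bar
```

However many batches run, the load counter stays at one, which mirrors the one-time cost described later in this post.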

Now, in the main script, we can simply replace the HTML with its minified version:

```javascript
for (var i = 0; i < records.length; i++) {
  try {
    records[i].value.html = state.minify(records[i].value.html, {
      collapseWhitespace: true,
      removeComments: true
    });
    output.write(records[i]);
  } catch (e) {
    // Send record to error
    error.write(records[i], e);
  }
}
```
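If you’d like to exercise the loop’s success and error paths before wiring it into SDC, one option is a small harness that fakes the bindings the script relies on. Everything apart from the loop itself is an assumption: the mock `output` and `error` objects simply record what was written, and `state.minify` is a stub (not HTMLMinifier) that honors only the two options used above and throws on non-string input so the `catch` branch runs.

```javascript
// Hypothetical test harness: mocks of SDC's records/output/error/state bindings,
// shaped only as far as the main script uses them.
var written = [];
var errors = [];
var output = { write: function (record) { written.push(record); } };
var error = {
  write: function (record, e) { errors.push({ record: record, message: String(e) }); }
};

// Stub standing in for HTMLMinifier's minify(); throws on non-string input.
var state = {
  minify: function (html, options) {
    if (typeof html !== 'string') {
      throw new Error('html must be a string');
    }
    var out = html;
    if (options.removeComments) {
      out = out.replace(/<!--[\s\S]*?-->/g, '');
    }
    if (options.collapseWhitespace) {
      out = out.replace(/\s+/g, ' ').trim();
    }
    return out;
  }
};

var records = [
  { value: { html: '<!-- c --> <p>  hi  </p>' } },
  { value: { html: 42 } } // bad record: should be routed to error
];

// Same loop as the main script above.
for (var i = 0; i < records.length; i++) {
  try {
    records[i].value.html = state.minify(records[i].value.html, {
      collapseWhitespace: true,
      removeComments: true
    });
    output.write(records[i]);
  } catch (e) {
    error.write(records[i], e);
  }
}

console.log(written.length, errors.length); // 1 1
console.log(written[0].value.html);         // <p> hi </p>
```

Inside SDC, `records`, `output`, `error` and `state` are provided by the evaluator, so only the loop belongs in the main script.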

There’s one more thing we need to do before we can run the pipeline. By default, scripts are prevented from reading the local disk, so we need to add a security policy that allows the script file to be loaded. I added the following to $SDC_CONF/sdc-security.policy:

```
// Set global perm so that JS can load scripts from this directory
// Note - this means any code in the JVM can read this dir!
grant {
    permission java.io.FilePermission "/Users/pat/Downloads/scripts/-", "read";
};
```

Unfortunately, since the script is loaded into memory, it isn’t possible to specify a narrower permission, so be aware that any code running in SDC can read from the configured script directory.

If you build and configure the sample pipeline, you’ll probably need to increase the preview timeout, since reading, parsing and loading 1.1 MB of JavaScript can take a few seconds. A timeout of 15000 milliseconds worked well on my MacBook Pro.

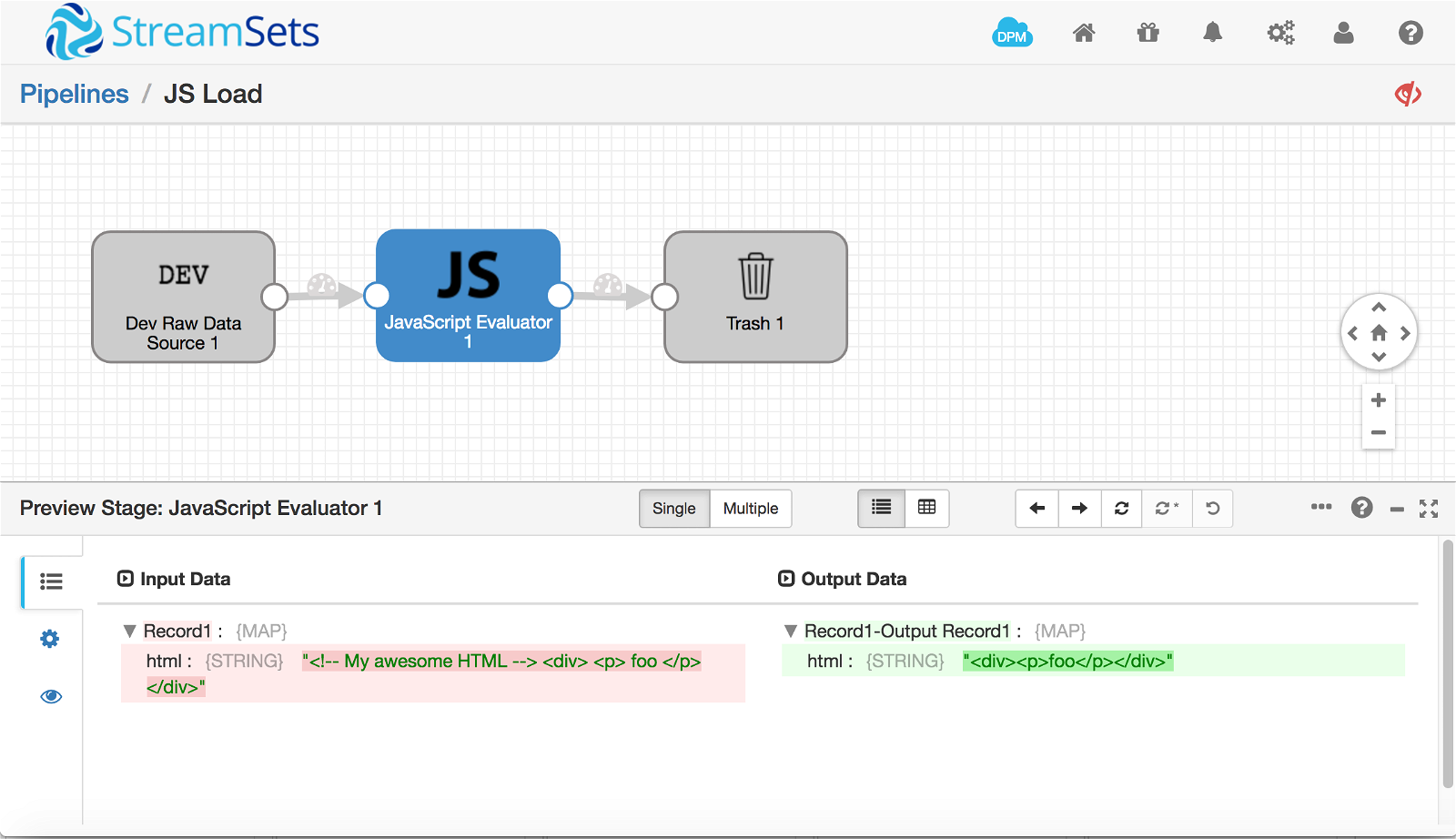

Here’s the pipeline in preview:

Success! Running the pipeline for a few minutes, again on my MacBook Pro, showed that the few seconds spent loading the JavaScript library were a one-time cost: after a couple of minutes it was processing over 500 records/second. Note that this is not a real benchmark. The test pipeline processes a single record per batch on a heavily loaded laptop, which is pretty much a worst-case scenario in terms of performance!

Conclusion

Using Nashorn’s load() function and configuring a permission to load scripts from local disk, we were able to run JavaScript code from an external library. What use cases do you solve using Script Evaluators in StreamSets Data Collector? Let us know in the comments!