Azure Data Lake Store (ADLS) is Microsoft’s cloud repository for big data analytic workloads, designed to capture data for operational and exploratory analytics. StreamSets Data Collector (SDC) version 2.3.0.0 included an Azure Data Lake Store destination, so you can create pipelines to read data from any supported data source and write it to ADLS.

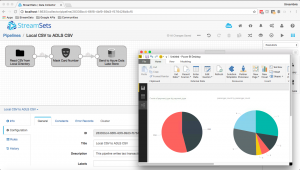

Since configuring the ADLS destination is a multi-step process, our new tutorial, Ingesting Local Data into Azure Data Lake Store, walks you through adding SDC as an application in Azure Active Directory, creating a Data Lake Store, building a simple data ingest pipeline, and then configuring the ADLS destination with credentials to write to an ADLS directory.

The sample pipeline reads CSV-formatted transaction data, masks credit card numbers, and writes JSON records to files in ADLS. Once you have mastered the basics, you’ll be able to build more complex pipelines and write a variety of data formats. If you don’t already use Azure, you can create a free Azure account, which includes $200 of free credit and 30 days of Azure services. This is more than enough to complete the tutorial; I think I’ve used $0.10 of the allowance so far!
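To make the transformation concrete, here is a minimal Python sketch of what the sample pipeline does to each record: parse CSV, mask the credit card number, and emit one JSON record per line. In SDC you configure this with pipeline stages rather than writing code, so this is only an illustration and not how Data Collector implements it. The field names (`transaction_id`, `credit_card`, `amount`), the masking rule (keep the last four digits), and the sample rows are all assumptions made up for the example.

```python
import csv
import io
import json


def mask_card(number):
    """Replace every digit except the last four with 'x', keeping separators.

    Numbers with four or fewer digits are returned unchanged.
    """
    digits_total = sum(ch.isdigit() for ch in number)
    digits_seen = 0
    out = []
    for ch in number:
        if ch.isdigit():
            digits_seen += 1
            # Keep only the final four digits readable.
            out.append(ch if digits_seen > digits_total - 4 else "x")
        else:
            out.append(ch)
    return "".join(out)


def csv_to_json_lines(csv_text, card_field="credit_card"):
    """Parse CSV text with a header row and return one JSON string per record."""
    reader = csv.DictReader(io.StringIO(csv_text))
    lines = []
    for record in reader:
        # Mask only when the (hypothetical) card field is present and non-empty.
        if record.get(card_field):
            record[card_field] = mask_card(record[card_field])
        lines.append(json.dumps(record))
    return lines


# Hypothetical sample data, not taken from the tutorial's data set.
sample = (
    "transaction_id,credit_card,amount\n"
    "1,4111-1111-1111-1111,12.50\n"
    "2,,3.00\n"
)

for line in csv_to_json_lines(sample):
    print(line)
```

All values stay strings here because `csv.DictReader` does not infer types; a real pipeline would typically convert fields such as the amount before writing.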

In this short video, I show how I combined the taxi data tutorial pipeline with the ADLS destination, then used Microsoft Power BI to visualize the data, reading it directly from ADLS (this Microsoft blog entry explains how to create the visualization).

Once you have your data in Azure Data Lake Store, you can use StreamSets Data Collector for HDInsight, which runs on Microsoft’s fully managed cloud Hadoop service. Watch for a future tutorial focusing on ingesting data from ADLS to Azure SQL Data Warehouse on HDInsight!