Retail applications have grown dramatically more complex, and to compete, enterprises must adopt new strategies for working with data. Big data and Hadoop let retailers connect with customers across multiple channels by combining traditional and real-time data sources for stream processing and analytics.

These data sources often vary in volume, velocity and veracity. For example, the volume of transactions may change with time of day or season. Some data changes infrequently (e.g. master data) and can be batched, while other data must be processed at a very high rate (e.g. order fulfillment) and is usually streamed or delivered in real time. Changes to the complex applications that run retail operations may cause unanticipated changes to the data, which can negate previous assumptions and cause failures. Here is one way to build a real-time retail analytics solution.

StreamSets Data Collector is a uniquely differentiated data movement tool that can easily ingest a wide variety of data in batch or streaming mode. It provides an elegant paradigm to gracefully handle data drift — unexpected changes to the schema or semantics of the data.

In this post, we will use StreamSets Data Collector to graphically build a set of pipelines that reflect the needs of modern retail operations using MapR Converged Data Platform and MapR Streams for Real-Time Analytics.

StreamSets is an open source, Apache-licensed system for building and managing continuous ingestion pipelines. StreamSets Data Collector provides ETL-upon-ingest capabilities and integrates a wide variety of origin data systems (such as relational databases, Amazon S3, or flat files) with destination systems in the Hadoop ecosystem, without custom code. StreamSets is easy to install from a downloadable tarball, RPM package or Docker image.

MapR Streams is the event-oriented service in the MapR Platform, enabling events to be ingested, moved, and processed as they happen. Combined with the rest of the MapR Platform, MapR Streams allows organizations to create a centralized, secure, and multi-tenant data architecture, unifying files, database tables, and message topics.

This centralized architecture provides real-time access to streaming data for batch or interactive processing on a global scale including enterprise features such as secure access-control, encryption, cross data center replication, multi-tenancy, and utility-grade uptime.

A common use case in many enterprises is replicating master data from relational databases into Hadoop. Master data records – such as information on pricing, customers, vendors, products, etc. – are typically low in volume and change infrequently.

In a retail operation, customer purchases may come from point of sale systems in physical storefronts or from online channels, either the retailer’s website (captured as click streams) or its mobile shopping app. Retailers can analyze this data to generate insights about individual consumer behaviors and preferences, and offer personalized recommendations in real time. Key to this is the ability to tailor merchandise selections and pricing to each consumer’s likes and dislikes.

Getting Started with StreamSets

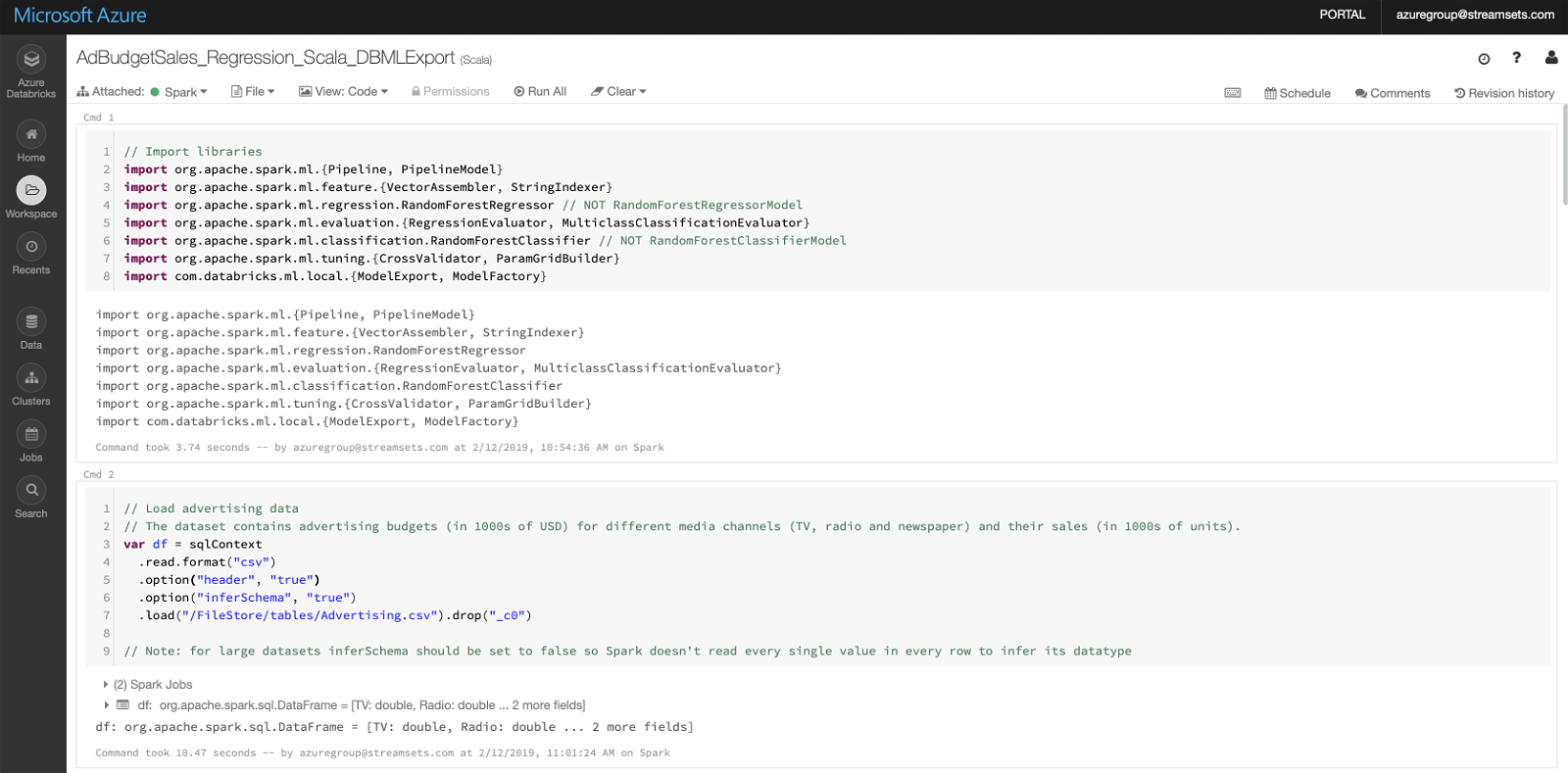

StreamSets Data Collector lets you design data flow pipelines without writing a single line of code. You can choose from a wide variety of origins to source your data, use the processor stages to transform it and write to one or more target destinations.

Upon reading data from any origin, the system automatically converts it into an internal record format that is optimized to run in-memory. Pipelines support a one-to-many architecture and guarantee ordered delivery to destinations.

A core design principle of StreamSets Data Collector is that it is schema-agnostic — the pipeline does not need to know the schema of the incoming data to function properly. This is an important differentiation from many older generation ETL tools. Such an architecture allows you to be very agile in getting up and running with new data sets and, more importantly, lets you adapt to changing data without having to recode and redefine your pipeline.

To start we’ll use the JDBC Consumer origin to connect to a relational database and bring the data into a MapR FS directory. JDBC Consumer can be set up to read data based on a query and can poll the database at a pre-configured interval.
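The polling behavior described above can be pictured as an incremental query that remembers the last offset it has seen. The following is only a minimal sketch of that idea in plain Python, with the standard-library `sqlite3` module standing in for a real JDBC source. The table `products`, the offset column `id`, and the function `poll_once` are hypothetical names chosen for illustration, not part of StreamSets.

```python
import sqlite3

def poll_once(conn, last_offset):
    """Return rows whose id is greater than last_offset, plus the new offset."""
    cur = conn.execute(
        "SELECT id, name, price FROM products WHERE id > ? ORDER BY id",
        (last_offset,),
    )
    rows = cur.fetchall()
    new_offset = rows[-1][0] if rows else last_offset
    return rows, new_offset

# Hypothetical in-memory master data table, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products (id, name, price) VALUES (?, ?, ?)",
    [(1, "kettle", 24.99), (2, "toaster", 39.50)],
)

offset = 0
first_batch, offset = poll_once(conn, offset)    # picks up both existing rows
conn.execute("INSERT INTO products (id, name, price) VALUES (3, 'blender', 59.00)")
second_batch, offset = poll_once(conn, offset)   # picks up only the new row
```

In a real deployment the loop around `poll_once` would sleep for the configured interval between calls and persist the offset so that a restart does not re-read old rows.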

MapR Streams is a publish-subscribe event streaming system that connects producers and consumers in real time. StreamSets provides an easy way to create event pipelines that produce data for and consume data from MapR Streams without writing any code.

In our example, the retail applications (point of sale, mobile or web) publish live transaction data directly to topics in MapR Streams through the Kafka API. This app-generated data is in JSON format. With Data Collector, we can use a MapR Streams Consumer stage to read this transaction data and make a few transformations before writing it to MapR FS.

The MapR Streams Consumer origin has built-in support for JSON and a number of other data formats such as XML, Avro and Protobuf. Configuring it is as simple as specifying the topic name and choosing JSON from the menu.

Our transaction data contains credit card info as well as order details. The pipeline is set up to route the data flow so that we can store credit card data in one set of directories and send the rest of the order details into another set of directories.
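To make the routing idea concrete, here is a rough sketch in plain Python of splitting one JSON transaction into a credit card record and an order record. The field names (`txn_id`, `card_number`, `card_type`, `card_expiry`, `sku`, `quantity`) are assumptions, since the actual transaction schema is not shown in this post, and in Data Collector this split is configured graphically rather than coded.

```python
import json

# Hypothetical field names; the real transaction schema is not shown in this post.
CARD_FIELDS = {"card_number", "card_type", "card_expiry"}

def route(record):
    """Split one transaction into a credit card record and an order record."""
    card = {k: v for k, v in record.items() if k in CARD_FIELDS}
    order = {k: v for k, v in record.items() if k not in CARD_FIELDS}
    # Keep the transaction id on both sides so the two can be joined later.
    card["txn_id"] = record["txn_id"]
    return card, order

message = (
    '{"txn_id": "t-1001", "card_number": "4111111111111111", '
    '"card_type": "visa", "sku": "A-17", "quantity": 2}'
)
card_record, order_record = route(json.loads(message))
```

Carrying the transaction id on both outputs is a design choice of this sketch: it keeps the two data sets joinable without storing card data next to order data.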

Typically when handling credit card numbers or other sensitive data, you will want to make sure PII does not end up in data stores that are not certified to handle sensitive or restricted data. StreamSets Data Collector has a handy Field Masker processor to let you mask sensitive data while it is in transit.
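As an illustration of what masking in transit means, the sketch below replaces all but the last four digits of a card number. It is a plain Python approximation of the concept, not the Field Masker implementation; the function name `mask_card_number` and its parameters are hypothetical.

```python
def mask_card_number(number, visible=4, mask_char="x"):
    """Replace all but the last `visible` characters with mask_char."""
    digits = str(number)
    if len(digits) <= visible:
        # Too short to show anything safely, so mask the whole value.
        return mask_char * len(digits)
    return mask_char * (len(digits) - visible) + digits[-visible:]

masked = mask_card_number("4111111111111111")
```

Masking before the record reaches any destination means the full number never lands in a store that is not certified to hold it.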

Our downstream analytics job for credit card data requires nested JSON data to be flattened. For this or any other custom transformation, we can write custom code. In this example we use a simple Python function, run in the Jython Evaluator processor, to flatten the data.
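The exact function used in the pipeline is not shown here, so the following is only one possible way to flatten nested JSON in Python. It joins nested keys with a dot and uses list positions as key segments; both conventions are assumptions, and the name `flatten` is illustrative.

```python
def flatten(value, parent_key="", sep="."):
    """Flatten nested dicts and lists into a single-level dict."""
    items = {}
    if isinstance(value, dict):
        for key, child in value.items():
            new_key = parent_key + sep + key if parent_key else key
            items.update(flatten(child, new_key, sep))
    elif isinstance(value, list):
        for index, child in enumerate(value):
            new_key = parent_key + sep + str(index) if parent_key else str(index)
            items.update(flatten(child, new_key, sep))
    else:
        # Leaf value: store it under the accumulated key path.
        items[parent_key] = value
    return items

nested = {
    "txn_id": "t-1001",
    "card": {"number": "xxxxxxxxxxxx1111", "expiry": {"month": 12, "year": 2030}},
}
flat = flatten(nested)
```

Here `flat` contains keys such as `card.number` and `card.expiry.month`, which map directly onto columns in a tabular store.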

Finally the credit card information is written to a MapR FS directory. The destination directory path can be parameterized with field values or timestamps that facilitate loading the data into partitioned Hive tables.
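A parameterized directory path of this kind might look like the sketch below, which builds a Hive-style `key=value` partition path from a field value and a timestamp. The base directory, the `card_type` field, and the `dt` partition name are assumptions made for illustration; in Data Collector the path is defined in the destination's configuration rather than in code.

```python
from datetime import datetime, timezone

def partition_path(base_dir, record, timestamp):
    """Build a Hive-style partitioned directory path for one record."""
    return "{base}/card_type={card_type}/dt={dt}".format(
        base=base_dir.rstrip("/"),
        card_type=record["card_type"],   # hypothetical partition field
        dt=timestamp.strftime("%Y-%m-%d"),
    )

path = partition_path(
    "/data/retail/cards/",               # hypothetical base directory
    {"card_type": "visa"},
    datetime(2016, 5, 17, 9, 30, tzinfo=timezone.utc),
)
```

Because each directory name encodes its partition values, a partitioned Hive table can pick up new data by registering the directory as a partition.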

The second route in our pipeline processes the order information. We will convert the weight of the ordered product to metric units and calculate an appropriate tax rate. Again we develop this custom functionality using the Jython Evaluator processor. Finally we’ll use the Field Remover processor to make sure no credit card information makes it into the directories where order data is stored.
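The order-side transformations could be sketched roughly as follows. The field names (`weight_lb`, `state`, `card_number` and so on) and the tax table are hypothetical and only illustrate the shape of the logic; real tax rules are considerably more involved.

```python
KG_PER_POUND = 0.45359237  # exact definition of the international pound

# Hypothetical tax table for illustration only; real rates vary by jurisdiction.
TAX_RATES = {"CA": 0.0725, "NY": 0.04}
DEFAULT_TAX_RATE = 0.0

# Hypothetical names of fields that must never reach the order directories.
CARD_FIELDS = ("card_number", "card_type", "card_expiry")

def enrich_order(order):
    """Return a copy with metric weight and tax rate added and card fields removed."""
    enriched = {k: v for k, v in order.items() if k not in CARD_FIELDS}
    enriched["weight_kg"] = round(order["weight_lb"] * KG_PER_POUND, 3)
    enriched["tax_rate"] = TAX_RATES.get(order.get("state"), DEFAULT_TAX_RATE)
    return enriched

order = enrich_order(
    {"txn_id": "t-1001", "weight_lb": 10.0, "state": "CA", "card_number": "4111111111111111"}
)
```

Dropping the card fields in the same step mirrors the role the Field Remover plays at the end of this route.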

Before starting the pipeline, we may want to make sure our data flow is designed correctly and any custom code we’ve developed is working well.

The preview mode in StreamSets Data Collector allows inspecting the state of the data as it moves through the pipeline. You can click on any stage within the canvas and see the input and output of the stage, examining the transformation that occurs.

You can also make in situ edits to the input data. It is a useful technique to inject incorrect data into the pipeline in order to observe the downstream impact without actually sending the data to the destination. For example, you can edit the credit card number to have 19 digits instead of the more typical 15 or 16 and see if your pipeline correctly handles this condition.
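Conceptually, a preview run pushes a sample record through each stage and records what went in and what came out, without writing anything to a destination. The sketch below imitates that idea in plain Python with a hand-edited 19-digit card number; the `preview` function and both stage functions are hypothetical and unrelated to how Data Collector implements preview internally.

```python
def preview(record, stages):
    """Run a record through each stage, capturing input and output; write nothing."""
    snapshots = []
    current = record
    for stage in stages:
        result = stage(dict(current))  # pass a copy so the captured input stays intact
        snapshots.append({"stage": stage.__name__, "input": current, "output": result})
        current = result
    return snapshots

def check_card_length(record):
    # Hypothetical stage: flag card numbers outside the 13 to 19 digit range.
    number = record["card_number"]
    record["card_length_ok"] = number.isdigit() and 13 <= len(number) <= 19
    return record

def mask_card(record):
    # Hypothetical stage: keep only the last four digits visible.
    number = record["card_number"]
    record["card_number"] = "x" * (len(number) - 4) + number[-4:]
    return record

edited = {"txn_id": "t-1001", "card_number": "4" * 19}  # hand-edited 19-digit value
snapshots = preview(edited, [check_card_length, mask_card])
```

Inspecting `snapshots` shows, stage by stage, whether the unusual input was handled as intended before any real data is at risk.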

This visual debugging feature is very useful to make sure incorrect data does not make it into the datastore, hence avoiding costly cleanup jobs.

Another important feature is the ability to monitor and alert on changes to the state of data. For example, if the number of bad records entering the pipeline exceeds a certain threshold, you can choose to be notified by email so you can look into the problem.
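The logic behind such a threshold alert is simple enough to sketch. The class below counts bad records and calls a notification hook once when the count passes the threshold; `BadRecordMonitor` and its `notify` callback are hypothetical names, and a real setup would send an email rather than append to a list.

```python
class BadRecordMonitor:
    """Count bad records and notify once when the count exceeds a threshold."""

    def __init__(self, threshold, notify):
        self.threshold = threshold
        self.notify = notify      # any callable that accepts a message string
        self.bad_count = 0
        self.alerted = False

    def record_bad(self):
        self.bad_count += 1
        if self.bad_count > self.threshold and not self.alerted:
            self.alerted = True   # avoid sending a new alert for every later record
            self.notify("bad records: %d (threshold %d)" % (self.bad_count, self.threshold))

sent = []                          # stand-in for an email sender
monitor = BadRecordMonitor(threshold=3, notify=sent.append)
for _ in range(5):
    monitor.record_bad()
```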

Data Collector allows you to configure metric rules for monitoring pipeline and stage level statistics, data rules to monitor data statistics and data drift rules to monitor changes in the schema.
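A data drift rule that watches for schema changes can be approximated by comparing the fields a record actually carries with the fields the pipeline expects. This is a minimal sketch of that comparison only; `detect_drift` and the field names are hypothetical, and Data Collector's own drift rules are configured in the UI.

```python
def detect_drift(expected_fields, record):
    """Compare a record's top-level field names with the expected set."""
    expected = set(expected_fields)
    actual = set(record)
    return {
        "added": sorted(actual - expected),     # fields that appeared unexpectedly
        "missing": sorted(expected - actual),   # fields that disappeared
    }

drift = detect_drift(
    {"txn_id", "sku", "quantity"},
    {"txn_id": "t-1", "sku": "A-17", "qty": 2},  # upstream renamed quantity to qty
)
```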

StreamSets Data Collector also allows you to gracefully handle errors when they happen. You can specify a pipeline level configuration to route error records to a disk file, a secondary pipeline or to another MapR Streams topic.

Routing errors to a secondary location lets you handle error records without needing to stop your primary pipeline and impact downstream analytics jobs.
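The pattern of routing error records aside while the main flow continues can be sketched as follows. The functions `run_stage` and `to_cents` and the record fields are hypothetical; the error list stands in for a disk file, a secondary pipeline, or another topic.

```python
def run_stage(records, transform):
    """Apply transform to each record; collect failures instead of stopping."""
    good, errors = [], []
    for record in records:
        try:
            good.append(transform(record))
        except Exception as exc:  # broad on purpose: any failure becomes an error record
            errors.append({"record": record, "error": str(exc)})
    return good, errors

def to_cents(record):
    # Hypothetical transformation that fails on non-numeric amounts.
    amount_cents = int(round(float(record["amount"]) * 100))
    return {"txn_id": record["txn_id"], "amount_cents": amount_cents}

good, errors = run_stage(
    [{"txn_id": "t-1", "amount": "19.99"}, {"txn_id": "t-2", "amount": "n/a"}],
    to_cents,
)
```

The good record still reaches its destination, while the failed one is kept together with the reason for the failure so it can be repaired and replayed later.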

After you’ve created and debugged your pipelines, hit Start. The pipeline starts up and the StreamSets Data Collector UI switches into execution mode. At this point data starts flowing to the respective MapR FS directories.

Execution mode provides fine-grained metrics about the data flow that can be viewed in the UI or be sent to any JMX-aware tool.

These pipelines are designed to run continuously: as data is generated at the origin stages, it is automatically processed and sent to destinations.

StreamSets Data Collector provides the infrastructure to implement and operate a set of streaming data pipelines that feed data reliably and in real time to batch or interactive processing systems such as the MapR Converged Data Platform.

Summary: Real-time Retail Analytics

Today big data systems can store and process an incredible amount of data, but ingesting data into them can be a technical and resource-intensive challenge. Retail operations deal with a dizzying set of data that varies frequently and requires constant upkeep. StreamSets Data Collector allows organizations to quickly build and reliably operate data ingest pipelines that can gracefully adapt to change.