June 2018 marked the fourth anniversary of StreamSets’ founding; here’s a look back at the past four years of StreamSets and the Data Collector product, from the early days in stealth-startup mode, to the recent release of StreamSets Data Collector 3.4.0.

Girish Pancha and Arvind Prabhakar founded StreamSets on June 27th, 2014. Girish had been at Informatica for many years, rising to the level of Chief Product Officer; Arvind had held technical roles at both Informatica and Cloudera. Between them, they realized that the needs of enterprises were not being met by existing data integration products. For instance, the best practice for many customers ingesting data into Hadoop was laborious manual coding of data processing logic and orchestration using low-level frameworks like Apache Sqoop. They also realized that the same would be true for other proliferating data platforms such as Kafka (Kafka Connect), Elastic (Logstash) and cloud infrastructure (AWS Glue, etc.). The main obstacle they identified was ‘data drift’: the inescapable evolution of data structure, semantics and infrastructure, which makes data integration difficult and solutions brittle. Data drift exists in the traditional data integration world, but it grows and accelerates when dealing with modern data sources and platforms. The founding vision for StreamSets was to “solve the data drift problem for all modern data architectures”.

Girish and Arvind spent over a year talking to dozens of enterprises across the world to validate the problem, find product-market fit, and define the feature set for what would become StreamSets Data Collector. The initial version, 1.0.0, was released to a subset of these enterprises while StreamSets was still in stealth mode.

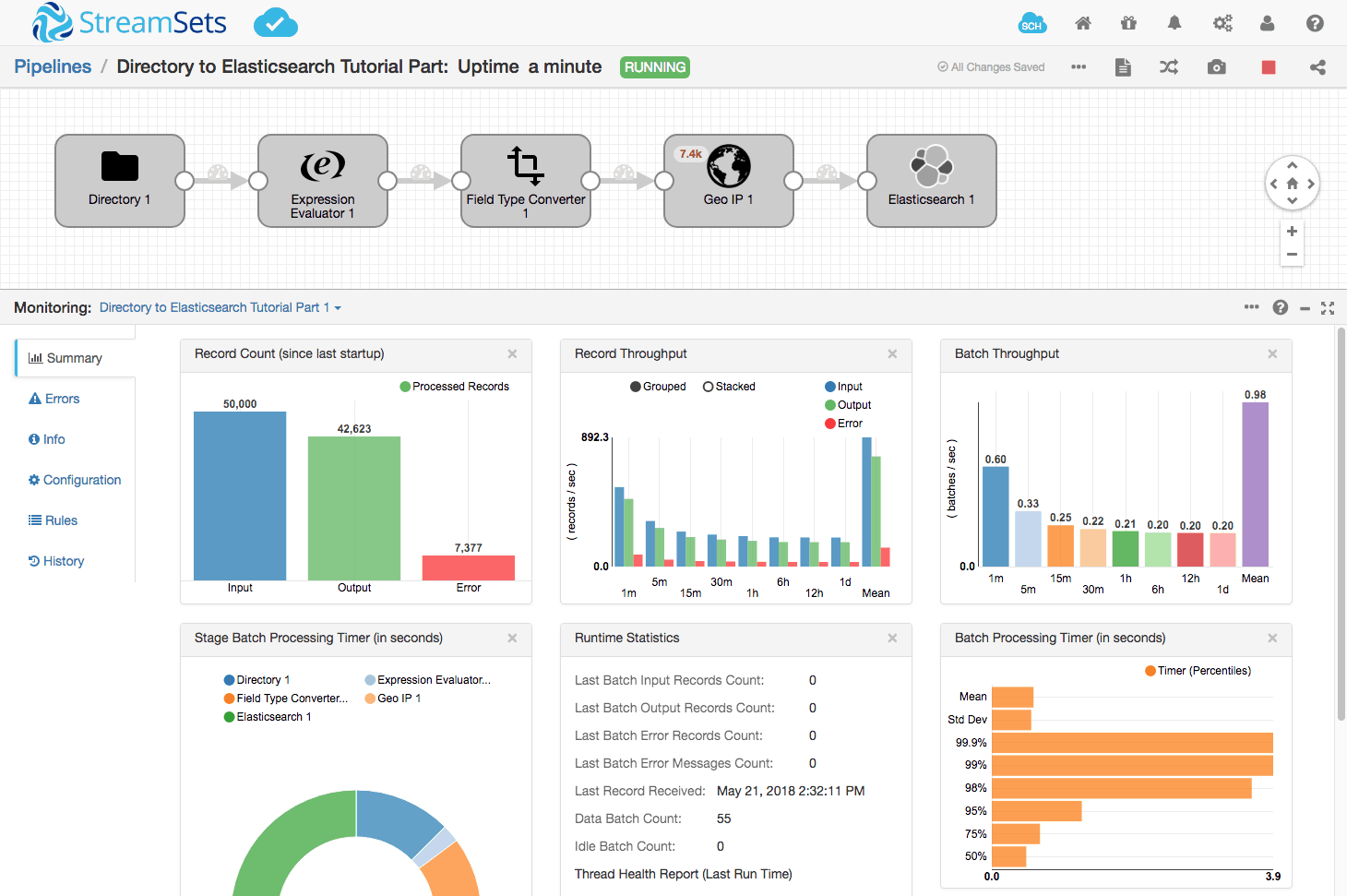

That first version of Data Collector defined the product’s architecture, processing continuous streams of data in micro-batches, and introduced Data Collector’s signature user interface, which let users easily create, run and monitor data pipelines by dragging and dropping pipeline elements, or stages. Under the covers, the implementation used Dataflow Sensors, which lie at the heart of the solution to data drift. These sensors provided continuous monitoring and control over every aspect of a dataflow while it ran in production.
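To make the micro-batch model concrete, here is a minimal Python sketch of the general technique: a continuous stream is cut into bounded batches, and each batch passes through an ordered list of stages before reaching a destination. It illustrates the concept only and is not Data Collector’s implementation (which is written in Java); `micro_batches`, `run_pipeline` and the lambda stages are hypothetical names, and dataflow sensors, error handling and offset tracking are all omitted.

```python
from itertools import islice
from typing import Any, Callable, Iterable, Iterator, List, Sequence

Record = Any
Batch = List[Record]
Stage = Callable[[Batch], Batch]  # hypothetical: a stage maps a batch to a new batch


def micro_batches(records: Iterable[Record], batch_size: int) -> Iterator[Batch]:
    """Split a (possibly unbounded) record stream into batches of at most batch_size."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    it = iter(records)
    while True:
        batch = list(islice(it, batch_size))
        if not batch:  # source exhausted
            return
        yield batch


def run_pipeline(source: Iterable[Record], stages: Sequence[Stage],
                 destination: Callable[[Batch], None], batch_size: int) -> None:
    """Push each micro-batch through every stage in order, then hand it to the destination."""
    for batch in micro_batches(source, batch_size):
        for stage in stages:
            batch = stage(batch)
        destination(batch)


# Hypothetical stages: drop negative values, then scale what remains.
received: List[Batch] = []
run_pipeline(
    source=[3, -1, 4, -1, 5],
    stages=[lambda b: [x for x in b if x >= 0], lambda b: [x * 10 for x in b]],
    destination=received.append,
    batch_size=2,
)
```

Here `received` ends up as `[[30], [40], [50]]`: each batch of two input records is filtered and scaled independently. Working batch by batch bounds the memory a pipeline needs, even when the source never ends.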

StreamSets Data Collector 1.0.0 shipped with an impressive array of connectors, including origins reading data from Amazon Kinesis, Apache Kafka, Directory, File Tail, HTTP, JDBC and MongoDB, and destinations targeting Amazon Kinesis, Apache Cassandra, Apache HBase, Apache Kafka, Elasticsearch, Local FS and JDBC.

In addition, multiple versions of both the Cloudera and Hortonworks Hadoop distributions were supported, allowing users to read and write data to the Hadoop distributed file system. StreamSets’ innovative architecture allowed data pipelines not only to target multiple versions of platforms such as Kafka and Hadoop, but to do so within the same pipeline. Users could therefore build pipelines that migrate data from one version to another, or even write to two versions of a platform simultaneously.

Aside from connectivity, the initial release of Data Collector also allowed users to apply many transformations to data as it flowed through the pipeline. That initial set of processors included Expression Evaluator, Field Remover, Field Masker, Geo IP (IP address to geographic data), Record Deduplicator and Stream Selector.

Recognizing that not every use case can be solved with off-the-shelf components, version 1.0.0 included JavaScript and Jython (Python on the JVM) script evaluators, allowing users to implement custom logic without needing to go all the way to writing a custom processor in Java.

Even in that initial release, dataflow pipelines could run standalone or on a cluster, using YARN and MapReduce to read data from Hadoop FS, and Spark Streaming to read data in parallel from multiple Kafka partitions.

Finally, demonstrating StreamSets’ commitment to DataOps from its very beginnings, a RESTful API allowed data pipeline operations to be automated.
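As an illustration of that kind of automation, the Python sketch below builds the HTTP request a script might send to start a pipeline. It is a hedged sketch, not a reference client. It assumes the `/rest/v1/pipeline/<pipelineId>/start` and `/stop` endpoints, HTTP basic authentication, Data Collector listening on port 18630, and the `X-Requested-By` header that Data Collector expects on modifying requests. The helper name `build_pipeline_request`, the pipeline ID `demo` and the `admin`/`admin` credentials are hypothetical. Check the REST API documentation for your Data Collector version before relying on any of these details.

```python
import base64
import urllib.parse
import urllib.request


def build_pipeline_request(base_url: str, pipeline_id: str, action: str,
                           user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a POST request that starts or stops a pipeline.

    Assumes the /rest/v1/pipeline/<id>/<action> endpoint and HTTP basic auth.
    """
    if action not in ("start", "stop"):
        raise ValueError(f"unsupported action: {action!r}")
    safe_id = urllib.parse.quote(pipeline_id, safe="")  # escape unusual characters in the ID
    url = f"{base_url.rstrip('/')}/rest/v1/pipeline/{safe_id}/{action}"
    credentials = base64.b64encode(f"{user}:{password}".encode()).decode("ascii")
    return urllib.request.Request(
        url,
        method="POST",
        headers={
            "Authorization": f"Basic {credentials}",
            # Assumed: Data Collector rejects modifying requests that lack this header.
            "X-Requested-By": "sdc",
        },
    )


# Hypothetical pipeline ID and credentials, against a local instance on the assumed default port.
req = build_pipeline_request("http://localhost:18630", "demo", "start", "admin", "admin")
# To actually send it: urllib.request.urlopen(req)
```

The request is built separately from sending it so that a script, scheduler or CI job can inspect or log it before calling `urlopen`. A stop request differs only in the `action` argument.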

The big reveal came in September 2015, with a blog post, “Start with Why: Data Drift”, and StreamSets Data Collector 1.1.0, released as open source under the Apache 2.0 license.

This first public version tidied up the 1.0.0 code, adding the necessary license headers and acknowledgements, a command-line interface, and more pipeline stages, including an Amazon S3 origin, Field Merger, Field Renamer, Apache Hive Streaming destination and Java Message Service (JMS) origin.

Data Collector 1.1.0 was quickly picked up by data engineers, data scientists and application developers looking to solve their data ingest problems, and there was a burst of activity on the sdc-user Google Group as users got to work building data pipelines.

The rapidly growing StreamSets product team released new versions of Data Collector every few weeks – far too many to list here in detail. Here are just some of the highlights…

- 1.3.0.0 (April 2016) – the Groovy script evaluator gave more flexibility in implementing custom logic, new stages provided connectivity to Apache Kudu and InfluxDB, and the release added support for the MapR Converged Data Platform.

- 1.5.0.0 (June 2016) – support for HashiCorp Vault for secure management of credentials.

- 2.0.0.0 (September 2016) – integration with the newly released StreamSets Dataflow Performance Manager (DPM) and the StreamSets Cloud, and an all-new Oracle CDC origin.

- 2.2.0.0 (December 2016) – connectivity to Azure Data Lake Store, Google Bigtable, Salesforce (via both an origin and a destination), and a new MySQL binlog origin. This release also saw the debut of Dataflow Triggers, allowing pipeline events, such as closing an output file, to trigger actions, such as starting a MapReduce job.

- 2.3.0.0 (February 2017) – the HTTP Server and JDBC Multitable Consumer origins were the first origins to allow multithreaded pipelines.

- 2.7.0.0 (August 2017) – a bumper crop of pipeline stages, including Google BigQuery, Google Pub/Sub (origin and destination), OPC UA, SQL Server CDC and SQL Server Change Tracking.

- 3.0.0.0 (November 2017) – support for OpenJDK 8 in addition to the Oracle JDK, the initial release of StreamSets Data Collector Edge, and many more connectors, including Amazon SQS, Google Cloud Storage (origin and destination), WebSockets and Kinetica.

- 3.2.0.0 (April 2018) – new origins for Hadoop FS and MapR-FS allowing standalone pipelines running outside the cluster to read data from these distributed file systems.

- 3.3.0 (May 2018) – marked a return to three-part version numbers, and included support for SSL/TLS and Kerberos authentication in cluster streaming pipelines reading from Apache Kafka.

The past four years have seen over 2 million downloads of Data Collector and its components, and over 400,000 pulls of the Docker images. Because Data Collector is open source, it is difficult to ascertain exactly who has downloaded it, but we have been able to identify over 2,000 enterprises across all industries and government, including over ⅓ of the Fortune 500 and ⅔ of the Fortune 100.

Finally, bringing the story right up to date, StreamSets Data Collector 3.4.0, released in July 2018, includes several major new features:

- Amazon Elastic MapReduce (EMR) – run pipelines on Amazon’s managed Hadoop platform.

- CDH 6.0 – support for the new major version of Cloudera’s Hadoop distribution.

- Whole File Transformer processor – efficient whole-file conversions, such as Avro to Parquet.

- Microservice pipelines – you can now build your own RESTful web services with Data Collector.

Over the past four years, StreamSets Data Collector has evolved from a data ingest tool to the workhorse of the StreamSets DataOps Platform, joined by DPM, Control Hub, Data Collector Edge and, most recently, Data Protector. The StreamSets community has grown in parallel from a handful of users to many hundreds of data engineers, data scientists and developers across the sdc-user Google Group, the StreamSets Slack community channel, and the Ask StreamSets Q&A site.

Many thanks to our customers and community for their support over these past four years. If you’re among our users, join us in toasting this milestone; if not, take a look at Data Collector, and see what all the fuss is about!