In theory, data lakes and data warehouses serve distinct roles in a modern data architecture. Your data platform facilitates seamless data staging and storage between sources and destinations. And your infrastructure responds and grows with evolving use cases, business priorities, and technologies.

But in the real world, schema, semantics, and infrastructure change constantly. Keeping up with planned changes is hard enough, but it’s nearly impossible to respond to unplanned changes that often go undetected.

Clearly, data engineers need all the tools they can get to build and operate data pipelines in a constantly changing environment of both data lakes and data warehouses.

This is why the question really isn’t, and never has been, data lake or data warehouse. The question is: how do we use both data lakes and data warehouses in a modern data architecture so that as much data as possible is ready (and stays ready) to answer any question analysts may want to ask?

Platforms like StreamSets make all that possible, but before we dive into how, let’s review what data warehouses and data lakes are, and how they work together.

What Is a Data Warehouse?

A data warehouse is a repository for relational data from transactional systems, operational databases, and line of business applications, to be used for reporting and data analysis. It’s often a key component of an organization’s business intelligence practice, storing highly curated data that’s readily available for use by data developers, data analysts, and business analysts.

A Brief History of Data Warehouses

Data warehouses came about to address the high costs of extracting and analyzing data from an organization’s operational systems. Before data warehouses, data was extracted and stored in various places to serve independent business units.

Because these business units all operated independently, this created expensive redundancy in data extraction, processing, storage, and management.

By providing structure and a central repository, data warehouses enabled businesses to integrate data more effectively. Until the early 2000s, this model was mostly fine.

But then things got even more complicated. Data volume, velocity, and variety exploded. And data warehouses were not well equipped to make use of this massive amount of unstructured and semi-structured data.

Enter the data lake.

What Is a Data Lake?

A data lake is a storage platform for semi-structured, structured, unstructured, and binary data, at any scale, with the specific purpose of supporting the execution of analytics workloads. Data is loaded and stored in “raw” format in a data lake, with no indexing or prepping required. This allows the flexibility to perform many types of analytics—exploratory data science, big data processing, machine learning, and real-time analytics—from the most comprehensive dataset, in one central repository.

Data Lake as Complement to Data Warehouse

Far from replacing data warehouses, data lakes enhance their utility.

Data lakes allow organizations to stage swathes of unstructured, semi-structured and structured data from multiple sources that they can then route to multiple purpose-built data warehouses.

This makes it possible, among other things, to more easily and cost-efficiently prepare data for transformation and explore possibilities for still-to-be-discovered use cases.
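To make the staging-then-routing idea concrete, here is a minimal sketch. It is an illustration only: a temporary directory stands in for the data lake, an in-memory SQLite database stands in for a purpose-built warehouse, and the names `stage_raw`, `route_orders`, and `demo`, as well as the sample records, are hypothetical. Raw records land untouched, and structure is applied only when they are routed onward.

```python
from __future__ import annotations

import json
import sqlite3
import tempfile
from pathlib import Path


def stage_raw(lake_dir: Path, source: str, records: list[dict]) -> Path:
    """Land records in the 'lake' untouched, one JSON object per line."""
    path = lake_dir / f"{source}.jsonl"
    with path.open("w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")
    return path


def route_orders(lake_file: Path, warehouse: sqlite3.Connection) -> int:
    """Read raw lines, keep only order records, and load them into a curated table."""
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS orders ("
        "order_id INTEGER PRIMARY KEY, amount REAL NOT NULL)"
    )
    loaded = 0
    with lake_file.open(encoding="utf-8") as f:
        for line in f:
            record = json.loads(line)
            # Structure is imposed here, at read time, not when the data landed.
            if "order_id" in record and "amount" in record:
                warehouse.execute(
                    "INSERT INTO orders (order_id, amount) VALUES (?, ?)",
                    (int(record["order_id"]), float(record["amount"])),
                )
                loaded += 1
    warehouse.commit()
    return loaded


def demo() -> tuple[int, int]:
    """Stage three mixed records, then route the orders to the warehouse."""
    raw = [
        {"order_id": 1, "amount": "19.99", "note": "gift"},
        {"event": "page_view", "url": "/pricing"},  # not an order; stays in the lake only
        {"order_id": 2, "amount": 5},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        lake_file = stage_raw(Path(tmp), "web_app", raw)
        staged = len(lake_file.read_text(encoding="utf-8").splitlines())
        warehouse = sqlite3.connect(":memory:")
        loaded = route_orders(lake_file, warehouse)
        warehouse.close()
    return staged, loaded
```

In this sketch, the page-view event stays available in the lake for some future use case even though the orders table has no place for it.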

But as rosy as this all sounds in theory, implementing and managing so many different sources and destinations doesn’t play out so easily in the real world.

Migration to Cloud Data Platforms

The development of cheap cloud storage and high performance processing has given rise to new data solution concepts such as the data cloud and the data lakehouse. These innovations are blending features and functionality of data warehouses with those of data lakes.

The rise of data clouds and lakehouses underpins a larger movement from siloed, on-premises workloads to cloud data platforms. Yet simply lifting and shifting workloads from on-premises to cloud data platforms is not enough.

To keep up with change and the sheer magnitude of data, companies need to leverage smart data pipelines. By abstracting away the “how” of implementation, smart data pipelines make it easy to connect to any database, data warehouse, or data lake service, and provide quick value by delivering useful, reliable, and current data.

Shifting the Focus: From the How to the What of Data Integration

Ultimately, data engineering is an exercise in getting to value-producing data products as quickly and cost-effectively as possible.

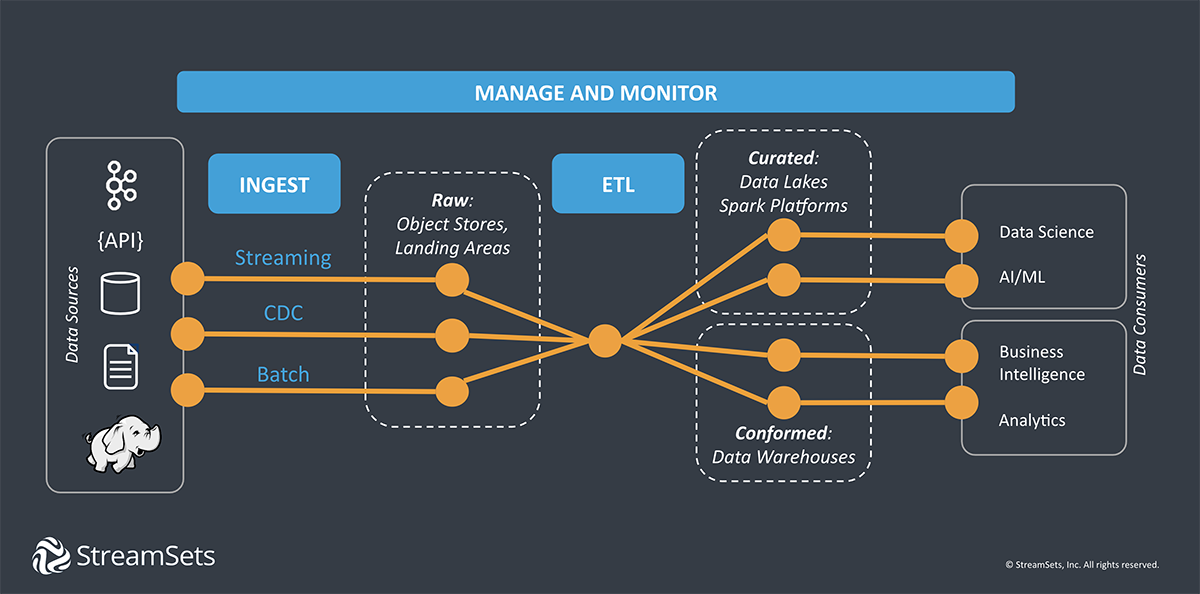

Doing so requires making streaming and batch data available across hybrid and multi-cloud platforms. So the requisite tools (e.g., data lakes, data warehouses) and integration patterns (e.g., ETL, ELT, streaming, CDC, batch) depend on the platform and use cases.
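As a rough illustration of how two of these patterns differ, the sketch below runs the same cleanup as ETL (transform in the pipeline, then load) and as ELT (load raw, then transform inside the destination with SQL). It assumes an in-memory SQLite database as a stand-in destination; the table names, sample rows, and function names are hypothetical and not tied to any particular platform.

```python
import sqlite3

# Hypothetical raw extract: messy customer names and amounts stored as text.
RAW_ROWS = [("  Alice ", "120.50"), ("BOB", "80"), ("  alice", "30")]


def run_etl(conn: sqlite3.Connection) -> None:
    """ETL: transform in the pipeline, then load only the finished rows."""
    conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
    cleaned = [(name.strip().lower(), float(amount)) for name, amount in RAW_ROWS]
    conn.executemany("INSERT INTO sales_etl VALUES (?, ?)", cleaned)


def run_elt(conn: sqlite3.Connection) -> None:
    """ELT: load raw rows as-is, then transform inside the destination with SQL."""
    conn.execute("CREATE TABLE sales_raw (customer TEXT, amount TEXT)")
    conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", RAW_ROWS)
    conn.execute(
        "CREATE TABLE sales_elt AS "
        "SELECT lower(trim(customer)) AS customer, CAST(amount AS REAL) AS amount "
        "FROM sales_raw"
    )


def totals(conn: sqlite3.Connection, table: str) -> list:
    """Sum amounts per customer; `table` is a fixed internal name, not user input."""
    return conn.execute(
        f"SELECT customer, SUM(amount) FROM {table} GROUP BY customer ORDER BY customer"
    ).fetchall()


def compare() -> tuple:
    """Run both patterns against the same destination and return their totals."""
    conn = sqlite3.connect(":memory:")
    run_etl(conn)
    run_elt(conn)
    result = (totals(conn, "sales_etl"), totals(conn, "sales_elt"))
    conn.close()
    return result
```

Both paths arrive at the same per-customer totals. The difference is where the work happens and whether the raw rows remain available in the destination afterward, which is the kind of trade-off that depends on platform and use case.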

As one user explains in a Reddit thread, “You’re essentially designing around data sources, ingestion methods, storage, processing, consumption, and platform management.”

StreamSets was built from the ground up to address this monster of a design problem by providing:

- A wide breadth of connectivity and data platform support

- Built-in understanding of data sources, destinations, and underlying processing platforms

- Powerful extensibility for sophisticated data engineering

- Templates and reusable components to help empower ETL developers

- On-premises and cloud deployment options

And by providing a single, cloud-based interface through which you can see and manage your entire infrastructure, StreamSets DataOps Platform unlocks the power of modern data architectures.

With the right data integration platform, you can keep up with the latest innovations and land your data wherever it needs to be. The question of data warehouse vs. data lake becomes moot.