I run StreamSets Data Collector on my MacBook Pro. In fact, I have about a dozen different versions installed – the latest, greatest 2.5.0.0, older versions, release candidates, and, of course, a development ‘master’ build that I hack on. Preparing for tonight’s St Louis Hadoop User Group Meetup, I downloaded Cloudera’s CDH 5.10 Quickstart VM so I could show our classic ‘Taxi Data Tutorial‘ and Drift Synchronization with Hadoop FS and Apache Hive. Spinning up the tutorial pipeline, I was surprised to see an error:

I run StreamSets Data Collector on my MacBook Pro. In fact, I have about a dozen different versions installed – the latest, greatest 2.5.0.0, older versions, release candidates, and, of course, a development ‘master’ build that I hack on. Preparing for tonight’s St Louis Hadoop User Group Meetup, I downloaded Cloudera’s CDH 5.10 Quickstart VM so I could show our classic ‘Taxi Data Tutorial‘ and Drift Synchronization with Hadoop FS and Apache Hive. Spinning up the tutorial pipeline, I was surprised to see an error: HADOOPFS_13 - Error while writing to HDFS: com.streamsets.pipeline.api.StageException: HADOOPFS_58 - Flush failed on file: '/sdc/taxi/_tmp_sdc-847321ce-0acb-4574-8d2c-ff63529f25b8_0' due to 'org.apache.hadoop.ipc.RemoteException(java.io.IOException): File /sdc/taxi/_tmp_sdc-847321ce-0acb-4574-8d2c-ff63529f25b8_0 could only be replicated to 0 nodes instead of minReplication (=1). There are 1 datanode(s) running and 1 node(s) are excluded in this operation. I’ll explain what this means, and how to resolve it, in this blog post.

So what’s going on? The clue is that There are 1 datanode(s) running and 1 node(s) are excluded in this operation. This is a single node CDH, so we would expect only one node to be running, but why is it excluded? By default, the CDH Quickstart VM runs in NAT mode – it has its own private IP address, in my case 10.0.2.15, with port forwarding configured so that Hadoop FS, Hive etc are available from the host machine. I have quickstart.cloudera set to 127.0.0.1 (localhost) in my /etc/hosts so I can use that hostname to access services in the VM. What’s happening is that SDC’s request to the Hadoop name node is forwarded correctly, and the name node returns the data node’s location. By default, the Hadoop client library tries to connect to the name node’s IP address, 10.0.2.15, on port 50075, but this fails, since that address is inside the VM NAT, and not accessible from the host.

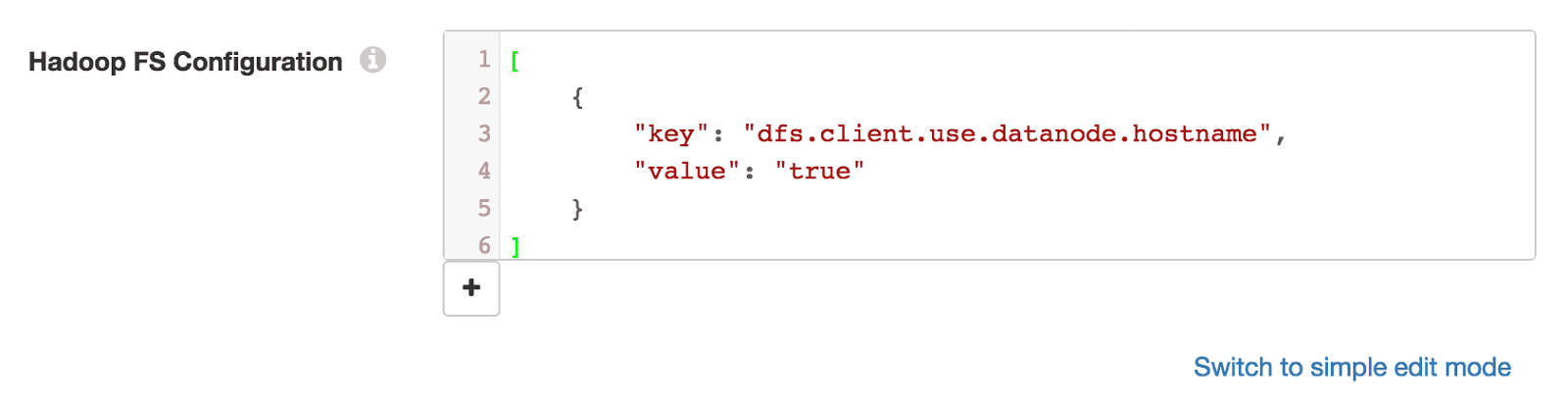

How do we resolve the problem? One way would be to reconfigure the VM to use bridged networking, so the VM’s IP address is directly accessible from the host, but I chose a quicker, easier fix. Setting dfs.client.use.datanode.hostname to true in the Hadoop FS destination configuration tells the Hadoop client to connect using the hostname, quickstart.cloudera, rather than the IP address.

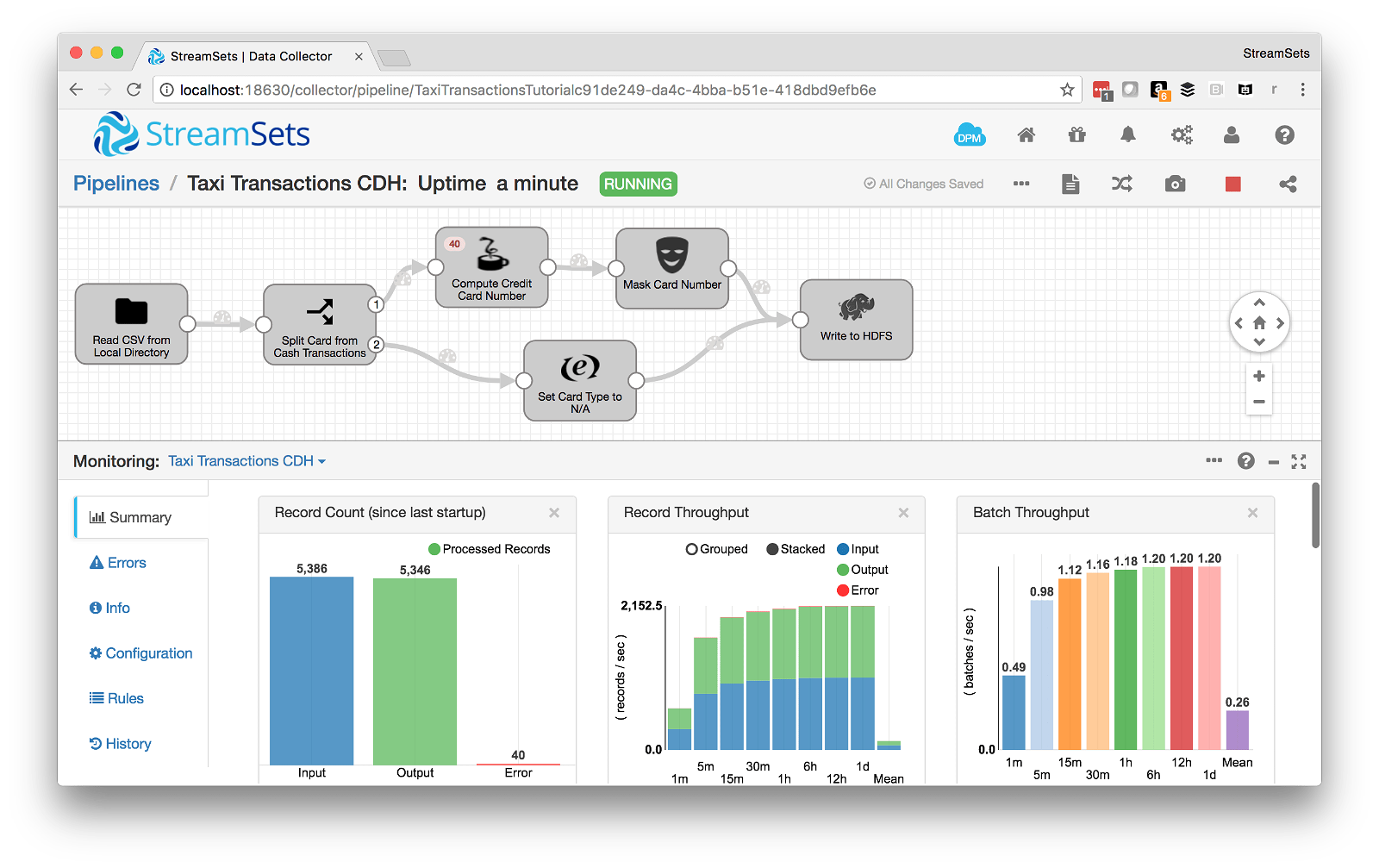

Now requests to the name node are forwarded to the VM, and my pipeline runs:

What’s your favorite SDC tip? Let us know in the comments!