Wrong data is a data quality issue. The data is incomplete, incorrect, or full of duplicates. But what happens when it’s the right data in the wrong column? Or the right data in an unrecognized format? That’s called data drift. And it happens all the time.

This isn’t to say data drift is a more significant issue than data quality. But data drift is often misdiagnosed as a data quality issue… which is a problematic disconnect in the world of data engineering.

With that disconnect in mind, the sections below illustrate the differences between data drift and data quality. Before we do that, though, it’s important to clarify what we’re talking about when we talk about data drift.

Data Drift and Concept Drift in Machine Learning

In machine learning, data drift is defined as the difference between the distribution of training data and production data. While this type of data drift is a critical concept in machine learning applications, it’s not our focus (for now).

In machine learning, data drift refers only to changes in input data, whereas our more general definition covers data drift caused by changes in data sources or destinations.
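To make the machine learning definition a little more concrete, here is a minimal sketch of one common way to compare a training distribution with a production distribution: the Population Stability Index (PSI). The function name, the bin count, and the sample data are assumptions made for illustration, and PSI is only one of several possible drift measures.

```python
import math
from typing import List, Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Compare two numeric samples using equal-width bins built from `expected`."""
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    lo, hi = min(expected), max(expected)
    # Fall back to a width of 1.0 if every expected value is identical.
    width = (hi - lo) / bins or 1.0

    def bucket_shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the expected range land in the first or last bin.
            idx = min(max(idx, 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e = bucket_shares(expected)
    a = bucket_shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Hypothetical samples: production values have shifted upward by 50.
training = [float(x) for x in range(100)]
production = [x + 50.0 for x in training]

same_psi = population_stability_index(training, training)
shifted_psi = population_stability_index(training, production)
```

A PSI of zero means the binned distributions match. A frequently cited rule of thumb treats values above roughly 0.25 as a significant shift, but the right threshold depends on the application.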

Another term from machine learning that’s often conflated with data drift is concept drift. Elena Samuylova, co-founder and CEO of Evidently AI, explains it best:

Concept drift occurs when the patterns the model learned no longer hold.

In contrast to the data drift, the distributions (such as user demographics, frequency of words, etc.) might even remain the same. Instead, the relationships between the model inputs and outputs change.

In essence, the very meaning of what we are trying to predict evolves. Depending on the scale, this will make the model less accurate or even obsolete.

What Is Data Drift Outside of ML?

Outside of machine learning, data drift is simply unexpected and undocumented changes to data structure, semantics, and infrastructure. This type of data drift breaks processes and corrupts data.

For example, a transition from 10-digit to 12-digit ID numbers can affect thousands of applications. Or a change in IP address format can disrupt the data feeding a BI dashboard and go undetected for months.

Different types of data drift may be caused by:

- Data extraction process changes, e.g., a sensor is replaced and the measurement units change from Fahrenheit to Celsius

- Data source schema changes

- Data destination schema changes

The biggest challenge with data drift isn’t fixing the problem, but quickly and correctly identifying and reacting to the problem.
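As a rough illustration of what quick identification can look like, the sketch below compares an incoming record against the fields and types a pipeline expects, then reports anything missing, changed, or new. The schema, the field names, and the `detect_schema_drift` function are hypothetical, chosen to echo the ID and IP address examples above.

```python
from typing import Any, Dict, List

# Hypothetical schema a downstream consumer expects.
EXPECTED_SCHEMA: Dict[str, type] = {
    "customer_id": str,
    "ip_address": str,
    "temperature": float,
}


def detect_schema_drift(record: Dict[str, Any], expected: Dict[str, type]) -> List[str]:
    """Return human-readable findings; an empty list means no drift was seen."""
    findings: List[str] = []
    for field, expected_type in expected.items():
        if field not in record:
            findings.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            actual_type = type(record[field]).__name__
            findings.append(
                f"type change: {field} is {actual_type}, expected {expected_type.__name__}"
            )
    for field in record:
        if field not in expected:
            findings.append(f"new field: {field}")
    return findings


# The source now sends a numeric 12-digit ID and a renamed IP field.
incoming = {"customer_id": 123456789012, "temperature": 21.5, "ip_addr_v6": "2001:db8::1"}
findings = detect_schema_drift(incoming, EXPECTED_SCHEMA)
```

Every value in `incoming` is valid on its own terms, yet the check still raises three findings, which is the kind of signal that would otherwise go unnoticed until a dashboard breaks.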

Common Data Quality Issues

Data drift is distinct from data quality. It occurs regardless of data quality.

In other words, data drift can occur even when all the data entering and processed through a system is correct. Hence, right data, wrong column.

Data quality is concerned with whether the data is “right” or “wrong”. Common sources of data quality issues (or data being “wrong”) include:

- The data was entered incorrectly.

- Quality control failed to root out data quality problems.

- Duplicate records were created.

- The data isn’t used or interpreted correctly.

- Not all known data about an object was integrated.

- The data is too old to be useful anymore.

As you can imagine, data drift and poor data quality cause similar problems. But the ways you address them are vastly different.
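One way to picture the difference is a check that asks two questions about a failing value: is it invalid for its own column, and would it be valid in a different column? The sketch below is a simplified assumption of how such a triage could work. The column names, the validation patterns, and the `diagnose` function are all hypothetical.

```python
import re
from typing import Callable, Dict

# Hypothetical per-column validators, deliberately simple.
VALIDATORS: Dict[str, Callable[[str], bool]] = {
    "email": lambda v: re.fullmatch(r"[^@\s]+@[^@\s]+\.[^@\s]+", v) is not None,
    "zip_code": lambda v: re.fullmatch(r"\d{5}", v) is not None,
}


def diagnose(column: str, value: str) -> str:
    """Classify a value as ok, a possible drift symptom, or a possible quality issue."""
    if VALIDATORS[column](value):
        return "ok"
    # The value is wrong for this column. If it fits another column,
    # the data may be right but landing in the wrong place.
    for other, is_valid in VALIDATORS.items():
        if other != column and is_valid(value):
            return f"possible drift: looks like {other}"
    return "possible quality issue"


ok_result = diagnose("email", "jane@example.com")
drift_result = diagnose("zip_code", "jane@example.com")
quality_result = diagnose("zip_code", "9410")
```

A value that fits another column points toward an upstream structural change to investigate, while a value that fits nowhere points toward cleansing or correcting the record itself.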

How Do You Handle Data Drift?

Modern enterprises rely on thousands of integrations of specialized applications across many platforms as the primary engine of their business logic. The result is a system with chain-link logic, which means the system fails when any one of these integrations doesn’t work.

The key to avoiding data drift is to depart from data integration processes that ignore the changing nature of relationships between integrations. Instead, embrace the practice of DataOps, which assumes change is constant. By building for change, you not only manage data drift but you can also begin to harness its power.

Other, more tactical steps you can take to minimize the chances of a broken link in the chain are:

- Reduce dependency on custom code

- Minimize schema specification

- Require fully instrumented pipelines

- Decouple data pipelines from infrastructure

- Build for intent instead of semantics

- Assume multiple platforms

- Abstract away the “how” and focus on the “what”
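As one possible reading of "minimize schema specification," a pipeline stage can name only the fields it actually needs and pass everything else through untouched. The sketch below is an illustration under that assumption, not a StreamSets API. The `mask_email` function and the record fields are hypothetical.

```python
from typing import Any, Dict


def mask_email(record: Dict[str, Any]) -> Dict[str, Any]:
    """Mask the email field if present; leave every other field as it arrived."""
    out = dict(record)  # copy, so unknown or newly added fields pass through
    email = out.get("email")
    if isinstance(email, str) and "@" in email:
        local, domain = email.split("@", 1)
        out["email"] = local[:1] + "***@" + domain
    return out


# A new upstream field (loyalty_tier) appears without breaking the stage.
masked = mask_email({"email": "jane@example.com", "loyalty_tier": "gold"})
untouched = mask_email({"customer_id": "42"})
```

Because the stage never enumerates the full record layout, a source that adds, removes, or reorders unrelated fields does not force a code change here.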

Architect for Change with StreamSets

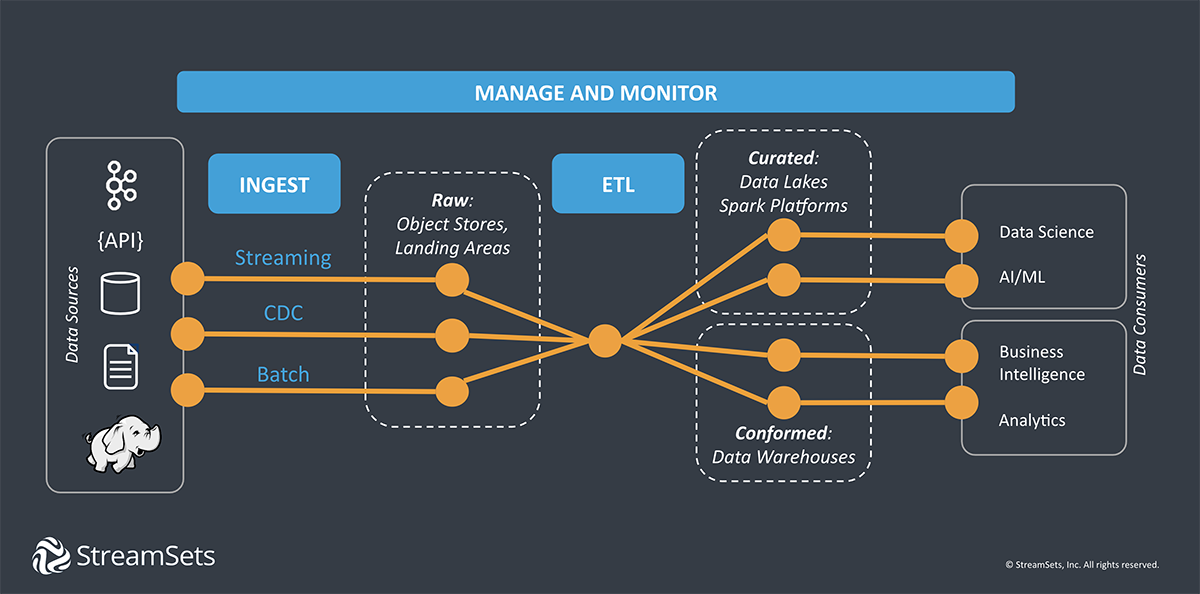

StreamSets was built from the ground up to address this monster of a design problem by providing:

- A wide breadth of connectivity and data platform support

- Built-in understanding of data sources, destinations, and underlying processing platforms

- Powerful extensibility for sophisticated data engineering

- Templates and reusable components to help empower ETL developers

- On-premises and cloud deployment options

And by providing a single, cloud-based interface through which you can see and manage your entire infrastructure, StreamSets DataOps Platform unlocks the power of modern data architectures.

With the right data integration platform, you can keep up with the latest innovations and land your data wherever it needs to be. The question of data warehouse vs. data lake becomes moot.