Yannick Jaquier is a Database Technical Architect at STMicroelectronics in Geneva, Switzerland. Recently, Yannick started experimenting with StreamSets Data Collector Engine‘s Oracle CDC Client origin, building pipelines for Oracle replication–replicating data to a second Oracle database, a JSON file, and a MySQL database. Yannick documented his journey very thoroughly, and kindly gave us permission to repost his findings from his original blog entry.

Yannick Jaquier is a Database Technical Architect at STMicroelectronics in Geneva, Switzerland. Recently, Yannick started experimenting with StreamSets Data Collector Engine‘s Oracle CDC Client origin, building pipelines for Oracle replication–replicating data to a second Oracle database, a JSON file, and a MySQL database. Yannick documented his journey very thoroughly, and kindly gave us permission to repost his findings from his original blog entry.

Preamble

I came across a nice overview of Oracle CDC with StreamSets by Franck Pachot and shared it with a few teammates; they were all interested in the StreamSets Data Collector Engine. One of the main reasons is the obvious cost cutting versus Oracle GoldenGate; we have implemented GoldenGate in a project deployed worldwide. The Data Collector product is free and can handle INSERT, UPDATE, SELECT_FOR_UPDATE, and DELETE DML operations for one or more tables in a database. To handle DDL you have to check the “Produce Events” checkbox and handle the generated events in an appropriate target; this is a little bit more complex and outside the scope of this blog post.

I decided to try the product out and implement it for what we currently do with GoldenGate: building a reporting environment of our production database. The target is also an Oracle database but might in future be MySQL.

My testing environment for Oracle replication comprises three servers:

server1.domain.com(192.168.56.101) is the primary database server.server2.domain.com(192.168.56.102) is the secondary database server. The server hosting the databases (Oracle & MySQL) where data should land.server4.domain.com(192.168.56.104) is the StreamSets Data Collector server.

Oracle database release is 18c Enterprise Edition Release 18.3.0.0.0.Data Collector is release 3.4.1. MySQL release is 8.0.12 MySQL Community Server.

The three servers are in fact VirtualBox guests running Oracle Linux Server release 7.5.

StreamSets Data Collector installation

I first created a Linux streamsets account (in users group) with a /streamsets dedicated filesystem:

[streamsets@server4 ~]$ id uid=1001(streamsets) gid=100(users) groups=100(users) [streamsets@server4 ~]$ pwd /streamsets [streamsets@server4 ~]$ ll total 246332 -rw-r--r-- 1 streamsets users 248202258 Aug 2 15:32 streamsets-datacollector-core-3.4.1.tgz

[streamsets@server4 streamsets]$ tar xvzf streamsets-datacollector-core-3.4.1.tgz [streamsets@server4 ~]$ /streamsets/streamsets-datacollector-3.4.1/bin/streamsets dc Can't find java, please set JAVA_HOME pointing to your java installation

I installed Java SE Development Kit 8 (jdk-8u181-linux-x64.rpm). Only release 8 is supported so far

[streamsets@server4 streamsets]$ /streamsets/streamsets-datacollector-3.4.1/bin/streamsets dc Java 1.8 detected; adding $SDC_JAVA8_OPTS of “-XX:+UseConcMarkSweepGC -XX:+UseParNewGC -Djdk.nio.maxCachedBufferSize=262144” to $SDC_JAVA_OPTS Configuration of maximum open file limit is too low: 1024 (expected at least 32768). Please consult https://goo.gl/LgvGFl

At the end of /etc/security/limits.conf I added:

streamsets soft nofile 32768 streamsets hard nofile 32768

Now I can launch the process with:

[streamsets@server4 streamsets]$ /streamsets/streamsets-datacollector-3.4.1/bin/streamsets dc Java 1.8 detected; adding $SDC_JAVA8_OPTS of "-XX:+UseConcMarkSweepGC -XX:+UseParNewGC -Djdk.nio.maxCachedBufferSize=262144" to $SDC_JAVA_OPTS Logging initialized @1033ms to org.eclipse.jetty.util.log.Slf4jLog Running on URI : 'http://server4:18630'

Then from any browser (at this url for me, http://server4.domain.com:18630/) you should get this login window (admin/admin as default username/password):

Once logged in you see:

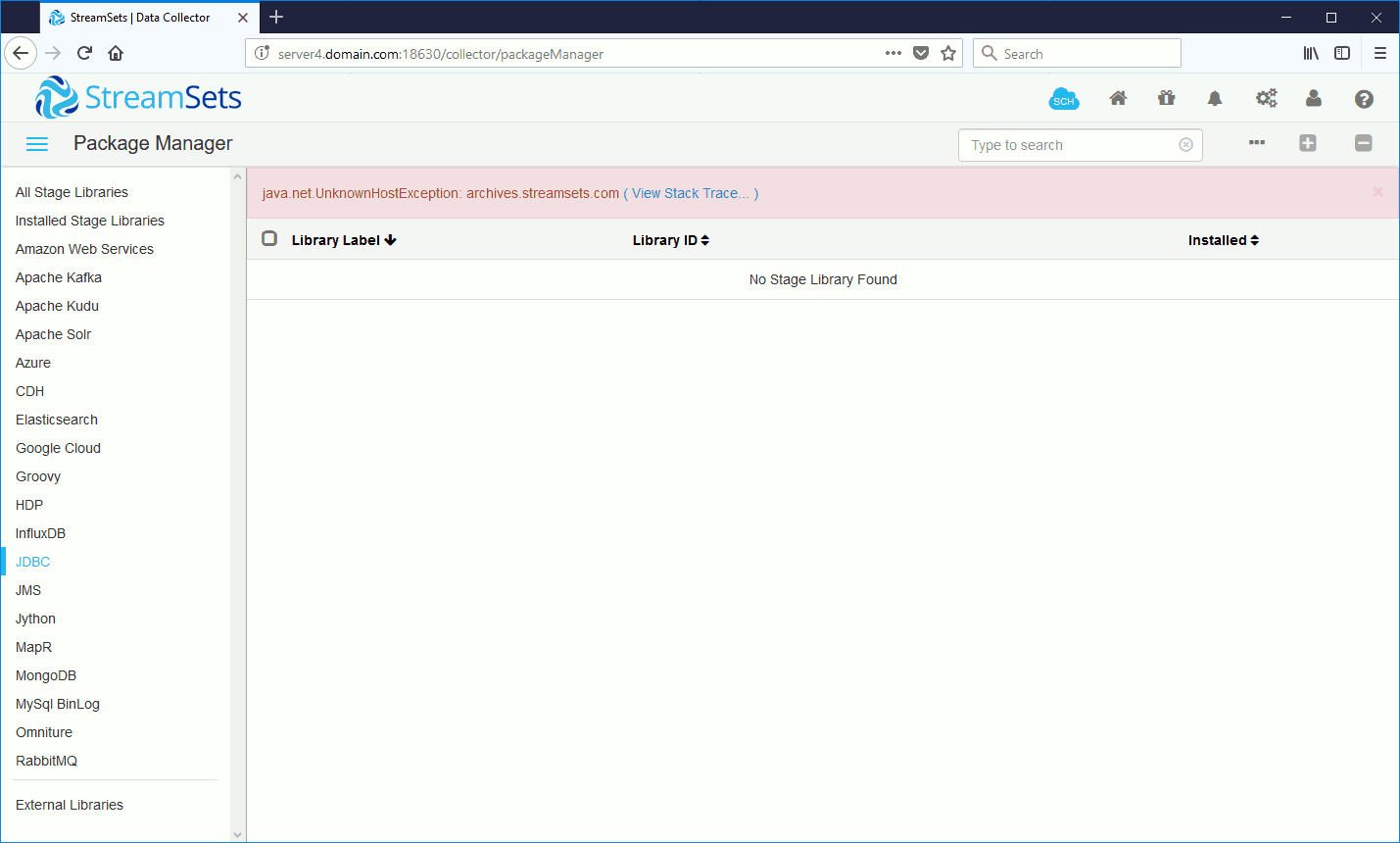

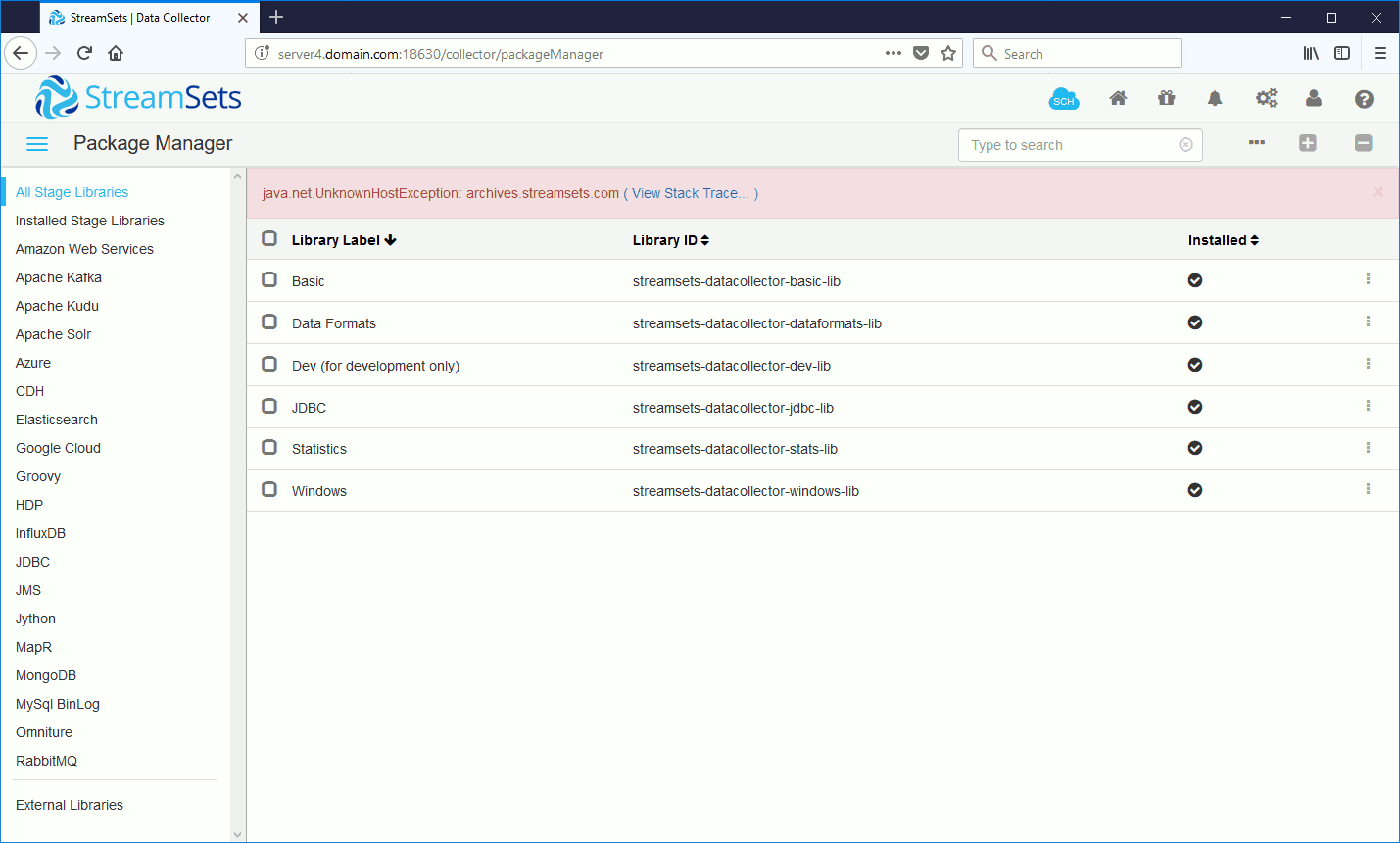

By default, the Oracle CDC (Change Data Capture) origin requires the Oracle JDBC thin driver. Click on Package Manager in the top right tool bar (third icon). If you go in JDBC you see that nothing is there (the error message is because I’m behind a company proxy):

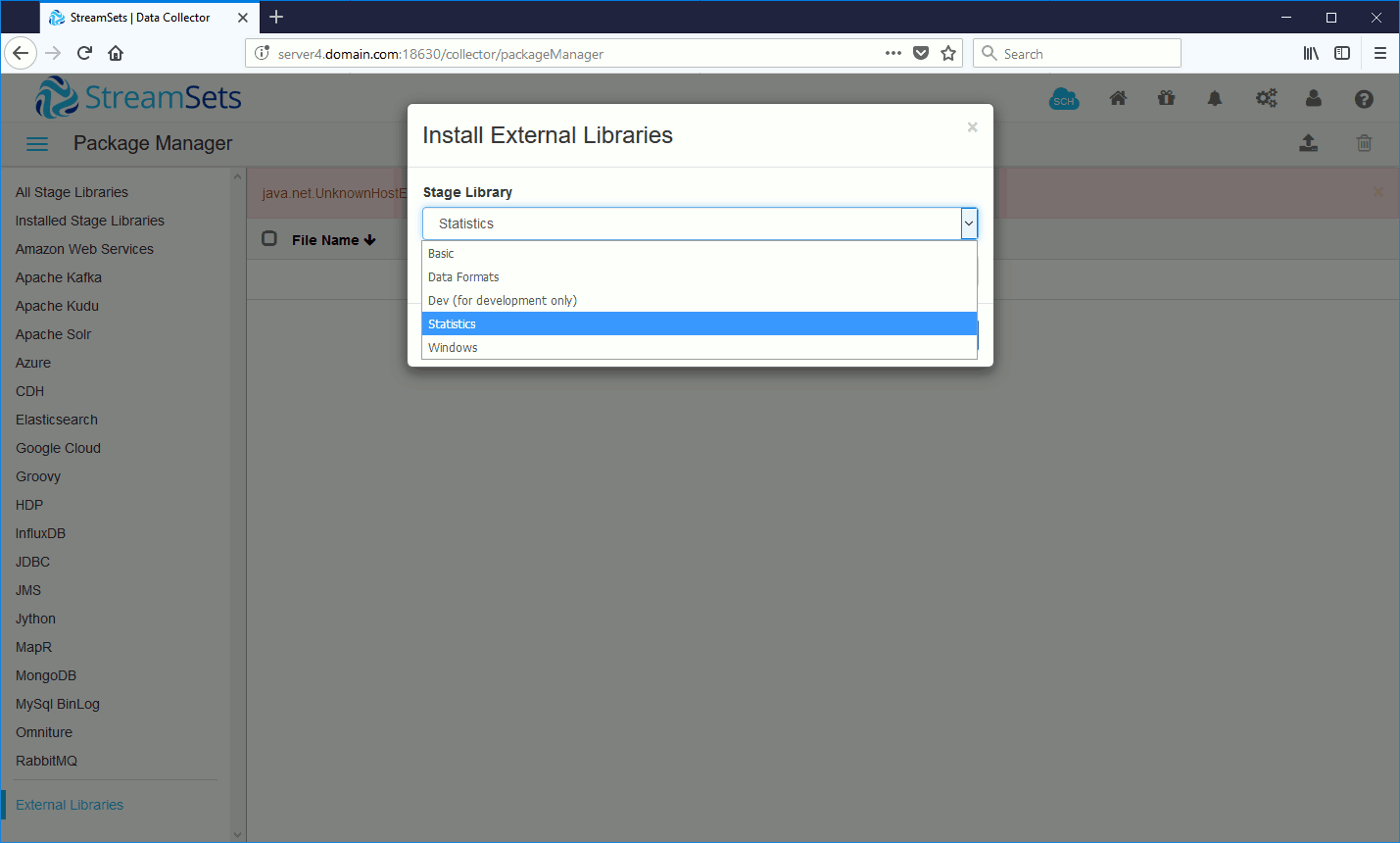

When I tried to import my JDBC thin driver file (ojdbc8.jar, the name for 18c (18.3) is the same as for 12.2.0.1, but the size differs) I saw that the JDBC category was not there:

I spent a bit of time before discovering that all of the instructions were on the StreamSets Data Collector product page:

[streamsets@server4 ~]$ /streamsets/streamsets-datacollector-3.4.1/bin/streamsets stagelibs -list

curl: (6) Could not resolve host: archives.streamsets.com; Unknown error

Failed! running curl -s -f https://archives.streamsets.com/datacollector/3.4.1/tarball/stage-lib-manifest.properties.sha1

-SL -o /tmp/sdc-setup-20988/stage-lib-manifest.properties.sha1 in /home/streamsets

[streamsets@server4 ~]$ export https_proxy='http://proxy_account:proxy_password@proxy_host:proxy_port'

[streamsets@server4 ~]$ echo $https_proxy

http://proxy_account:proxy_password@proxy_host:proxy_port

[streamsets@server4 streamsets]$ /streamsets/streamsets-datacollector-3.4.1/bin/streamsets stagelibs -list

StreamSets Data Collector

Stage Library Repository: https://archives.streamsets.com/datacollector/3.4.1/tarball

ID Name Installed

=================================================================================================================

streamsets-datacollector-aerospike-lib Aerospike 3.15.0.2 NO

streamsets-datacollector-apache-kafka_0_10-lib Apache Kafka 0.10.0.0 NO

streamsets-datacollector-apache-kafka_0_11-lib Apache Kafka 0.11.0.0 NO

streamsets-datacollector-apache-kafka_0_9-lib Apache Kafka 0.9.0.1 NO

streamsets-datacollector-apache-kafka_1_0-lib Apache Kafka 1.0.0 NO

streamsets-datacollector-apache-kudu_1_3-lib Apache Kudu 1.3.0 NO

streamsets-datacollector-apache-kudu_1_4-lib Apache Kudu 1.4.0 NO

.

.

streamsets-datacollector-jdbc-lib JDBC NO

.

.

[streamsets@server4 streamsets]$ /streamsets/streamsets-datacollector-3.4.1/bin/streamsets stagelibs -install=streamsets-datacollector-jdbc-lib Downloading: https://archives.streamsets.com/datacollector/3.4.1/tarball/streamsets-datacollector-jdbc-lib-3.4.1.tgz ######################################################################## 100.0% Stage library streamsets-datacollector-jdbc-lib installed

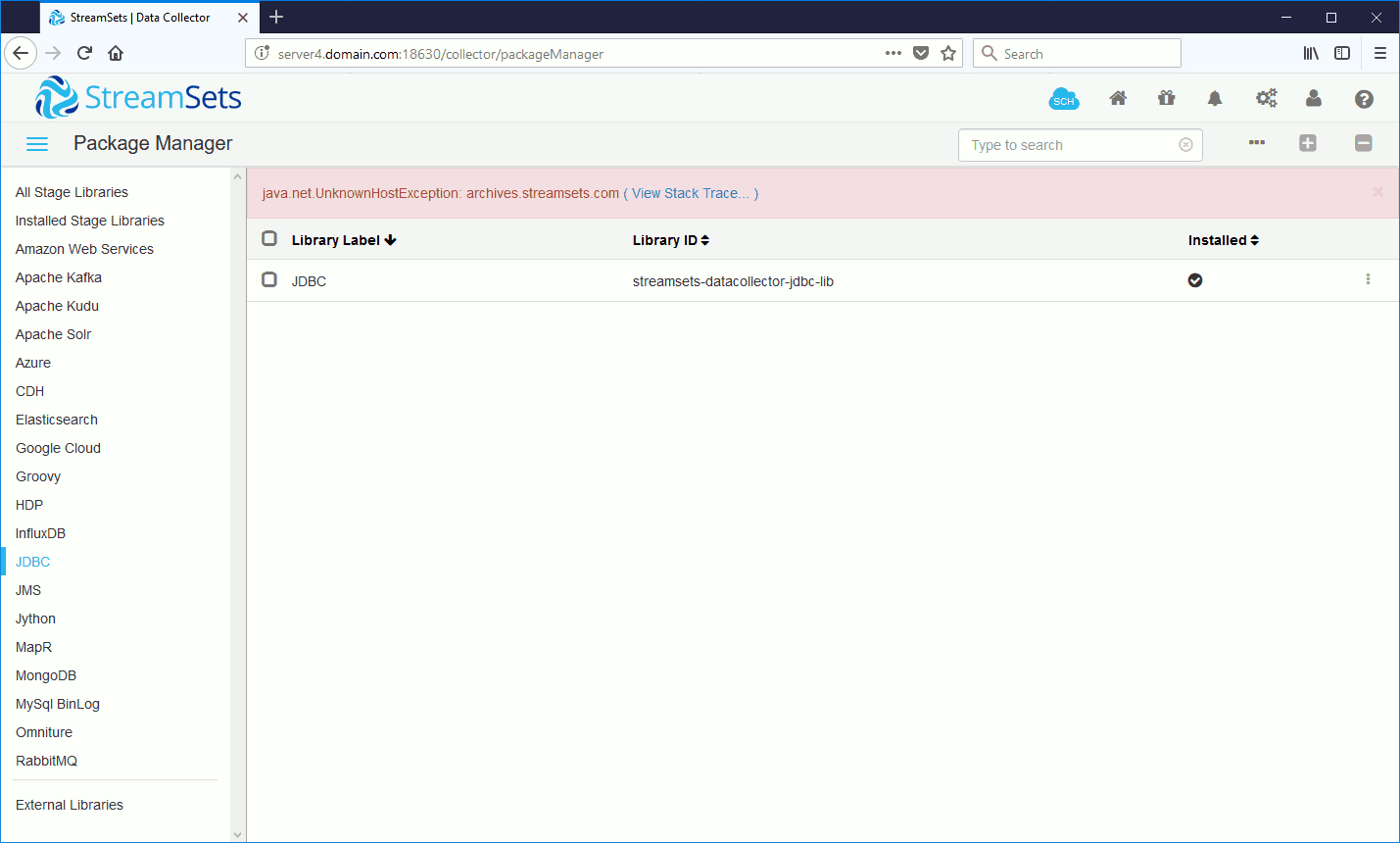

Relaunching Streamsets Data Collector and returning to the web interface I now saw JDBC as a possible library:

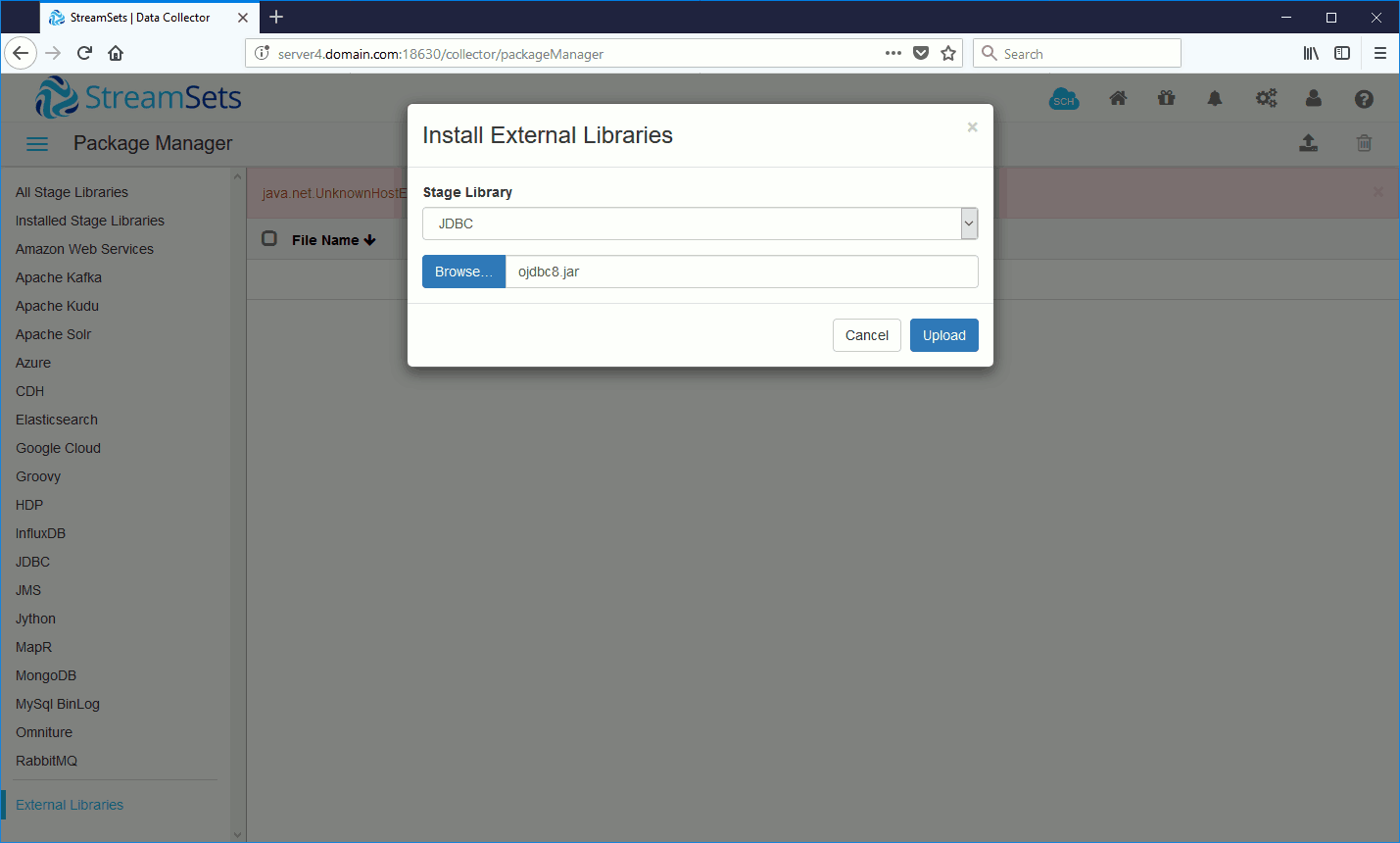

Next I imported the JDBC driver:

You should be prompted to restart StreamSets Data Collector and see the below screen:

Oracle Database LogMiner Configuration

This source multi-tenant Oracle database is common for the three scenarios I decided to test, so I had to configure it only once. On this source Oracle database I created a global account able to manage LogMiner (global because LogMiner is accessible from the root pluggable database in a multi-tenant architecture):

SQL> ALTER SESSION SET container=cdb$root; SESSION altered. SQL> CREATE USER c##streamsets IDENTIFIED BY "streamsets" container=ALL; USER created. SQL> GRANT CREATE SESSION, ALTER SESSION, SET container, SELECT ANY dictionary, logmining, execute_catalog_role TO c##streamsets container=ALL; GRANT succeeded. SQL> ALTER SESSION SET container=pdb1; SESSION altered.

Change the source Oracle database to archivelog mode and activate the default supplemental log:

SQL> shutdown IMMEDIATE; DATABASE closed. DATABASE dismounted. ORACLE instance shut down. SQL> startup mount; ORACLE instance started. Total SYSTEM Global Area 1048575184 bytes Fixed SIZE 8903888 bytes Variable SIZE 729808896 bytes DATABASE Buffers 301989888 bytes Redo Buffers 7872512 bytes DATABASE mounted. SQL> ALTER DATABASE archivelog; DATABASE altered. SQL> ALTER DATABASE OPEN; DATABASE altered. SQL> SELECT supplemental_log_data_min, supplemental_log_data_pk, supplemental_log_data_all FROM v$database; SUPPLEME SUP SUP -------- --- --- NO NO NO SQL> ALTER DATABASE ADD supplemental LOG data; DATABASE altered. SQL> SELECT supplemental_log_data_min, supplemental_log_data_pk, supplemental_log_data_all FROM v$database; SUPPLEME SUP SUP -------- --- --- YES NO NO SQL> ALTER SYSTEM switch logfile; SYSTEM altered.

I also created a test schema on my pluggable database (pdb1) to handle my test table:

SQL> CREATE USER test01 IDENTIFIED BY test01; USER created. SQL> GRANT CONNECT,RESOURCE TO test01; GRANT succeeded. SQL> ALTER USER test01 quota unlimited ON users; USER altered.

I created a test table and added the supplemental log:

SQL> CREATE TABLE test01.table01 ( id NUMBER NOT NULL, descr VARCHAR2(50), CONSTRAINT table01_pk PRIMARY KEY (id) enable ); TABLE created. SQL> ALTER TABLE test01.table01 ADD supplemental LOG data (PRIMARY KEY) columns; TABLE altered. SQL> SET lines 200 pages 1000 SQL> col table_name FOR a15 SQL> col log_group_name FOR a15 SQL> col owner FOR a15 SQL> SELECT * FROM dba_log_groups WHERE owner='TEST01'; OWNER LOG_GROUP_NAME TABLE_NAME LOG_GROUP_TYPE ALWAYS GENERATED --------------- --------------- --------------- ------------------- ----------- -------------- TEST01 SYS_C007365 TABLE01 PRIMARY KEY LOGGING ALWAYS GENERATED NAME

And inserted a few rows in it to simulate an already existing environment:

SQL> INSERT INTO test01.table01 VALUES(1,'One'); 1 ROW created. SQL> INSERT INTO test01.table01 VALUES(2,'Two'); 1 ROW created. SQL> COMMIT; COMMIT complete.

On the source database I generated a dictionary in the redo log. If you choose to set “Dictionary Source” to Online Catalog then this step is not mandatory. It is also much faster on small deployments to use the Online Catalog so it’s really up to you:

SQL> ALTER SESSION SET container=cdb$root; SESSION altered. SQL> EXECUTE dbms_logmnr_d.build(options=> dbms_logmnr_d.store_in_redo_logs); PL/SQL PROCEDURE successfully completed. SQL> col name FOR a60 SQL> SET lines 200 pages 1000 SQL> SELECT name,dictionary_begin,dictionary_end FROM v$archived_log WHERE name IS NOT NULL ORDER BY recid DESC; NAME DIC DIC ------------------------------------------------------------ --- --- /u01/app/oracle/oradata/ORCL/arch/1_135_983097959.dbf YES YES /u01/app/oracle/oradata/ORCL/arch/1_134_983097959.dbf NO NO /u01/app/oracle/oradata/ORCL/arch/1_133_983097959.dbf NO NO /u01/app/oracle/oradata/ORCL/arch/1_132_983097959.dbf NO NO /u01/app/oracle/oradata/ORCL/arch/1_131_983097959.dbf NO NO /u01/app/oracle/oradata/ORCL/arch/1_130_983097959.dbf NO NO /u01/app/oracle/oradata/ORCL/arch/1_129_983097959.dbf NO NO /u01/app/oracle/oradata/ORCL/arch/1_128_983097959.dbf YES YES /u01/app/oracle/oradata/ORCL/arch/1_127_983097959.dbf NO NO /u01/app/oracle/oradata/ORCL/arch/1_126_983097959.dbf NO NO

StreamSets Data Collector Oracle to Oracle Replication

Oracle Prerequisites

On the target Oracle database I created an account in my target pluggable database (pdb1):

SQL> CREATE USER test01 IDENTIFIED BY test01; USER created. SQL> GRANT CONNECT,RESOURCE TO test01; GRANT succeeded. SQL> ALTER USER test01 quota unlimited ON users; USER altered.

On the source database create an export directory and grant read and write on it to the test01 account:

SQL> ALTER SESSION SET container=pdb1; SESSION altered. SQL> CREATE OR REPLACE DIRECTORY tmp AS '/tmp'; DIRECTORY created. SQL> GRANT read,WRITE ON DIRECTORY tmp TO test01; GRANT succeeded.

Get the current System Change Number (SCN) on the source database with:

SQL> SELECT current_scn FROM v$database;

CURRENT_SCN

-----------

5424515

Finally export the schema with:

[oracle@server1 ~]$ expdp test01/test01@pdb1 dumpfile=table01.dmp directory=tmp tables=table01 flashback_scn=5424515 Export: Release 18.0.0.0.0 - Production on Wed Sep 5 13:02:23 2018 Version 18.3.0.0.0 Copyright (c) 1982, 2018, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production Starting "TEST01"."SYS_EXPORT_TABLE_01": test01/********@pdb1 dumpfile=table01.dmp directory=tmp tables=table01 flashback_scn=5424515 Processing object type TABLE_EXPORT/TABLE/TABLE_DATA Processing object type TABLE_EXPORT/TABLE/INDEX/STATISTICS/INDEX_STATISTICS Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS Processing object type TABLE_EXPORT/TABLE/STATISTICS/MARKER Processing object type TABLE_EXPORT/TABLE/TABLE Processing object type TABLE_EXPORT/TABLE/GRANT/OWNER_GRANT/OBJECT_GRANT Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT . . exported "TEST01"."TABLE01" 5.492 KB 2 rows Master table "TEST01"."SYS_EXPORT_TABLE_01" successfully loaded/unloaded ****************************************************************************** Dump file set for TEST01.SYS_EXPORT_TABLE_01 is: /tmp/table01.dmp Job "TEST01"."SYS_EXPORT_TABLE_01" successfully completed at Wed Sep 5 13:04:25 2018 elapsed 0 00:01:09 [oracle@server1 ~]$ ll /tmp/table01.dmp -rw-r----- 1 oracle dba 200704 Sep 5 13:04 /tmp/table01.dmp [oracle@server1 ~]$ scp /tmp/table01.dmp server2.domain.com:/tmp The authenticity of host 'server2.domain.com (192.168.56.102)' can't be established. ECDSA key fingerprint is SHA256:hduqTIePPHF3Y+N/ekuZKnnXbocm+PNS7yU/HCf1GEw. ECDSA key fingerprint is MD5:13:dc:e3:27:bc:4b:08:b8:bf:53:2a:15:3c:86:d7:c4. Are you sure you want to continue connecting (yes/no)? yes Warning: Permanently added 'server2.domain.com' (ECDSA) to the list of known hosts. oracle@server2.domain.com's password: table01.dmp 100% 196KB 19.0MB/s 00:00

Import the schema on the target database with something like (in the pdb1 pluggable database):

[oracle@server2 ~]$ impdp test01/test01@pdb1 file=table01.dmp directory=tmp Import: Release 18.0.0.0.0 - Production on Wed Sep 5 13:06:46 2018 Version 18.3.0.0.0 Copyright (c) 1982, 2018, Oracle and/or its affiliates. All rights reserved. Connected to: Oracle Database 18c Enterprise Edition Release 18.0.0.0.0 - Production Legacy Mode Active due to the following parameters: Legacy Mode Parameter: "file=table01.dmp" Location: Command Line, Replaced with: "dumpfile=table01.dmp" Master table "TEST01"."SYS_IMPORT_FULL_01" successfully loaded/unloaded Starting "TEST01"."SYS_IMPORT_FULL_01": test01/********@pdb1 dumpfile=table01.dmp directory=tmp Processing object type TABLE_EXPORT/TABLE/TABLE Processing object type TABLE_EXPORT/TABLE/TABLE_DATA . . imported "TEST01"."TABLE01" 5.492 KB 2 rows Processing object type TABLE_EXPORT/TABLE/GRANT/OWNER_GRANT/OBJECT_GRANT ORA-39083: Object type OBJECT_GRANT failed to create with error: ORA-01917: user or role 'C##STREAMSETS' does not exist Failing sql is: GRANT SELECT ON "TEST01"."TABLE01" TO "C##STREAMSETS" Processing object type TABLE_EXPORT/TABLE/CONSTRAINT/CONSTRAINT Processing object type TABLE_EXPORT/TABLE/INDEX/STATISTICS/INDEX_STATISTICS Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS Processing object type TABLE_EXPORT/TABLE/STATISTICS/MARKER Job "TEST01"."SYS_IMPORT_FULL_01" completed with 1 error(s) at Wed Sep 5 13:07:47 2018 elapsed 0 00:00:46

Follow Along and Begin Building Your Pipeline in StreamSets

StreamSets enables data engineers to build end-to-end smart data pipelines. Spend your time building, enabling and innovating instead of maintaining, rewriting and fixing.

StreamSets Data Collector Configuration

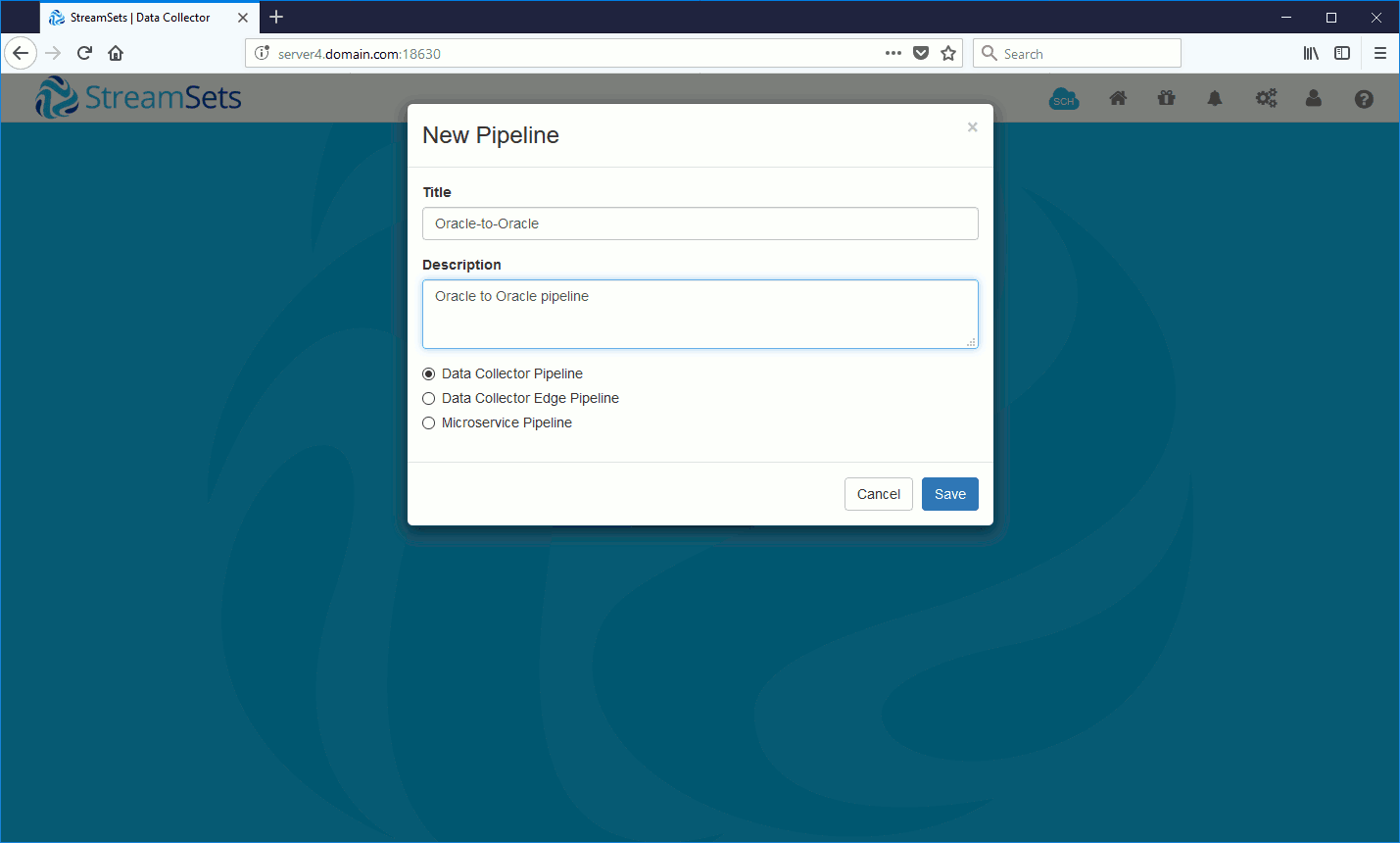

Create the pipeline:

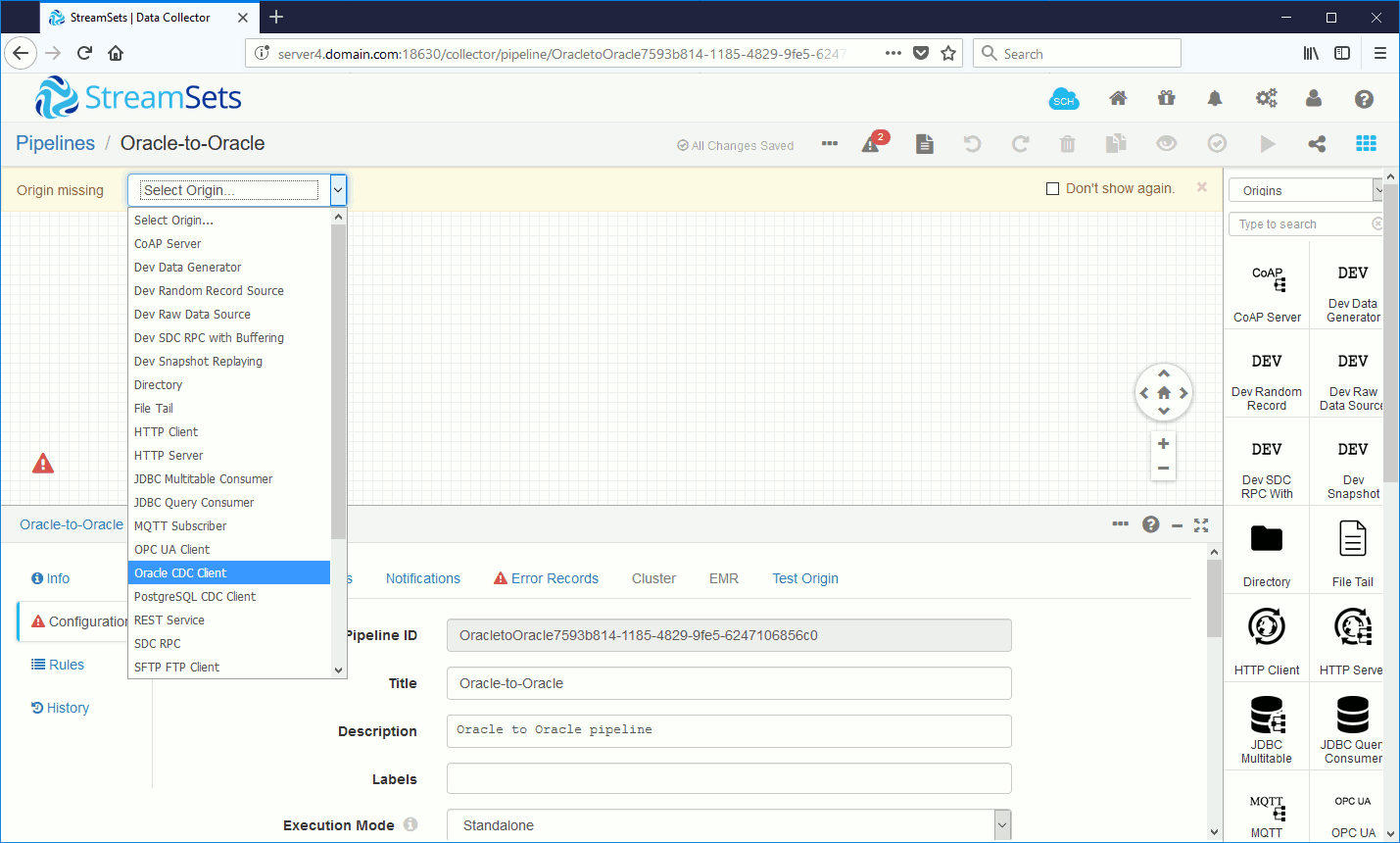

Choose Oracle CDC Client as the origin (for Oracle replication from the Origin this is the de facto option to choose):

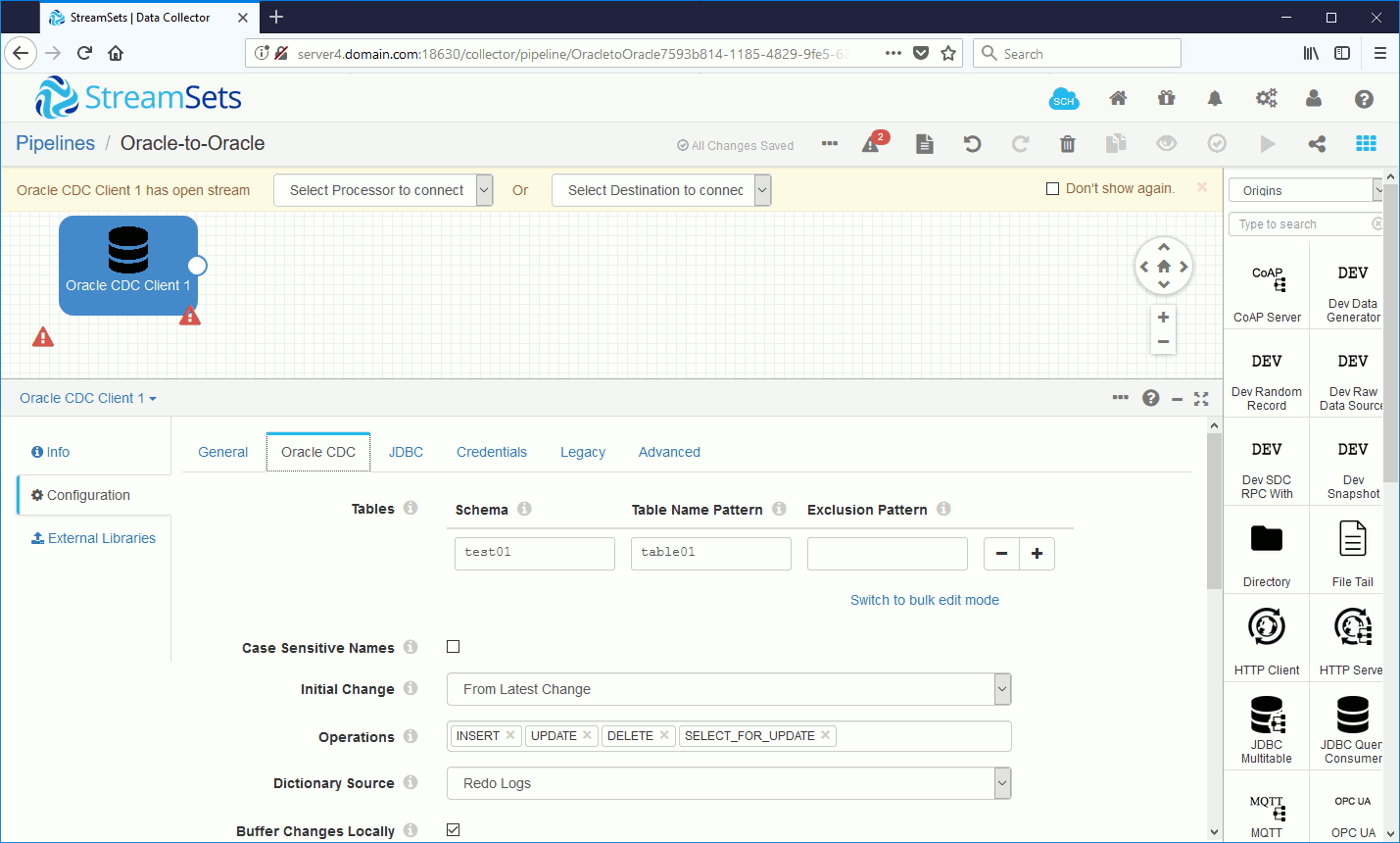

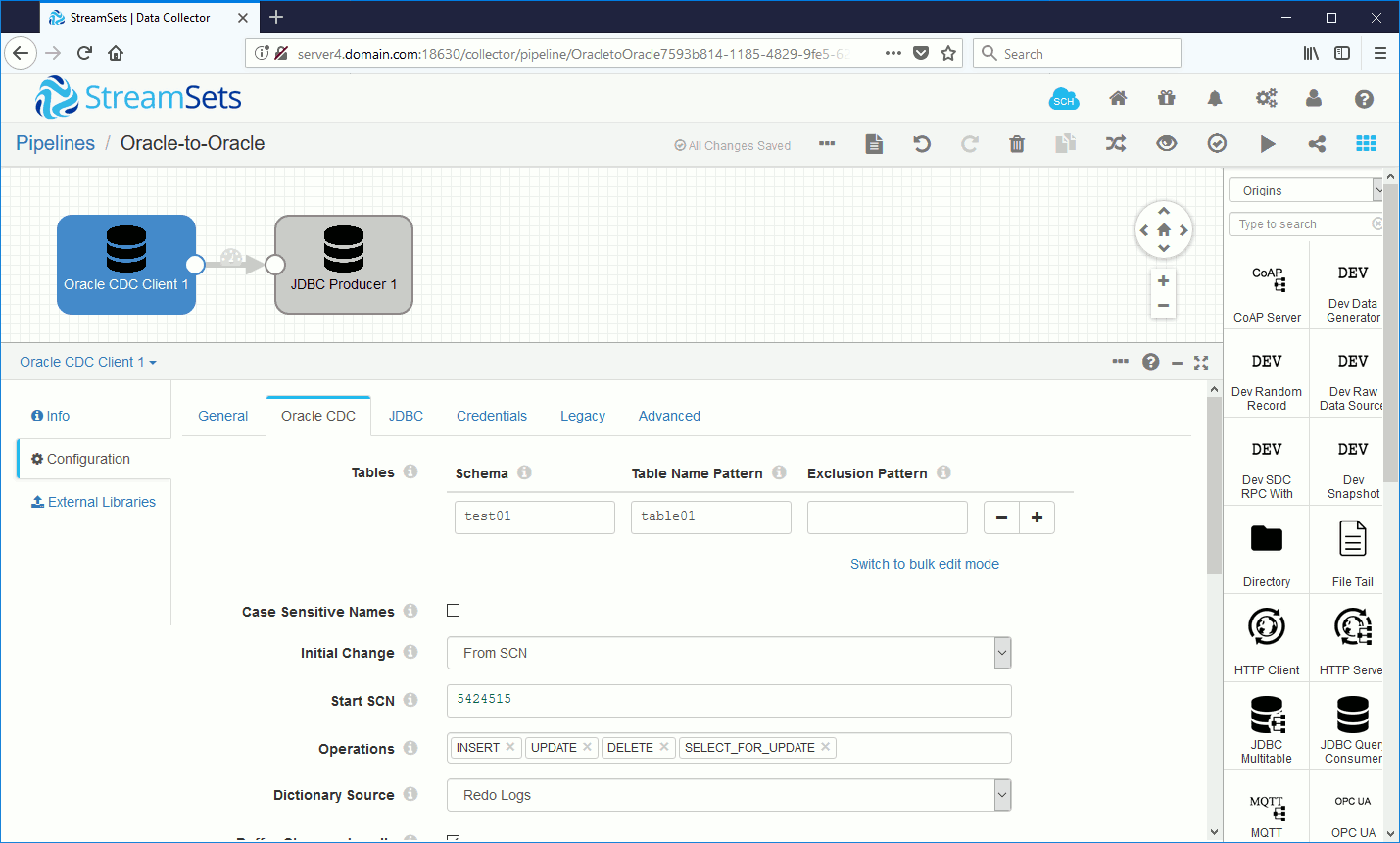

Configure all parameters. I have chosen the most reliable solution to get dictionary from redo logs in case we want to test schema changes (DDL). As the simplest test I have chosen ‘From Latest Change’ – this processes changes that arrive after you start the pipeline. I will re-configure it after the database settings:

Below query can help you to choose correct database time zone:

SQL> SELECT DBTIMEZONE FROM DUAL; DBTIME ------ +00:00

Set the JDBC connection string (I am in a multi-tenant configuration):

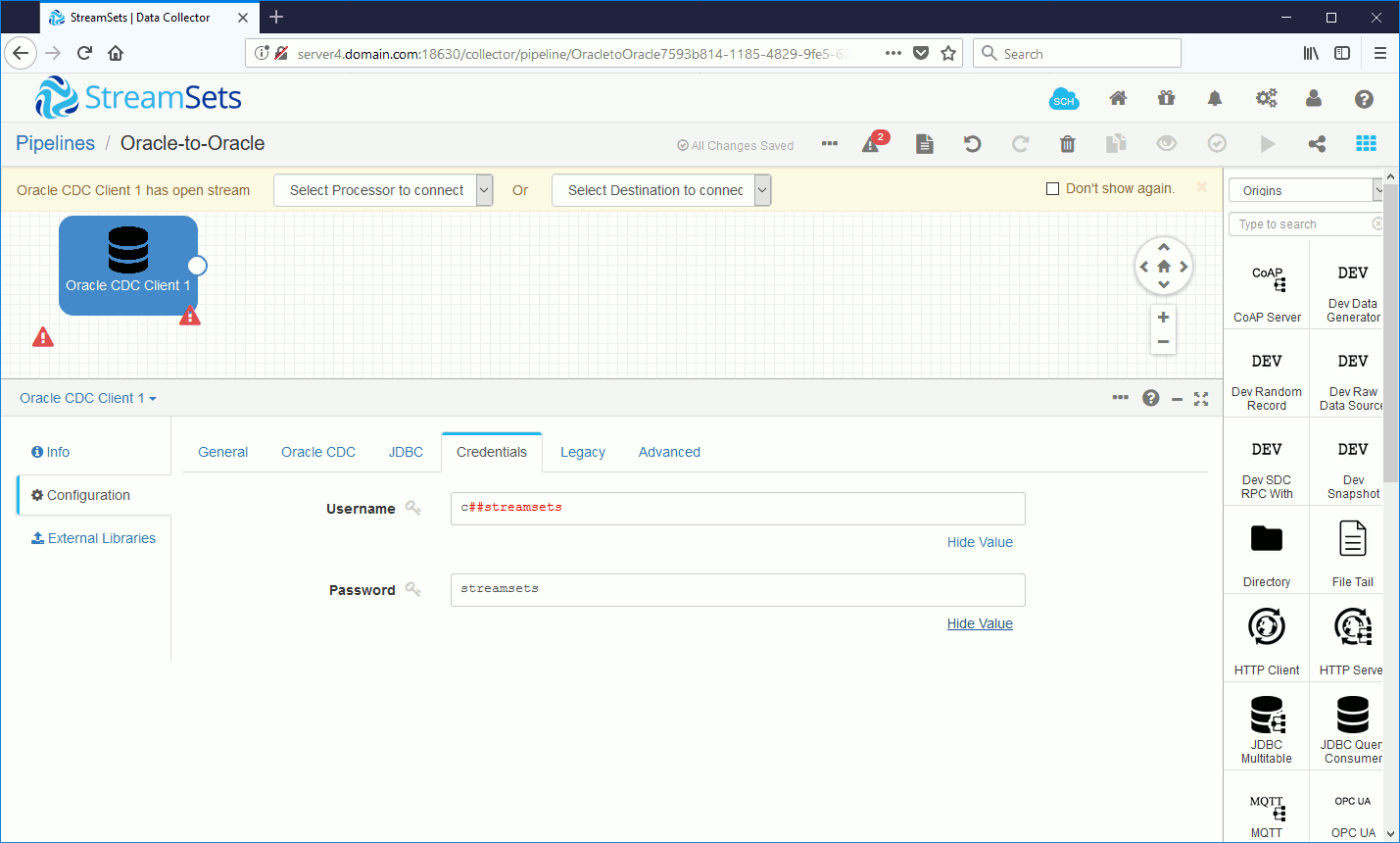

Use the global account we defined earlier:

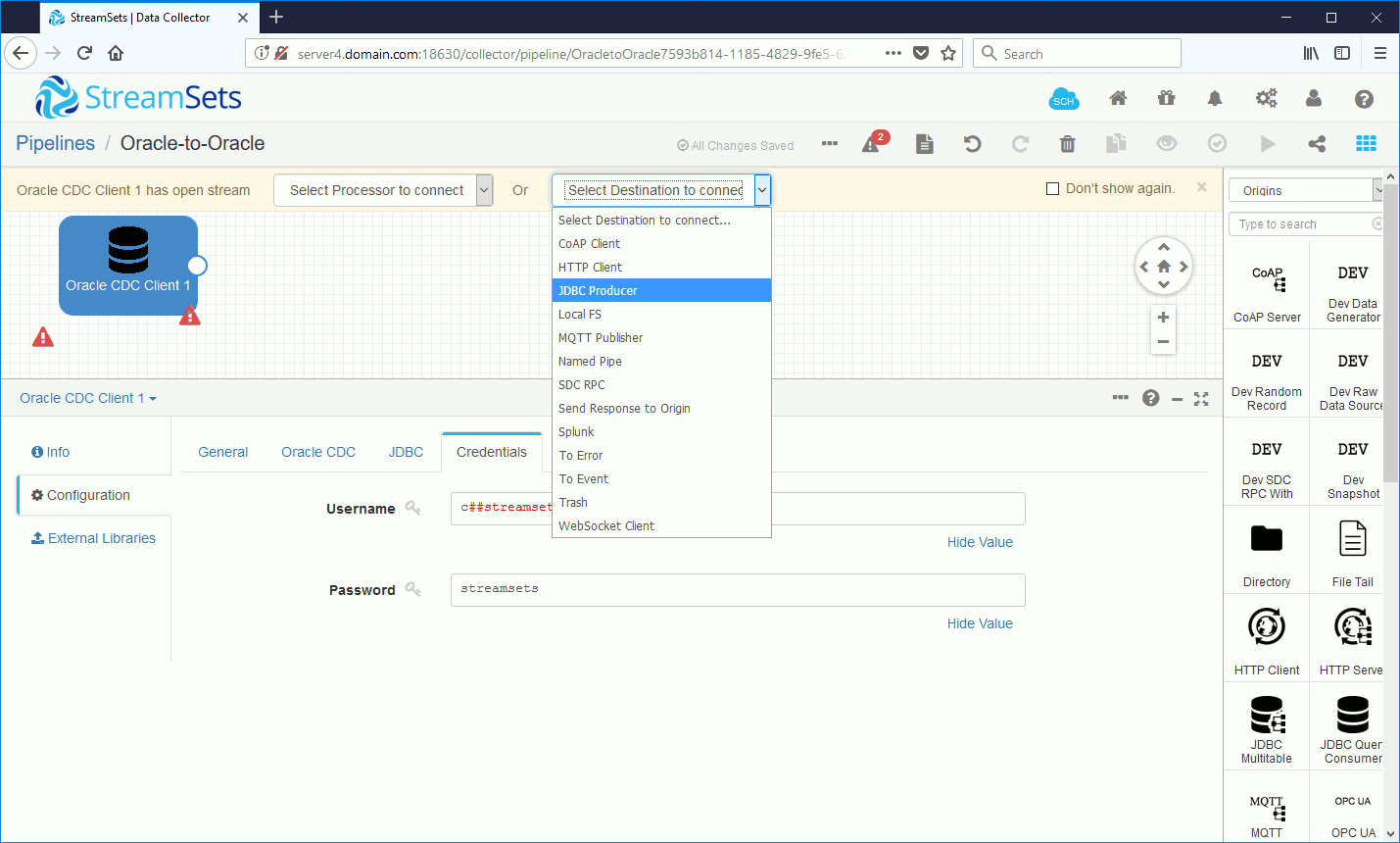

I didn’t add a processor to modify data between the source and target but obviously this is possible:

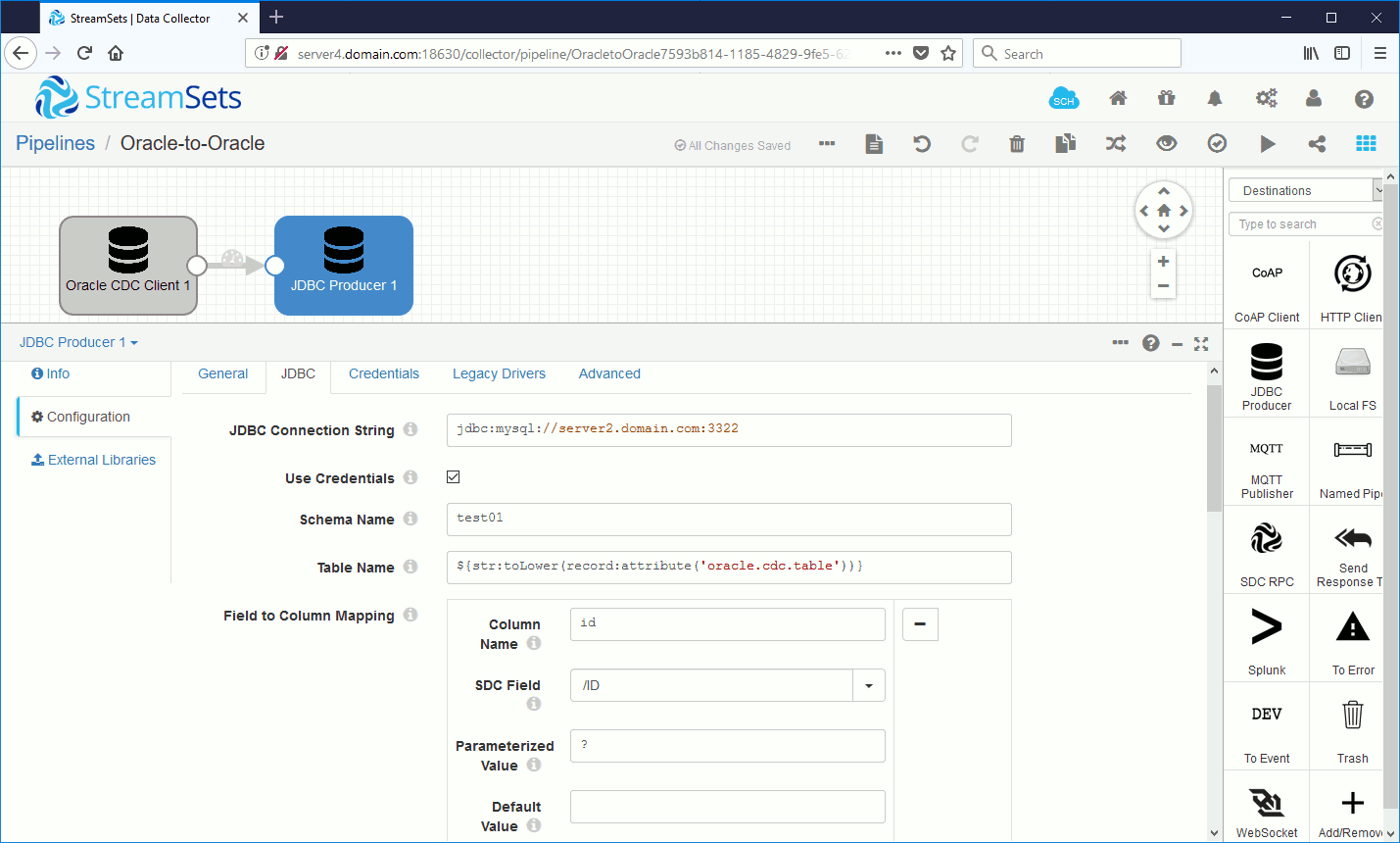

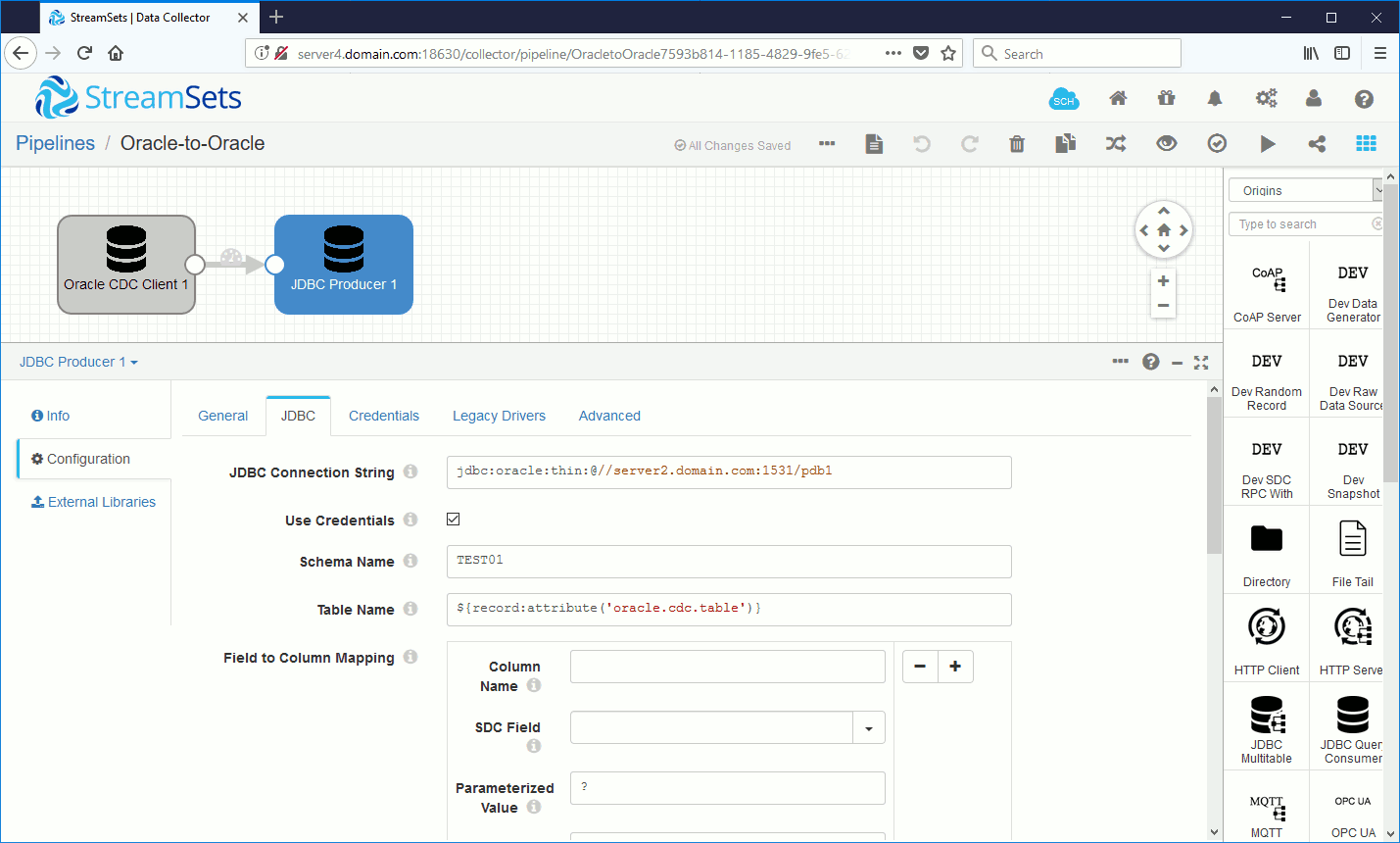

Now I set the JDBC connection string in the JDBC Producer destination. I am in a multi-tenant configuration but here I just connected to the pluggable destination database directly:

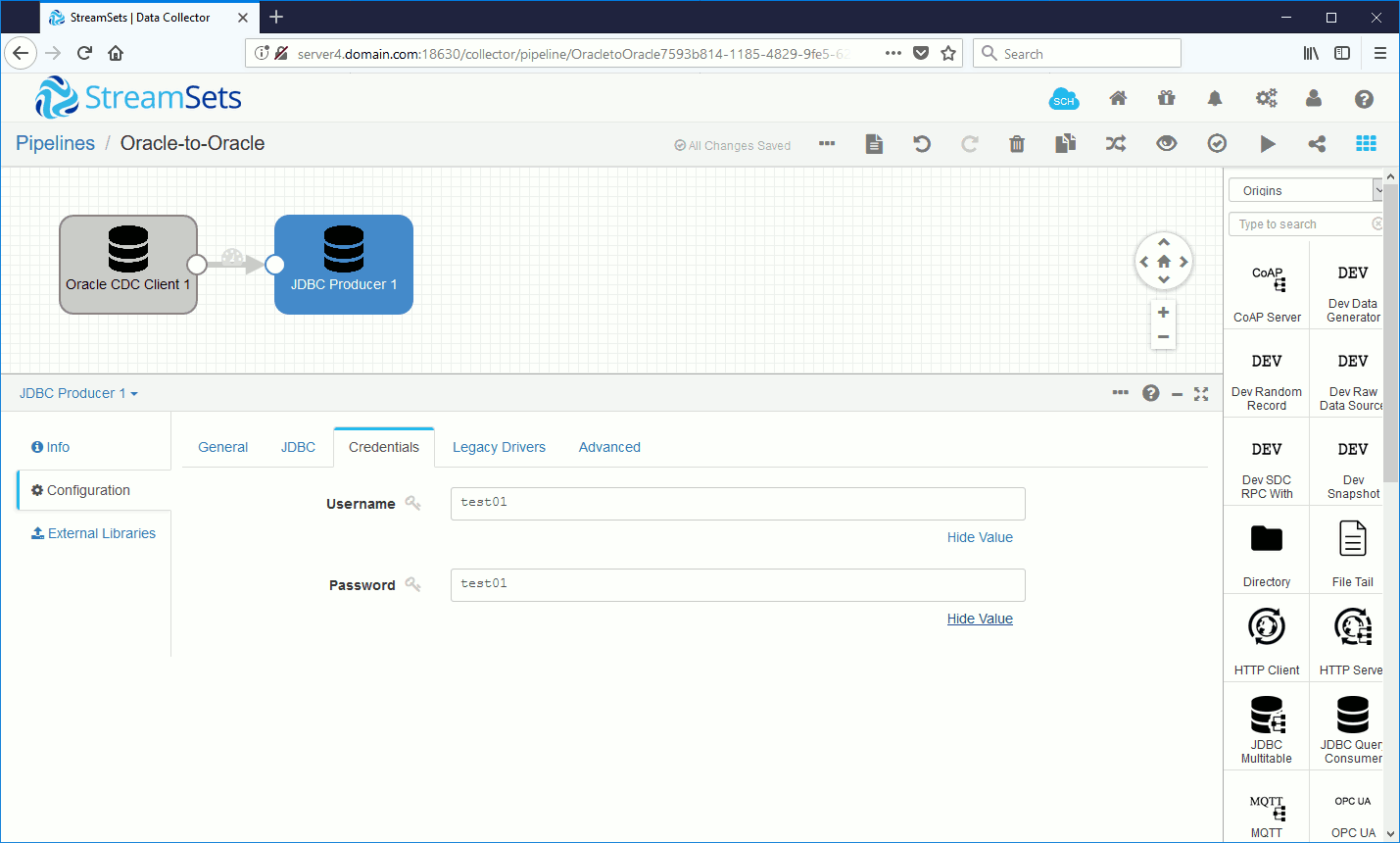

Local pluggable database user to connect with:

In the StreamSets configuration I configured the Oracle CDC Client to start at a particular SCN (the one I extracted above):

StreamSets Data Collector Engine Oracle to Oracle replication testing

Then on source database I started inserting new data:

SQL> ALTER SESSION SET container=pdb1; SESSION altered. SQL> INSERT INTO test01.table01 VALUES(3,'Three'); 1 ROW created. SQL> COMMIT; COMMIT complete.

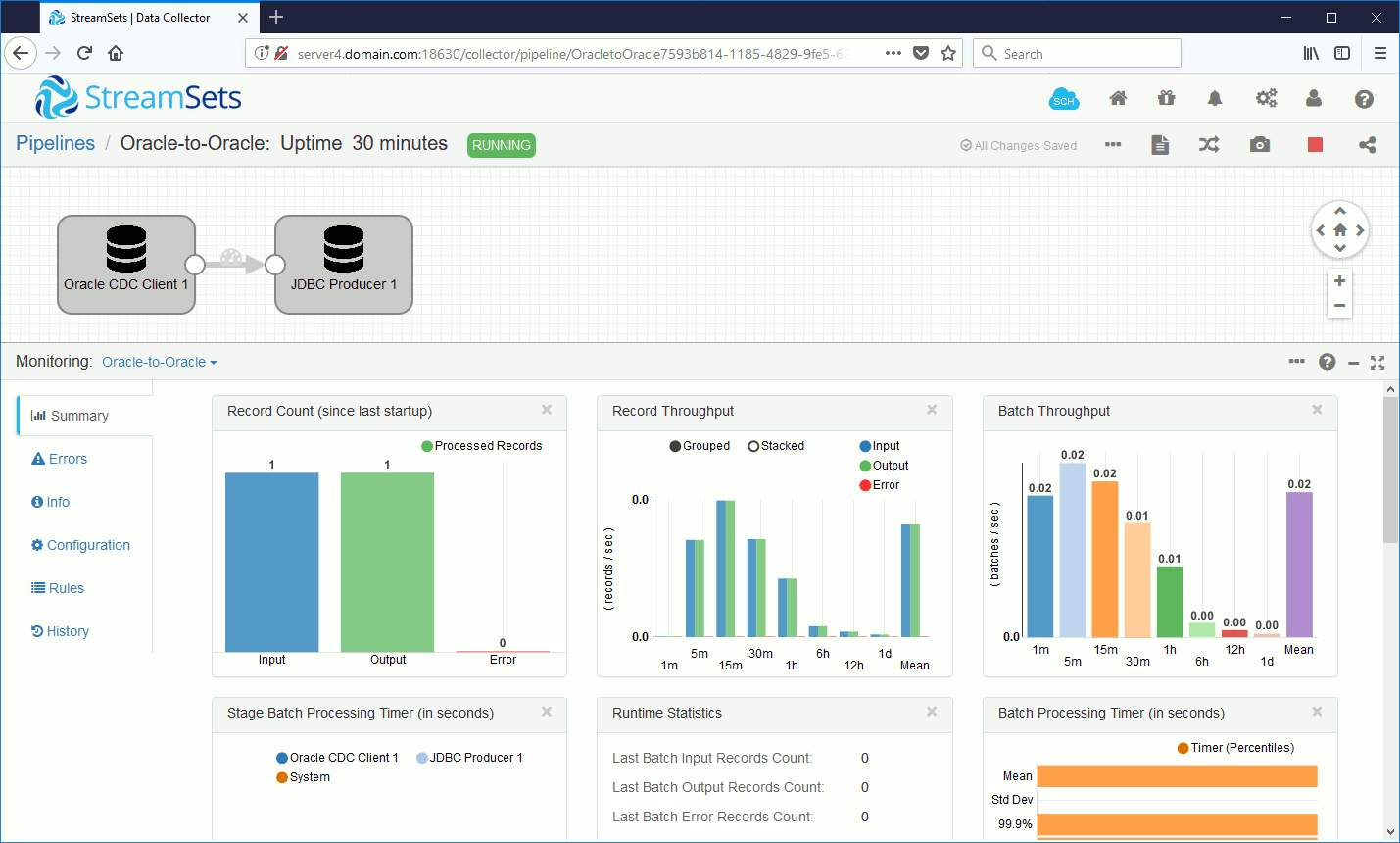

The data appeared on the target database, visible via a nice monitoring screen for the pipeline:

Then to generate a bit of traffic I used the below PL/SQL script (number of inserted rows is up to you; I have personally done multiple tests):

DECLARE

max_id NUMBER;

i NUMBER;

inserted_rows NUMBER:=10000;

BEGIN

SELECT MAX(id) INTO max_id FROM test01.table01;

i:=max_id+1;

LOOP

INSERT INTO test01.table01 VALUES(i,dbms_random.string('U', 20));

COMMIT;

i:=i+1;

EXIT WHEN i>max_id + inserted_rows;

END LOOP;

END;

/

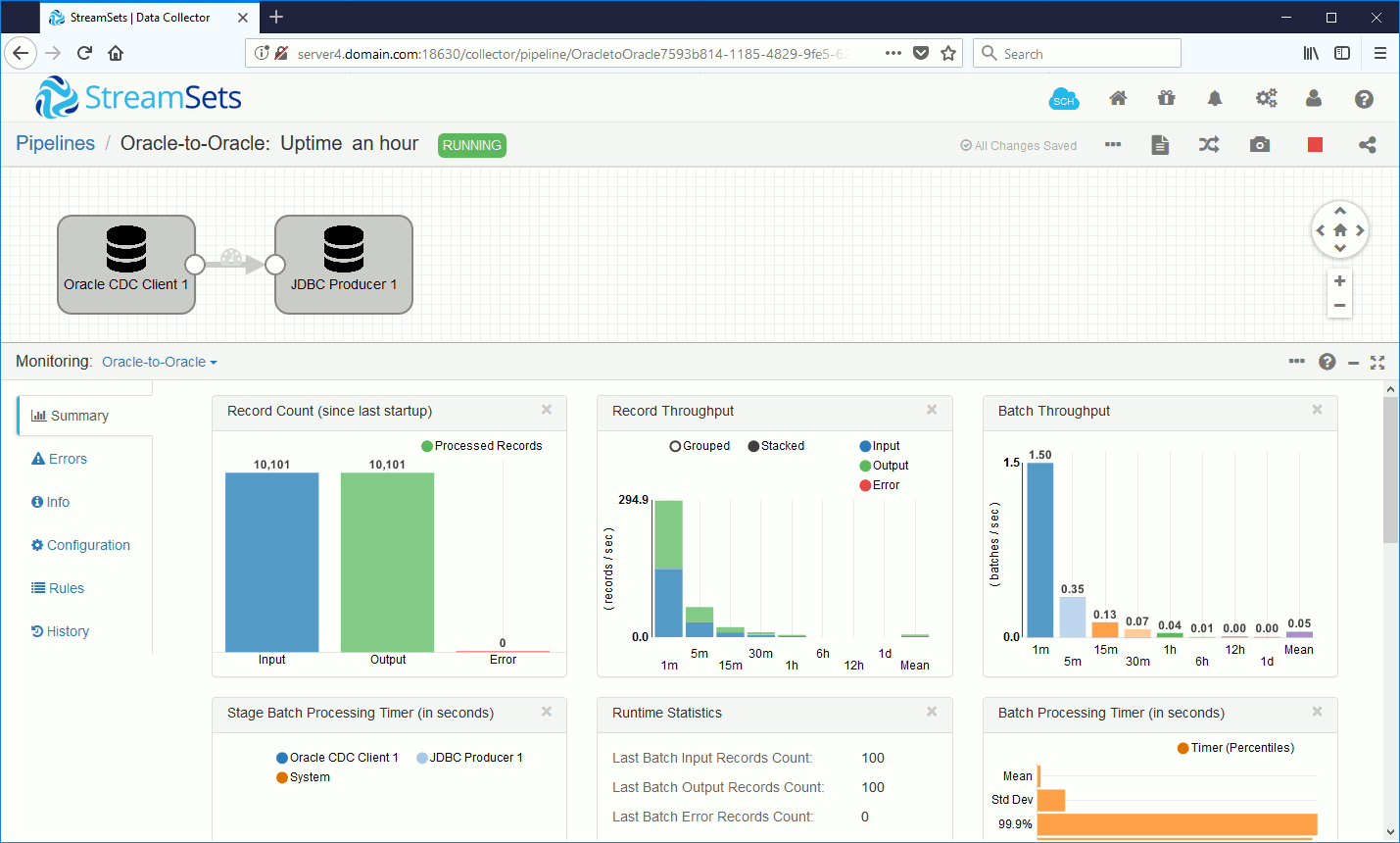

If you capture the monitoring screen while it’s running you should be able to see transfer rate figures:

JSON File Generation for Oracle Replication

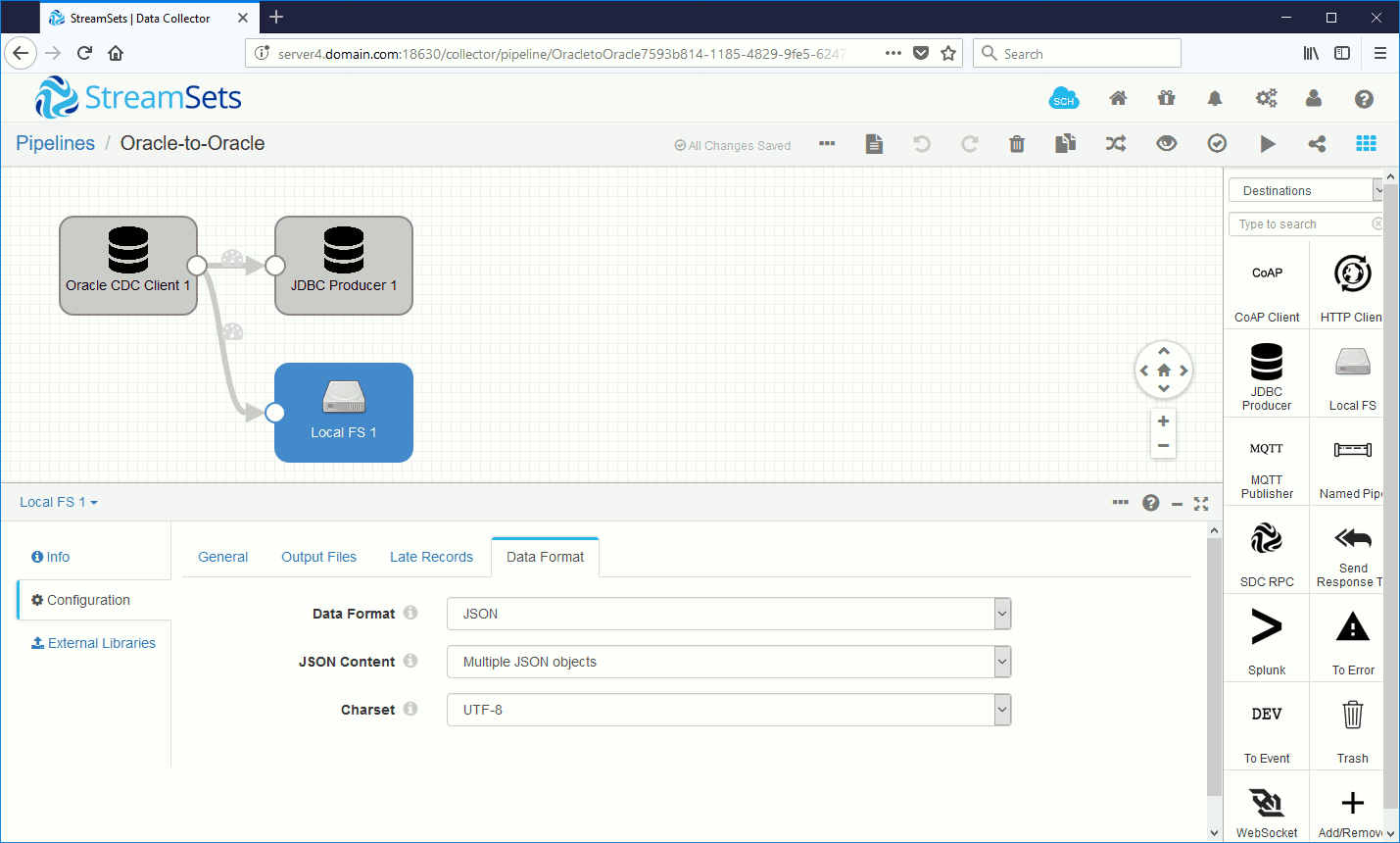

As well as JDBC insertion in a target Oracle database I also wanted to test generation of a JSON file. I started by adding a new destination called Local FS and then used the mouse in the GUI interface to draw a new line between the Oracle CDC Client origin and the Local FS destination. The only parameter I changed was generated Data Format to JSON:

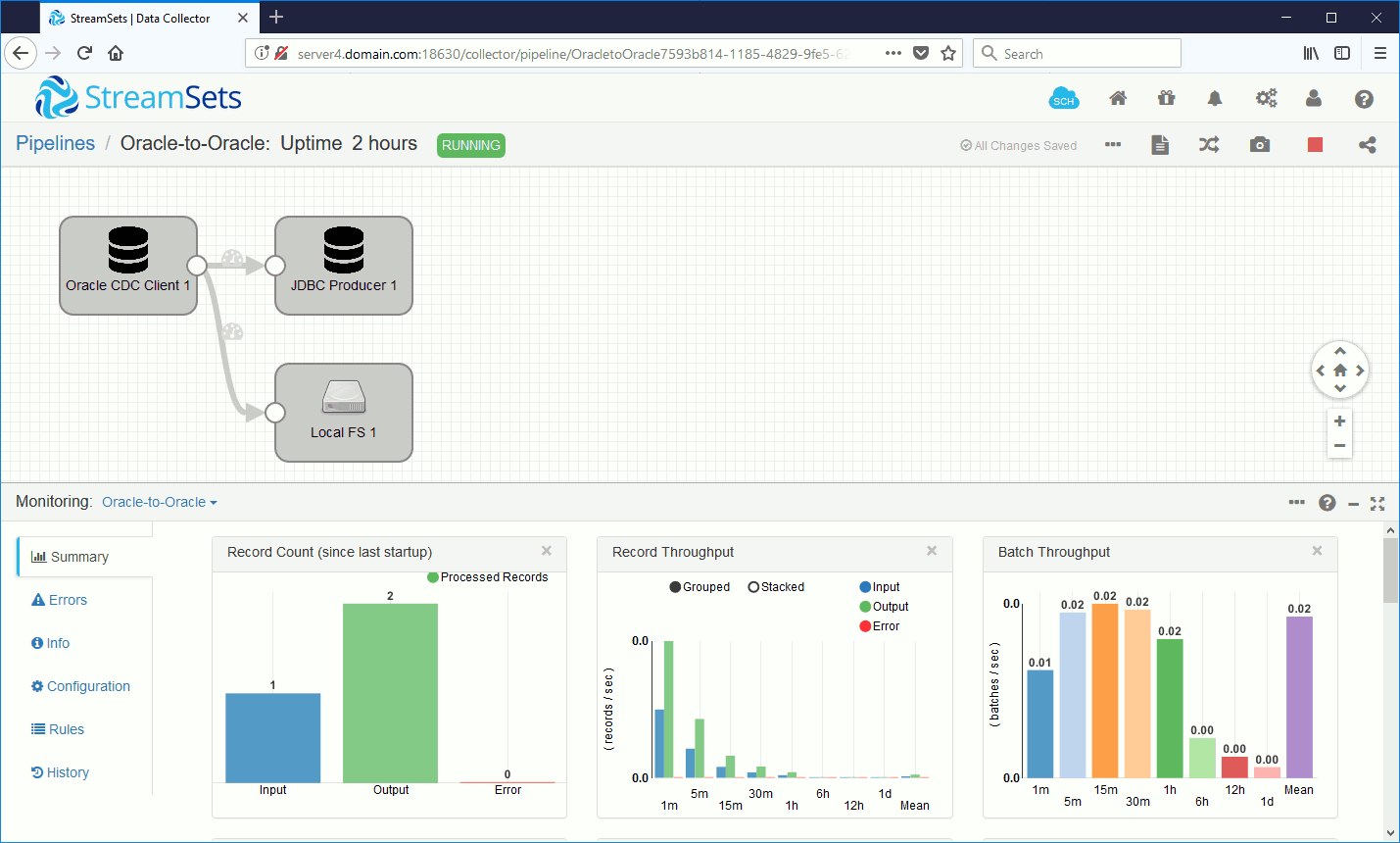

For each row in the source table (I restarted from an empty table) the record was duplicated in:

- The same target Oracle database as above.

- A text file, on the StreamSets Data Collector server, in JSON format.

We can see the output generated records is equal to two:

The output file (located on the server where StreamSets Data Collector is running i.e. server4.domain.com):

[root@server4 ~]# cat /tmp/out/2018-09-11-15/_tmp_sdc-2622d297-ac69-11e8-bf06-e301dabcb2ba_0

{"ID":1,"DESCR":"One"}

StreamSets Data Collector Oracle to MySQL Replication

I created a small MySQL 8 instance, used my personal account to connect to it and created a test01 database to map the schema name of the source Oracle pluggable database:

mysql> create user 'yjaquier'@'%' identified by 'secure_password'; Query OK, 0 rows affected (0.31 sec) mysql> grant all privileges on *.* to 'yjaquier'@'%' with grant option; Query OK, 0 rows affected (0.33 sec)

mysql> create database if not exists test01

-> CHARACTER SET = utf32

-> COLLATE = utf32_general_ci;

Query OK, 1 row affected (0.59 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| information_schema |

| mysql |

| performance_schema |

| sys |

| test01 |

+--------------------+

5 rows in set (0.00 sec)

I created the target table the same as one of the source Oracle tables:

mysql> create table test01.table01 (

-> id int not null,

-> descr varchar(50) null,

-> primary key (id));

Query OK, 0 rows affected (1.30 sec)

Remark:

I started from an empty table, but if that’s not the case for you then you should export and import pre-existing data.

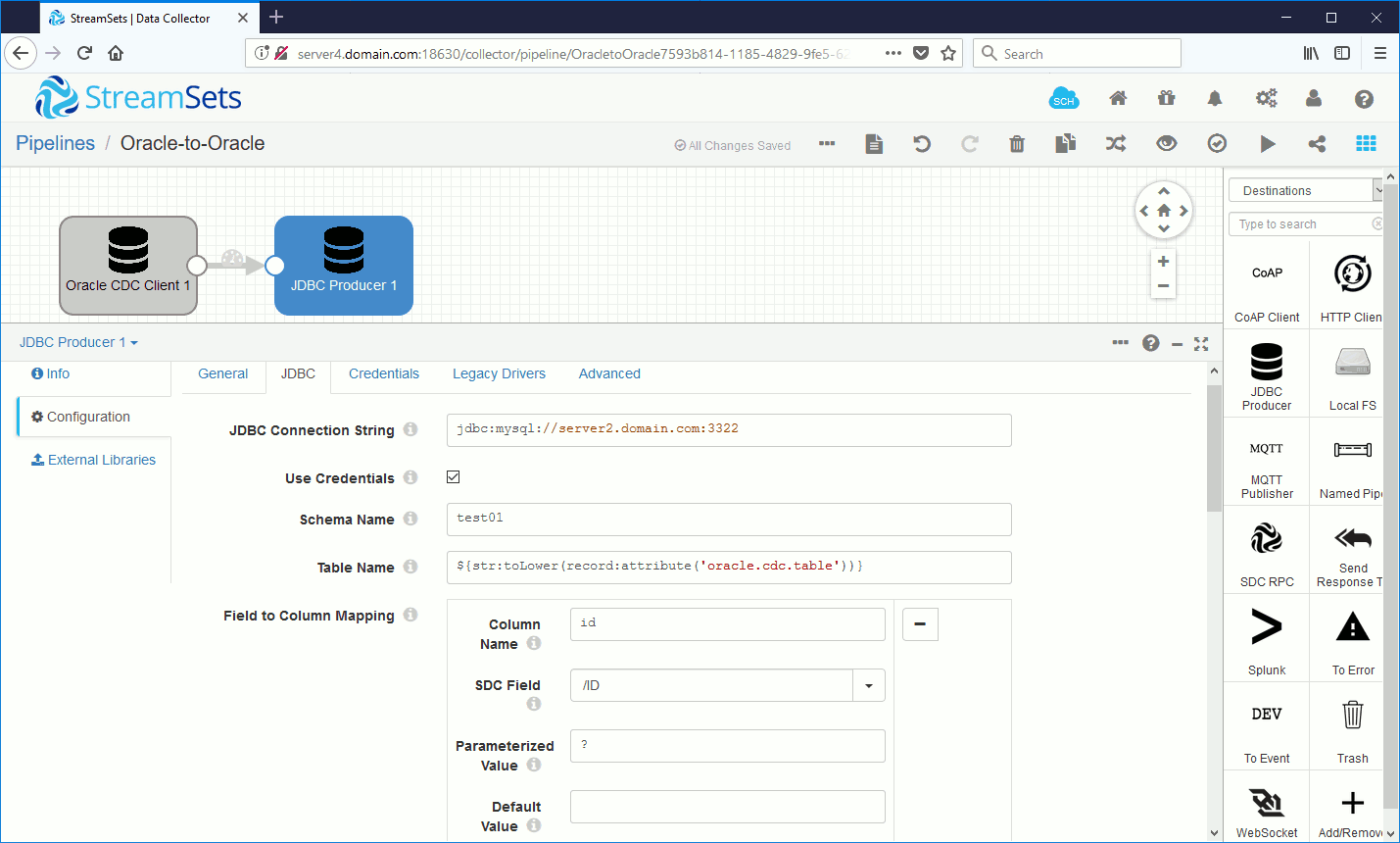

The JDBC connect string for MySQL is (3322 is my MySQL listening port):

jdbc:mysql://server2.domain.com:3322

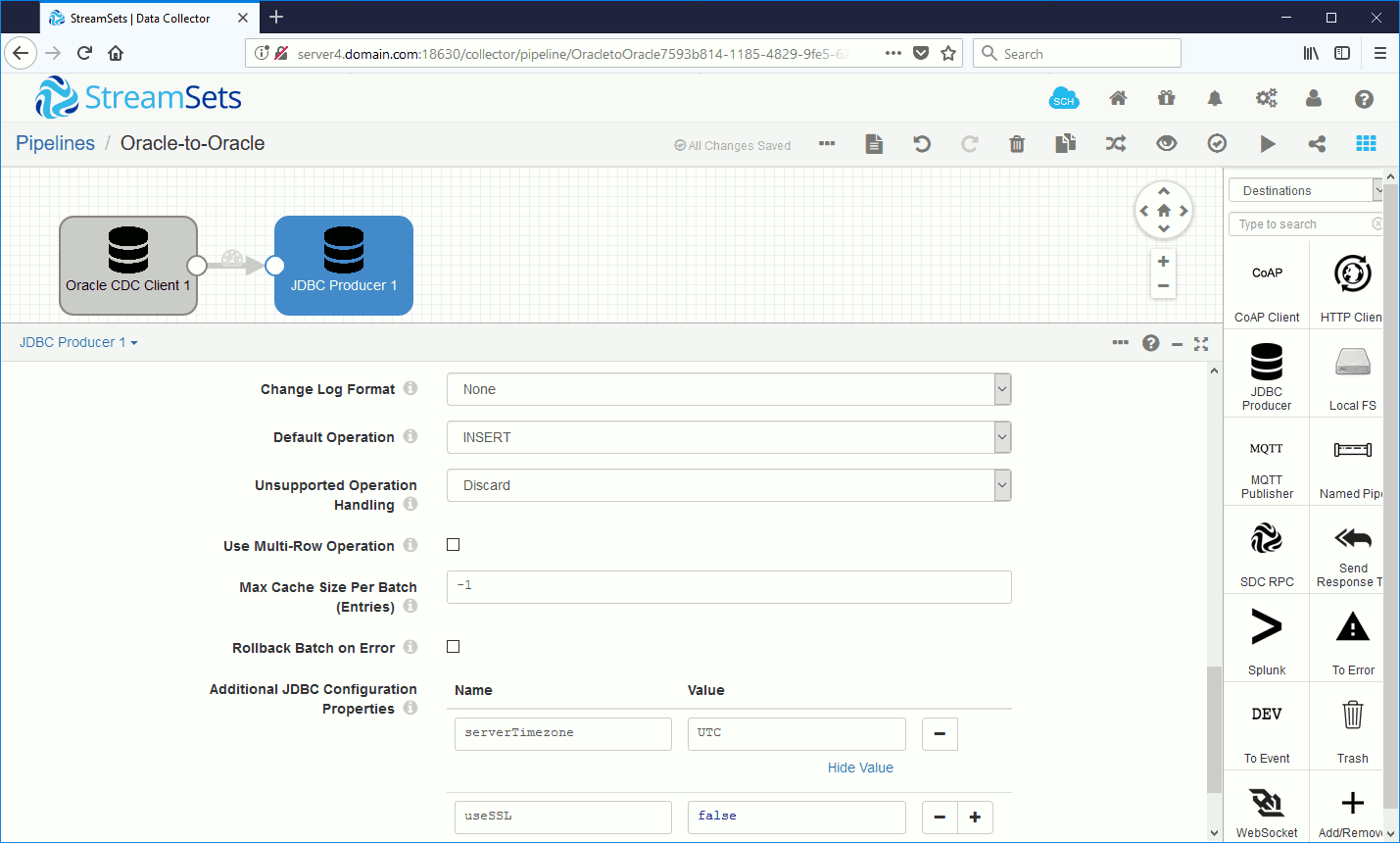

I also set two JDBC parameters (see Errors encountered section):

The Schema Name parameter for MySQL must be inserted in lower case, so test01 in my case, and Table Name must also be in lowercase so use below formula (str:toLower function) to convert uppercase Oracle table name to lower case:

${str:toLower(record:attribute('oracle.cdc.table'))}

We can see that rows have been inserted in the MySQL target table:

mysql> select * from test01.table01; +----+-------+ | id | descr | +----+-------+ | 1 | One | | 2 | Two | | 3 | Three | +----+-------+ 3 rows in set (0.00 sec)

Errors encountered during Oracle Replication

JDBC_52 – Error starting LogMiner

In sdc.log or in View Logs of the user interface you might see something like:

LOGMINER - CONTINUOUS_MINE - failed to add logfile /u01/app/oracle/oradata/ORCL/arch/1_4_983097959.dbf because of status 1284 2018-08-07T17:19:02.844615+02:00

It was a mistake from my side where I deleted the archived log file directly from the disk. I recovered the situation with:

RMAN> crosscheck archivelog all;

released channel: ORA_DISK_1

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=277 device type=DISK

validation failed for archived log

archived log file name=/u01/app/oracle/oradata/ORCL/arch/1_4_983097959.dbf RECID=1 STAMP=983191889

validation failed for archived log

.

.

RMAN> list archivelog all;

using target database control file instead of recovery catalog

List of Archived Log Copies for database with db_unique_name ORCL

=====================================================================

Key Thrd Seq S Low Time

------- ---- ------- - ---------

1 1 4 X 02-AUG-18

Name: /u01/app/oracle/oradata/ORCL/arch/1_4_983097959.dbf

.

.

RMAN> delete noprompt expired archivelog all;

allocated channel: ORA_DISK_1

channel ORA_DISK_1: SID=271 device type=DISK

List of Archived Log Copies for database with db_unique_name ORCL

=====================================================================

Key Thrd Seq S Low Time

------- ---- ------- - ---------

1 1 4 X 02-AUG-18

Name: /u01/app/oracle/oradata/ORCL/arch/1_4_983097959.dbf

I encountered another similar error:

JDBC_44 - Error while getting changes due to error: com.streamsets.pipeline.api.StageException: JDBC_52 - Error starting LogMiner

It was simply because no archived log file contained a dictionary log. This can happen when you purge archived log files. Generate one with:

SQL> execute dbms_logmnr_d.build(options=> dbms_logmnr_d.store_in_redo_logs); PL/SQL procedure successfully completed.

JDBC_44 – Error while getting changes due to error: java.sql.SQLRecoverableException: Closed Connection: getBigDecimal

When validating pipeline I got:

JDBC_44 - Error while getting changes due to error: java.sql.SQLRecoverableException: Closed Connection: getBigDecimal

In sdc.log:

2018-08-31 10:53:35,754 [user:*admin] [pipeline:Oracle-to-Oracle/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0] [runner:] [thread:preview-pool-1-thread-4] WARN OracleCDCSource - Error while stopping LogMiner java.sql.SQLRecoverableException: Closed Connection

From what I have seen it looks like my test server is too slow; if you get this you might need to increase the timeout parameters in the Advanced tab of Oracle CDC client.

ORA-01291: missing logfile

This one kept me busy for a while:

106856c0-Oracle-to-Oracle] INFO JdbcUtil - Driver class oracle.jdbc.OracleDriver (version 18.3)

2018-09-03 17:50:48,069 [user:*admin] [pipeline:Oracle-to-Oracle/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0] [runner:0] [thread:ProductionPipelineRunnable-OracletoOracle7593b814-1185-4829-9fe5-6247

106856c0-Oracle-to-Oracle] INFO HikariDataSource - HikariPool-1 - is starting.

2018-09-03 17:50:49,354 [user:*admin] [pipeline:Oracle-to-Oracle/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0] [runner:] [thread:ProductionPipelineRunnable-OracletoOracle7593b814-1185-4829-9fe5-62471

06856c0-Oracle-to-Oracle] INFO OracleCDCSource - Trying to start LogMiner with start date: 31-08-2018 10:40:33 and end date: 31-08-2018 12:40:33

2018-09-03 17:50:49,908 [user:*admin] [pipeline:Oracle-to-Oracle/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0] [runner:] [thread:ProductionPipelineRunnable-OracletoOracle7593b814-1185-4829-9fe5-62471

06856c0-Oracle-to-Oracle] ERROR OracleCDCSource - SQLException while trying to setup record generator thread

java.sql.SQLException: ORA-01291: missing logfile

ORA-06512: at "SYS.DBMS_LOGMNR", line 58

ORA-06512: at line 1

at oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:494)

at oracle.jdbc.driver.T4CTTIoer11.processError(T4CTTIoer11.java:446)

at oracle.jdbc.driver.T4C8Oall.processError(T4C8Oall.java:1052)

at oracle.jdbc.driver.T4CTTIfun.receive(T4CTTIfun.java:537)

at oracle.jdbc.driver.T4CTTIfun.doRPC(T4CTTIfun.java:255)

at oracle.jdbc.driver.T4C8Oall.doOALL(T4C8Oall.java:610)

at oracle.jdbc.driver.T4CCallableStatement.doOall8(T4CCallableStatement.java:249)

at oracle.jdbc.driver.T4CCallableStatement.doOall8(T4CCallableStatement.java:82)

at oracle.jdbc.driver.T4CCallableStatement.executeForRows(T4CCallableStatement.java:924)

at oracle.jdbc.driver.OracleStatement.doExecuteWithTimeout(OracleStatement.java:1136)

at oracle.jdbc.driver.OraclePreparedStatement.executeInternal(OraclePreparedStatement.

One reason I have identified is because StreamSets starts LogMiner two hours in the past even when you choose from latest changes:

2018-08-31 12:53:00,846 [user:*admin] [pipeline:Oracle-to-Oracle/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0] [runner:] [thread:ProductionPipelineRunnable-OracletoOracle7593b814-1185-4829-9fe5-62471 06856c0-Oracle-to-Oracle] INFO OracleCDCSource - Trying to start LogMiner with start date: 31-08-2018 10:40:33 and end date: 31-08-2018 12:40:33

I suspect it occurs because Oracle CDC Client LogMiner Session Window parameter is set to 2 hours you must have the archived log files from last 2 hours available when starting the pipeline. So never ever purge archivelog file with:

RMAN> delete noprompt archivelog all;

But use:

RMAN> delete noprompt archivelog all completed before 'sysdate-3/24';

Even when applying this I also noticed StreamSets was always starting LogMiner from the time where the pipeline has failed or when you have stopped it. This is saved in the offset.json file:

[root@server4 0]# pwd

/streamsets/streamsets-datacollector-3.4.1/data/runInfo/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0/0

[root@server4 0]# ll

total 472

-rw-r--r-- 1 streamsets users 100 Sep 3 18:20 offset.json

-rw-r--r-- 1 streamsets users 257637 Sep 3 18:20 pipelineStateHistory.json

-rw-r--r-- 1 streamsets users 20316 Sep 3 18:20 pipelineState.json

[root@server4 0]# cat offset.json

{

"version" : 2,

"offsets" : {

"$com.streamsets.datacollector.pollsource.offset$" : "v3::1535715633::3661001::1"

}

}

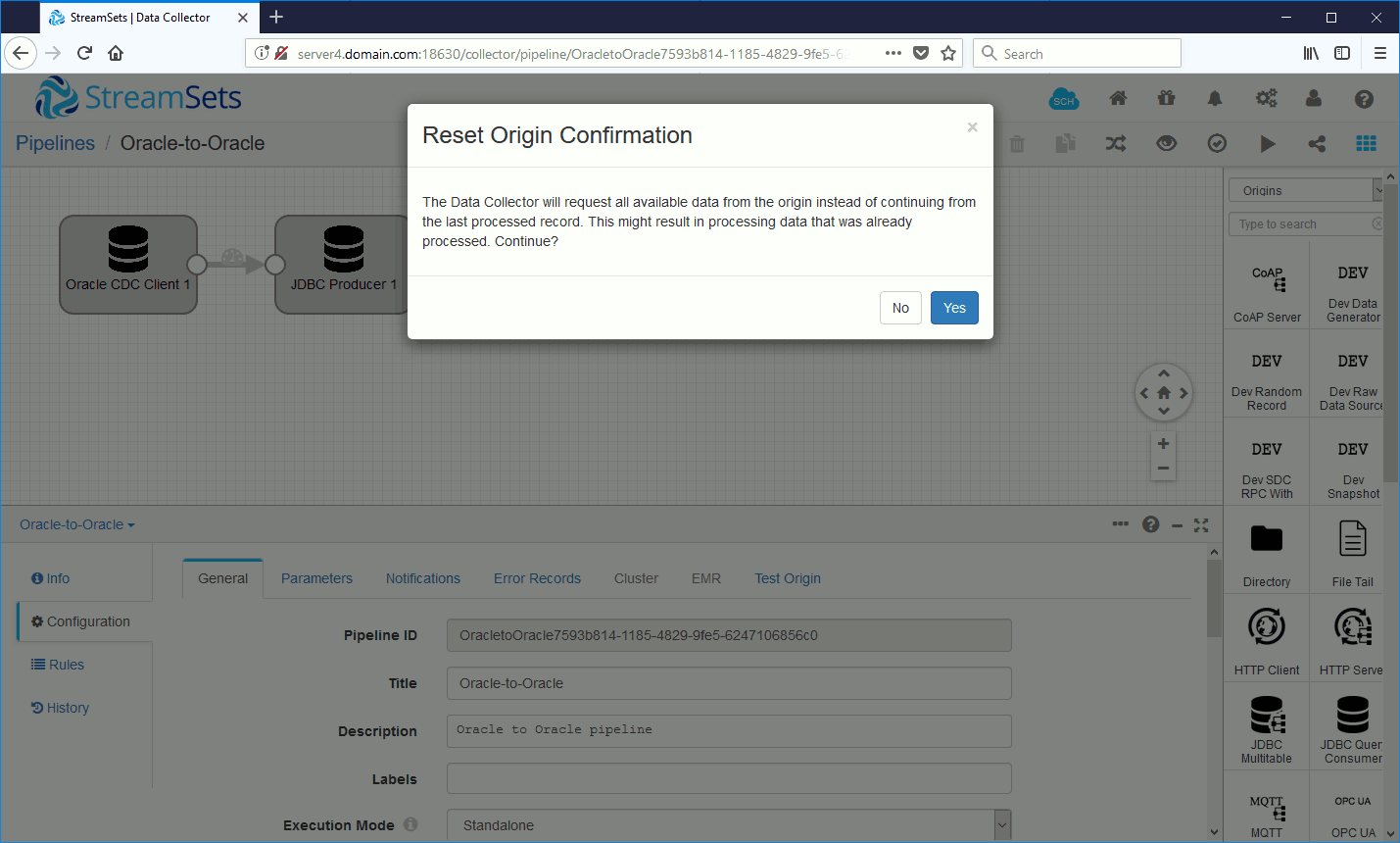

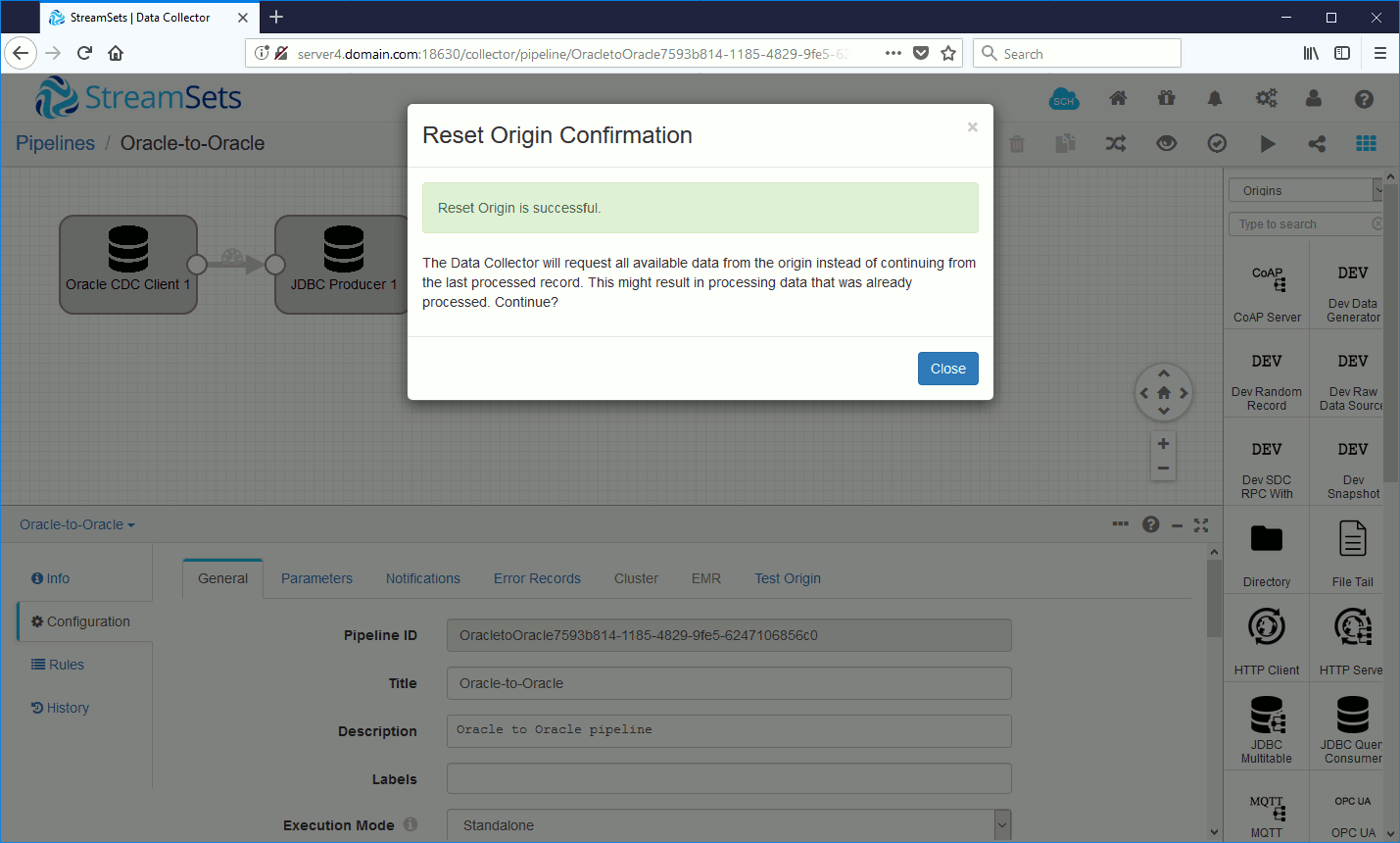

If this is expected and you know what you are doing (first setup, testing, …) you can reset the pipeline with the graphical interface:

Confirm that you know what you are doing:

This results in an empty offset.json file:

[root@server4 0]# cat offset.json

{

"version" : 2,

"offsets" : { }

}

JDBC_16 – Table ” does not exist or PDB is incorrect. Make sure the correct PDB was specified

In JDBC Producer I replaced the Table Name field:

${record:attribute('tablename')}

with

${record:attribute('oracle.cdc.table')}

Then it failed with:

JDBC_16 - Table 'TABLE01' does not exist or PDB is incorrect. Make sure the correct PDB was specified

In JDBC Producer Errors section I noticed:

oracle.cdc.user: SYS

Because I inserted the record on master database with SYS account, I tried with TEST01 account but it failed for exact same error…

I finally found the solution when setting Schema Name field to TEST01, in uppercase, because as suggested in Oracle CDC Client documentation – Oracle uses all caps for schema, table, and column names by default.

JDBC_00 – Cannot connect to specified database: com.zaxxer.hikari.pool.PoolInitializationException: Exception during pool initialization: The server time zone value ‘CEST’ is unrecognized or represents more than one time zone.

The full error message also contains:

You must configure either the server or JDBC driver (via the serverTimezone configuration property) to use a more specific time zone value if you want to utilize time zone support.

I had to add an additional JDBC property to set the server time zone:

serverTimezone = UTC

Establishing SSL connection without server’s identity verification is not recommended

Thu Sep 13 09:53:39 CEST 2018 WARN: Establishing SSL connection without server's identity verification is not recommended. According to MySQL 5.5.45+, 5.6.26+ and 5.7.6+ requirements SSL connection must be established by default if explicit option isn't set. For compliance with existing applications not using SSL the verifyServerCertificate property is set to 'false'. You need either to explicitly disable SSL by setting useSSL=false, or set useSSL=true and provide truststore for server certificate verification.

To solve this set in JDBC driver parameters:

useSSL = false

JdbcGenericRecordWriter – No parameters found for record with ID

Complete error message is:

2018-09-12 15:26:41,696 [user:*admin] [pipeline:Oracle-to-Oracle/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0] [runner:0] [thread:ProductionPipelineRunnable-OracletoOracle7593b814-1185-4829-9fe5-6247 106856c0-Oracle-to-Oracle] WARN JdbcGenericRecordWriter - No parameters found for record with ID 0x0000bc.0001bec8.0018 ::0; skipping 2018-09-12 15:41:31,650 [user:*admin] [pipeline:Oracle-to-Oracle/OracletoOracle7593b814-1185-4829-9fe5-6247106856c0] [runner:0] [thread:ProductionPipelineRunnable-OracletoOracle7593b814-1185-4829-9fe5-6247 106856c0-Oracle-to-Oracle] WARN JdbcGenericRecordWriter - No parameters found for record with ID 0x0000bd.000057e7.0010 ::1; skipping

For Oracle to MySQL replication I had to do explicit column mapping like this: