Download and Install StreamSets Transformer

How to download and install Transformer to build Apache Spark and ETL data pipelines

Quick Start Guide and ETL Software Installation Video

StreamSets Transformer download is only available to existing users. If you are new to StreamSets, we encourage you to try our cloud-native platform for free.

- Use your StreamSets Account and download the tarball.

- Download and install Apache Spark 2.4.7

- Extract the Apache Spark tarball by entering this command in the terminal window: tar xvzf spark-2.4.7-bin-without-hadoop.tgz

- Extract the Transformer tarball by entering this command in the terminal window: tar xvzf streamsets-transformer-all-<VERSION>.tgz

- Change the folder to the root of the Transformer installation. For example: cd streamsets-transformer-<VERSION>

- Change directory to libexec folder underneath the root of the Transformer installation and edit the transformer-env.sh file. Add the below line to the transformer-env.sh file to set the environment variable for SPARK-HOME: export SPARK_HOME=<SPARK_PATH>

- Run this command in the terminal window: bin/streamsets transformer

- In your browser, enter the URL shown in the terminal window. For example, http://10.0.0.100:19360

- Log in to start using StreamSets Transformer.

Note: Replace <VERSION> with current version and <SPARK_PATH> with the full path to Apache Spark.

Getting Started with ETL Videos

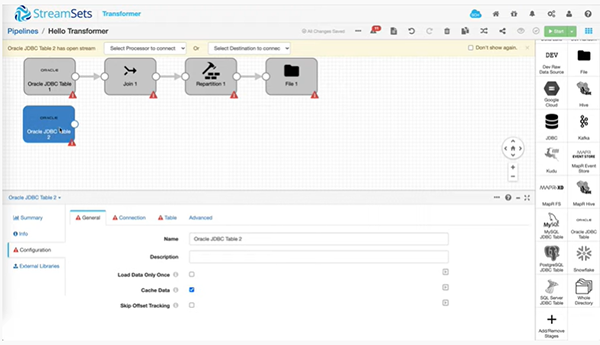

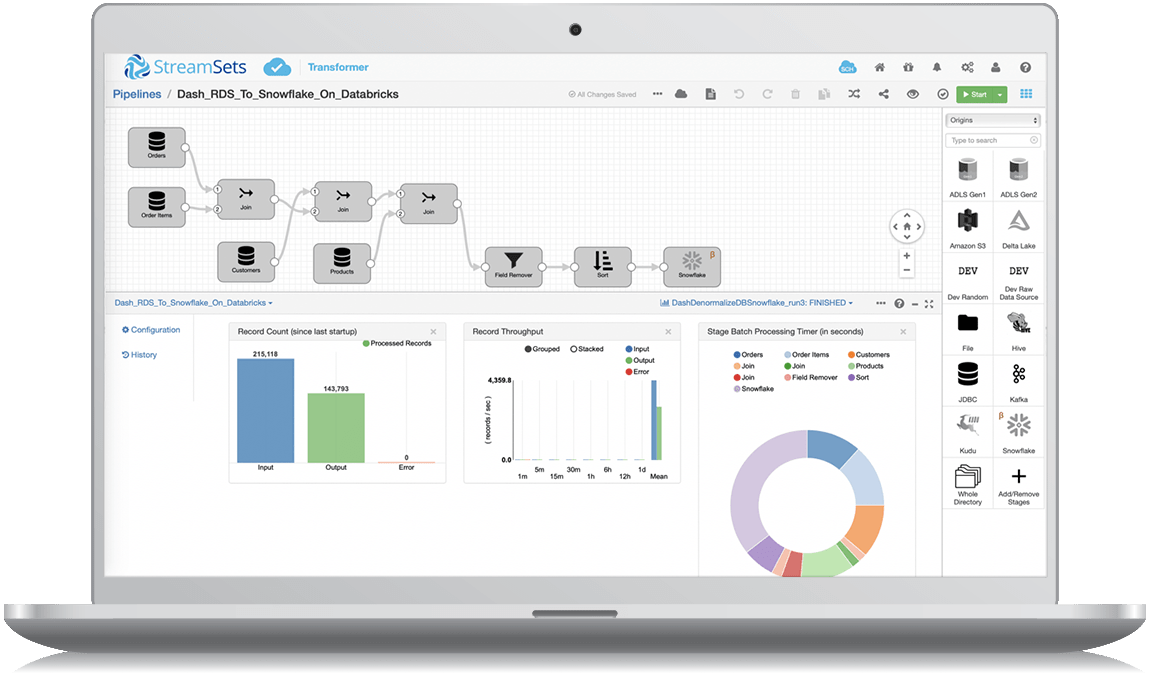

Learn how to build your first data pipeline using StreamSets Transformer in a few easy steps.

Learn how to build, preview, and run your data pipeline in a few easy steps on a Spark cluster or where ever you have Spark installed.

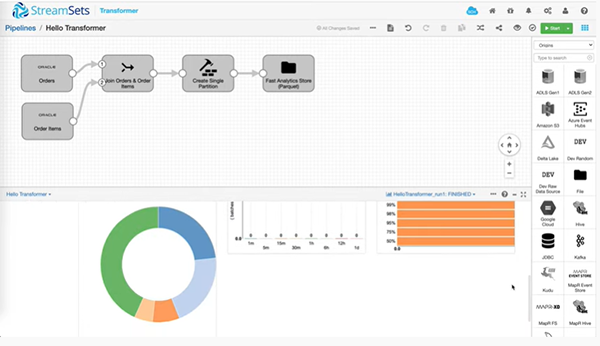

Build a data pipeline in StreamSets Transformer for clickstream analysis on Amazon EMR, Amazon Redshift and Elasticsearch.

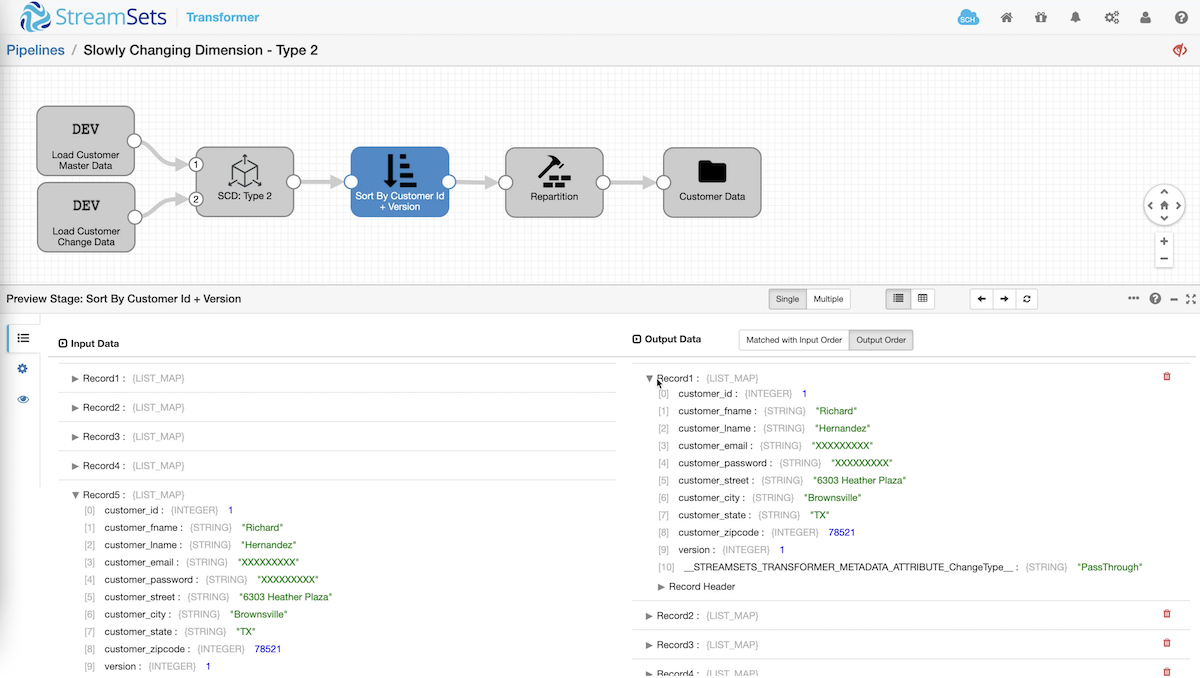

Pipeline preview helps ensure data integrity and data quality, and makes debugging easier.

Build, run, monitor, and manage data pipelines for any design pattern with one log in.

What Is a Transformer?

StreamSets Transformer Engine is an execution engine that runs data processing pipelines on Apache Spark. Spark ETL data pipelines can perform transformations that require heavy processing on the entire data set in batch or in streaming mode. You can install a Transformer Engine on any environment running Apache Spark.

Whether your data sources are on-prem, cloud-to-cloud or on-prem-to-cloud, use the pre-built connectors and native integrations to configure your Spark ETL pipeline without hand coding.