Instant notifications, product recommendations and updates, and fraud detection are practical use-cases of stream processing. With stream processing, data streaming and analytics occur in real-time, which helps drive fast decision-making. However, building an effective streaming architecture to handle data needs can be challenging because of the multiple data sources, destinations, and formats involved with event streaming.

- The Basics of Apache Kafka

- Kafka-Python Processing

- Setting Up Kafka-Python Stream Processing

- Summary

- Where StreamSets, Kafka, and Python Come Together

The Basics of Apache Kafka

Apache Kafka is an open-source distributed publish/subscribe (pub/sub) messaging and event streaming platform that helps systems communicate effectively, making data exchange more accessible. Kafka can be deployed on virtual machines (VMs), bare-metal hardware, or in the cloud, and consists of clients and servers that communicate over TCP. With Apache Kafka, producers publish events to topics, and consumers subscribe to those topics to read them. Kafka decouples producers from consumers: producers operate independently of consumers and only need to worry about publishing events to topics, not how those events reach the consumers.

Currently, over 39,000 companies use Kafka for their event streaming needs, a 7.24% increase in adoption from 2021. These companies include eBay, Netflix, and Target, among others. Apache Kafka is fast, can process millions of messages daily, and is highly scalable with high throughput and low latency.

However, because Kafka was built with Java and Scala, Python engineers found it challenging to process data and send it to Kafka. This challenge led to the development of the kafka-python client, which lets engineers produce and consume Kafka messages from Python. This article introduces Kafka and its benefits, then walks through setting up a Kafka cluster and stream processing with kafka-python.

Essential Kafka Concepts

Some essential concepts you will need to know for stream processing with Kafka and Python include:

Topics

Topics act as a store for events. An event is a record of something that happened, like a product update or launch. Topics are like folders, and events are like the files inside them. Unlike traditional messaging systems that delete messages after consumption, Kafka retains events in a topic for as long as its per-topic configuration specifies. Kafka topics are divided into partitions, and each partition is replicated across multiple brokers, so that many consumers can read from a topic simultaneously without affecting throughput.
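To make the storage model concrete, here is a minimal in-memory sketch of a topic as a set of append-only partitions. The `ToyTopic` class and its methods are hypothetical names used only for illustration; they are not part of Kafka or kafka-python, and the key-hashing scheme is an assumption (real clients ship their own partitioners).

```python
import zlib


class ToyTopic:
    """Toy model of a topic: a list of append-only partitions."""

    def __init__(self, name, num_partitions=3):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]

    def append(self, key, value):
        """Append an event and return its (partition, offset)."""
        # Assumption: choose the partition from a stable hash of the key,
        # so events with the same key always land in the same partition.
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append(value)
        offset = len(self.partitions[partition]) - 1
        return partition, offset

    def read(self, partition, offset):
        """Read events from an offset onward; reading never deletes them."""
        return self.partitions[partition][offset:]


topic = ToyTopic("sample-topic")
p1, o1 = topic.append("user-1", "product updated")
p2, o2 = topic.append("user-1", "product launched")

# Reading twice returns the same events, because consumption does not remove them.
first_read = topic.read(p1, 0)
second_read = topic.read(p1, 0)
```

The point of the sketch is that offsets only ever grow and that a read leaves the log untouched, which is what lets several consumers work through the same topic.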

Producers

Producers are applications that publish messages to Kafka. Kafka decouples producers from consumers, which helps with scalability. With Kafka, producers need only post messages to topics, with no concern for how Kafka handles or delivers them.

Consumers

Consumers are applications that subscribe to topics and read the events that producers publish to them.
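As a rough illustration of how consumers read independently of each other, the sketch below gives each consumer its own position in a shared log. `ToyConsumer` is a hypothetical class for illustration only; in Kafka itself, positions (offsets) are tracked per consumer group rather than by a Python object like this.

```python
class ToyConsumer:
    """Toy consumer that remembers how far it has read in a shared log."""

    def __init__(self, log):
        self.log = log      # shared list of events; never modified here
        self.position = 0   # next offset this consumer will read

    def poll(self, max_records=10):
        """Return up to max_records unread events and advance the position."""
        records = self.log[self.position:self.position + max_records]
        self.position += len(records)
        return records


log = ["event-0", "event-1", "event-2"]   # written by some producer
fast = ToyConsumer(log)
slow = ToyConsumer(log)

fast_batch = fast.poll()                # reads everything available
slow_batch = slow.poll(max_records=1)   # reads only the first event
log.append("event-3")                   # the producer keeps publishing
fast_next = fast.poll()                 # picks up only the new event
```

Neither consumer affects what the other sees, and the producer never needs to know that either of them exists.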

Clusters

A cluster refers to a collection of brokers. Clusters organize Kafka topics, brokers, and partitions to ensure equal workload distribution and continuous availability.

Brokers

Brokers exist within a cluster. A cluster contains one or more brokers working together. A single broker represents a Kafka server.

Zookeeper

ZooKeeper acts as the registry that stores information about a Kafka cluster. It houses information like user info, naming configuration, and access control lists for topics.

Partition

Each topic is split into one or more partitions, which are stored on brokers. Each partition is replicated on other brokers in the cluster. For every partition, one replica acts as the leader and coordinates writes to it. If the leader fails, another replica takes over. This principle of partitioning and replicating across brokers helps make Kafka fault-tolerant.
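The leader and replica behavior can be sketched with a small simulation. This is a simplified model under stated assumptions: `ToyPartition` is a hypothetical class, replication here is immediate and synchronous, and the new leader is simply the first surviving replica, whereas Kafka's real election and replication protocol is more involved.

```python
class ToyPartition:
    """Toy partition with one leader replica and follower replicas."""

    def __init__(self, broker_ids):
        # One copy of the log per broker that hosts a replica.
        self.replicas = {broker_id: [] for broker_id in broker_ids}
        self.leader = broker_ids[0]

    def write(self, event):
        """Writes go to the leader, then get copied to the followers."""
        self.replicas[self.leader].append(event)
        for broker_id, log in self.replicas.items():
            if broker_id != self.leader:
                log.append(event)

    def fail_broker(self, broker_id):
        """Drop a broker; if it was the leader, promote a surviving replica."""
        del self.replicas[broker_id]
        if broker_id == self.leader:
            if not self.replicas:
                raise RuntimeError("no replicas left for this partition")
            self.leader = next(iter(self.replicas))


partition = ToyPartition(broker_ids=[1, 2, 3])
partition.write("order created")
partition.fail_broker(1)            # the leader goes down
partition.write("order shipped")    # writes continue on the new leader
```

After broker 1 fails, broker 2 becomes the leader and both surviving replicas still hold the full log.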

Kafka provides three primary services to its users:

- Publish messages to subscribers

- Store messages in order of arrival.

- Perform analysis of real-time data streams.

Benefits of Apache Kafka

Fault Tolerance and High Availability

In Kafka, the replication factor of a topic specifies how many copies of each partition are kept. If a broker fails, replicas on other brokers pick up the load and ensure messages still get delivered. This feature helps ensure fault tolerance and high availability.
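To see what a replication factor means in practice, the following sketch spreads the replicas of each partition over distinct brokers and then simulates losing one broker. The `assign_replicas` function and its round-robin placement are assumptions made for illustration; Kafka's own assignment logic differs in detail.

```python
def assign_replicas(num_partitions, replication_factor, broker_ids):
    """Place each partition's replicas on distinct brokers, round-robin."""
    if replication_factor > len(broker_ids):
        raise ValueError("replication factor cannot exceed the number of brokers")
    assignment = {}
    for partition in range(num_partitions):
        assignment[partition] = [
            broker_ids[(partition + i) % len(broker_ids)]
            for i in range(replication_factor)
        ]
    return assignment


assignment = assign_replicas(num_partitions=3, replication_factor=2, broker_ids=[1, 2, 3])
print(assignment)

# Simulate losing broker 2: every partition still has at least one copy.
survivors = {p: [b for b in brokers if b != 2] for p, brokers in assignment.items()}
print(survivors)
```

With a replication factor of 2, each partition in this toy model survives the loss of any single broker.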

High Throughput and Low-Latency

Kafka delivers high throughput and latency as low as 2 ms while handling high-volume, high-velocity data streams.

Scalability and Reliability

The decoupled and independent nature of producers and consumers makes it easier to scale Kafka seamlessly. Additionally, nodes within a cluster can be scaled up/down to accommodate growing demands.

Real-Time Data Streaming and Processing

Kafka can quickly identify data changes by using change data capture (CDC) approaches like triggers and logs. This reduces compute load compared with traditional batch systems, which reload the data each time to identify changes. Kafka also performs aggregations, joins, and other data processing methods on event streams.
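As a small illustration of what an aggregation over an event stream looks like, the sketch below keeps a running count per key and emits an updated result as each event arrives, instead of recomputing over the whole dataset. It is plain Python, not Kafka's own stream processing API, and the event fields (`product`, `action`) are made up for the example.

```python
from collections import Counter


def running_counts(events, key):
    """Yield the updated count for each event's key as events arrive."""
    counts = Counter()
    for event in events:
        counts[event[key]] += 1
        yield event[key], counts[event[key]]


stream = [
    {"product": "widget", "action": "view"},
    {"product": "gadget", "action": "view"},
    {"product": "widget", "action": "purchase"},
]
updates = list(running_counts(stream, key="product"))
print(updates)
```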

Ease of Integration Through Multiple Connections

Kafka uses connectors to integrate with multiple event sources and sinks like Elasticsearch, AWS S3, and Postgres.

Setting Up a Kafka Instance

A Kafka instance represents a single broker in a cluster. Let’s set up Kafka on our local machine and create a Kafka instance.

1. Download Kafka and extract the folder.

Note: You must have Java 8+ installed to use Kafka.

2. Navigate to the Kafka folder.

cd kafka_2.13-3.2.0

3. Start the ZooKeeper service.

bin/zookeeper-server-start.sh config/zookeeper.properties

This is the result:

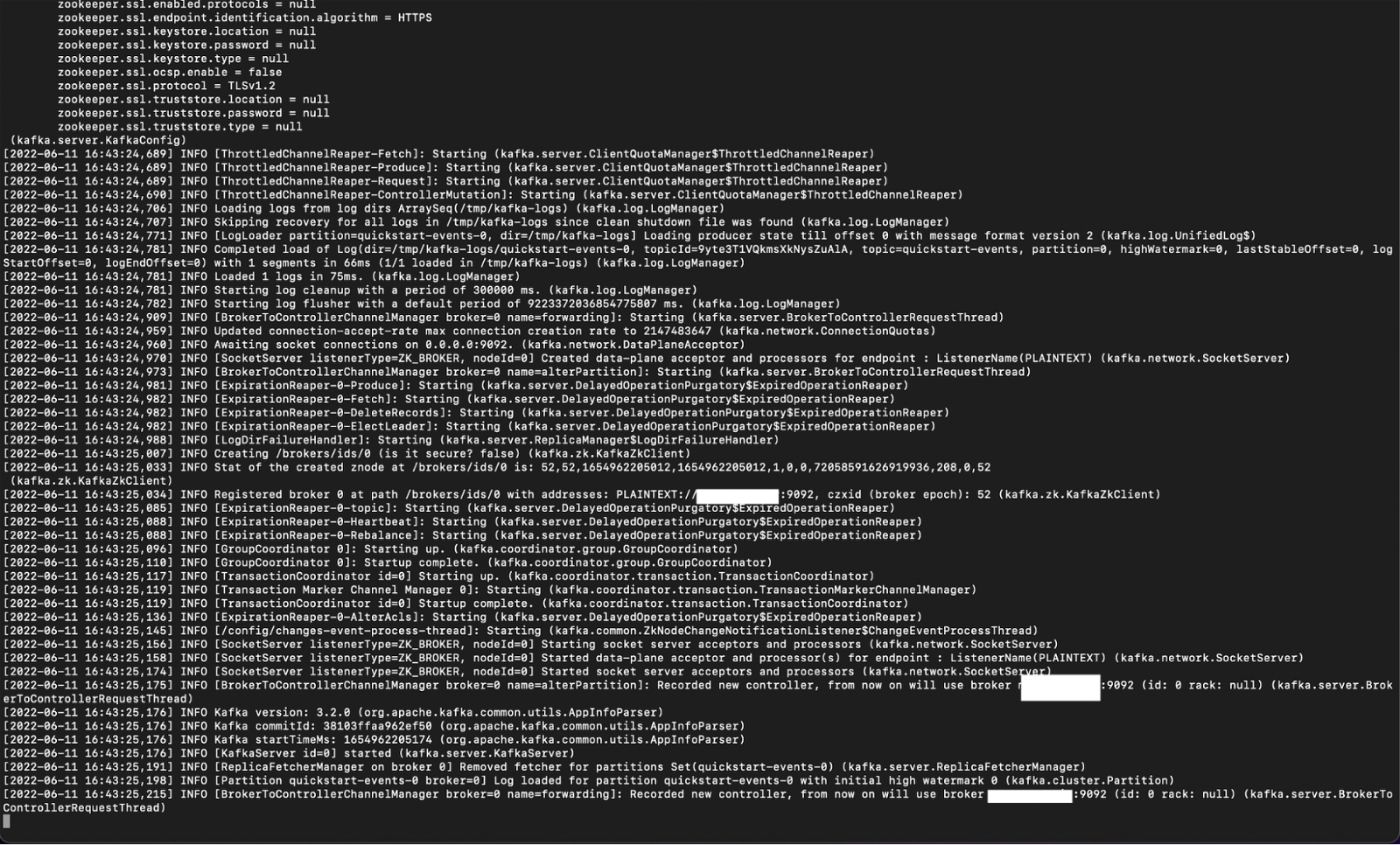

4. Open another terminal session and start the broker service.

bin/kafka-server-start.sh config/server.properties

This starts up a single broker server. Our broker uses localhost:9092 for connections.
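Before moving on, it can help to confirm that something is actually listening on the broker port. The following is a minimal sketch using only the Python standard library; `broker_reachable` is a hypothetical helper name, and the check only proves that a TCP connection can be opened, not that the listener is a healthy Kafka broker.

```python
import socket


def broker_reachable(host="localhost", port=9092, timeout=2.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if broker_reachable():
    print("Something is listening on localhost:9092")
else:
    print("Nothing is listening on localhost:9092; is the broker running?")
```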

Create a Kafka Topic

To publish messages to consumers, we need to create a topic to store our messages.

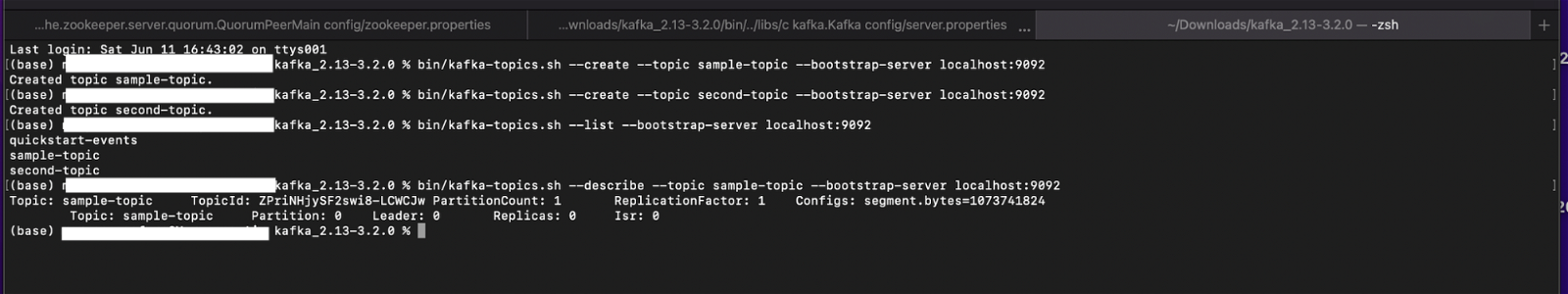

- Start another terminal session

- Create two topics called sample-topic and second-topic

bin/kafka-topics.sh --create --topic sample-topic --bootstrap-server localhost:9092
bin/kafka-topics.sh --create --topic second-topic --bootstrap-server localhost:9092

A message confirms that each topic has been successfully created

Created topic sample-topic.
Created topic second-topic.

- Run the following command to get a list of all the topics in your cluster

bin/kafka-topics.sh --list --bootstrap-server localhost:9092

- Use the --describe argument to get more details on a topic

bin/kafka-topics.sh --describe --topic sample-topic --bootstrap-server localhost:9092

Kafka-Python Processing

For most data scientists and engineers, Python is a go-to language for data processing and machine learning because it is:

- Easy to learn and use: Python uses a simplified syntax, making it easier to learn and use.

- Flexible: Python uses dynamic typing, making it easy to build and use in applications.

- Supported by a large open-source community and excellent documentation: The Python community ranges from beginner to expert level users. Python also has a robust documentation guide to help its developers maximize the language.

As mentioned earlier, since Kafka was built with Java and Scala, it can pose a challenge for data scientists who want to process data and stream it to brokers with Python. The kafka-python client was built to solve this problem: it works much like the Java client, but with additional Pythonic interfaces, like consumer iterators.

Setting Up Kafka-Python Stream Processing

Prerequisites

- Python 3. Follow this guide to install Python 3.

- Kafka – already installed from the previous step.

Installing Kafka-Python

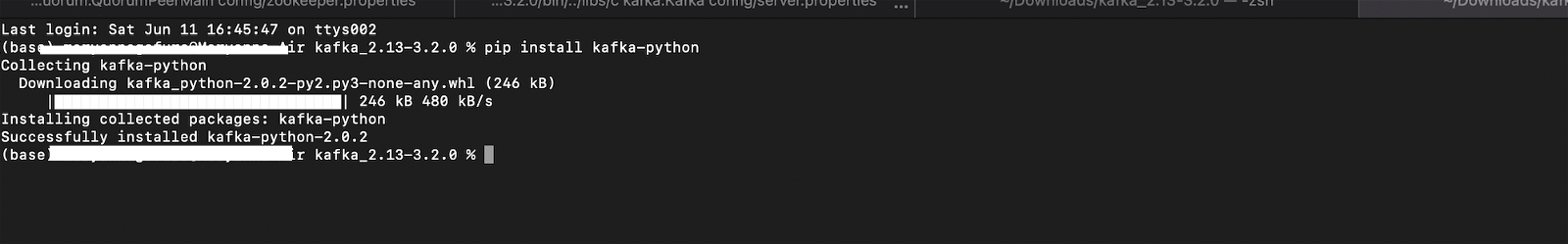

Let’s set up Kafka for streaming in Python.

- Open another terminal session.

- Run the following command to install kafka-python

pip install kafka-python

This shows a successful installation.

Next, we read a CSV file, publish its rows to a topic with a producer, and read them back with a consumer.

Writing to a Producer

KafkaProducer works much like the Java client and sends messages to the cluster.

For our sample, we will use Python to convert each line of a CSV file (data.csv) to JSON and send it to a topic (sample-topic).

Python code

    import json
    from csv import DictReader

    from kafka import KafkaProducer

    # Set up the Kafka producer
    bootstrap_servers = ['localhost:9092']
    topicname = 'sample-topic'
    producer = KafkaProducer(bootstrap_servers=bootstrap_servers)

    # Read each CSV row as a dictionary and send it to the topic as JSON
    with open('data.csv', 'r', newline='') as new_obj:
        csv_dict_reader = DictReader(new_obj)
        for row in csv_dict_reader:
            ack = producer.send(topicname, json.dumps(row).encode('utf-8'))
            # Block until the broker acknowledges the message
            metadata = ack.get()
            print(metadata.topic, metadata.partition)

    # Release the producer's network resources
    producer.close()

This is our result
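The CSV-to-JSON step in the producer can also be tried without a running broker. The sketch below separates serialization from sending and substitutes a hypothetical `RecordingProducer` that only records what would be sent. The helper names (`serialize_row`, `publish_rows`) and the sample CSV content are assumptions for illustration, not part of kafka-python.

```python
import csv
import io
import json


def serialize_row(row):
    """Encode one CSV row (a dict) as UTF-8 JSON bytes."""
    return json.dumps(row).encode("utf-8")


def publish_rows(producer, topic, csv_file):
    """Send every row of an open CSV file to a topic; return the row count.

    `producer` is any object with a send(topic, value) method, for example
    a kafka-python KafkaProducer.
    """
    count = 0
    for row in csv.DictReader(csv_file):
        producer.send(topic, serialize_row(row))
        count += 1
    return count


class RecordingProducer:
    """Hypothetical stand-in for a real producer; it only records sends."""

    def __init__(self):
        self.sent = []

    def send(self, topic, value):
        self.sent.append((topic, value))


# Made-up sample data standing in for data.csv
sample_csv = io.StringIO("id,name\n1,widget\n2,gadget\n")
fake = RecordingProducer()
sent_count = publish_rows(fake, "sample-topic", sample_csv)
print(sent_count, fake.sent[0])
```

With a running broker, the same function could be called with `KafkaProducer(bootstrap_servers=['localhost:9092'])` in place of `RecordingProducer()`, followed by `producer.flush()` so that buffered messages are sent before the script exits.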

Reading from a Kafka Consumer

Next, we use KafkaConsumer to check whether the messages were successfully published to the topic.

Python code

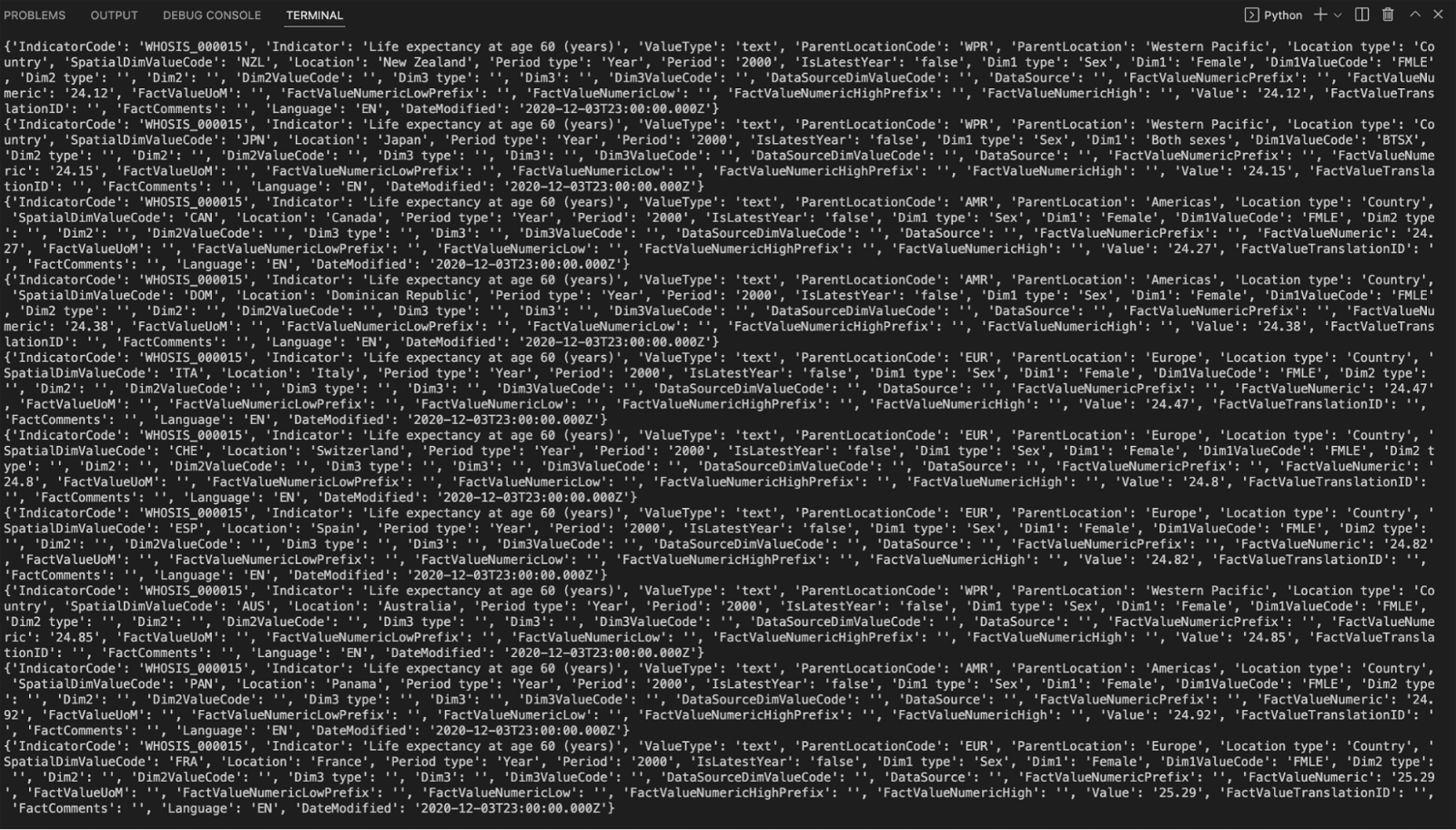

    import sys
    from json import loads

    from kafka import KafkaConsumer

    # Set up the Python consumer
    bootstrap_servers = ['localhost:9092']
    topicName = 'sample-topic'
    consumer = KafkaConsumer(
        topicName,
        bootstrap_servers=bootstrap_servers,
        auto_offset_reset='earliest',  # You can also set it to 'latest'
        value_deserializer=lambda x: loads(x.decode('utf-8')),
    )

    # Read messages from the consumer until interrupted
    try:
        for message in consumer:
            print(message.value)
    except KeyboardInterrupt:
        sys.exit()

Here is the output from our Python file, displaying the contents of our CSV file in JSON format.
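The consumer's deserializer assumes every message is valid UTF-8 JSON. One possible way to make it more forgiving is sketched below: `safe_json_deserializer` is a hypothetical helper that returns `None` for input it cannot decode instead of raising. Whether skipping bad messages is the right policy depends on the pipeline, so treat this as an assumption rather than a recommendation.

```python
import json


def safe_json_deserializer(raw_bytes):
    """Decode UTF-8 JSON bytes; return None instead of raising on bad input."""
    if raw_bytes is None:
        return None
    try:
        return json.loads(raw_bytes.decode("utf-8"))
    except (UnicodeDecodeError, json.JSONDecodeError):
        return None


good = safe_json_deserializer(b'{"id": "1", "name": "widget"}')
bad = safe_json_deserializer(b"not json")
print(good, bad)
```

It could be passed as `value_deserializer=safe_json_deserializer` when creating the `KafkaConsumer`, with the read loop skipping any message whose value is `None`.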

Summary

The services provided by Kafka enable organizations to act instantly on event streams, build data pipelines, and seamlessly distribute messages, all of which drive vital business decision-making. Kafka is scalable, fault-tolerant, and can stream millions of messages daily. It employs multiple connectors, allowing for various event sources to connect to it.

Kafka-Python is a Python client for Kafka that helps data scientists and data engineers process data streams and send them to Kafka for consumption or storage, improving data integration. The StreamSets data integration platform further improves this by providing an interface that allows organizations to stream data from multiple origins to various destinations.

Where StreamSets, Kafka, and Python Come Together

StreamSets allows organizations to make the most out of Kafka services. Using StreamSets, organizations can build effective data pipelines that process messages from producers and send them to multiple consumers without writing code. For instance, with the Kafka Origin, engineers can process and send large messages to multiple destinations like AWS S3, Elasticsearch, Google Cloud Storage, and TimescaleDB.

StreamSets also eases the burden of building stream processing pipelines from scratch by providing an interactive interface that allows engineers to connect to Kafka, stream to destinations and build effective and reusable data pipelines. This feature empowers your engineers and developers to do more with your data.