To ETL or ELT? For many a data engineer, that is the question.

On the one hand, the thinking goes, the newer ELT is flexible, easy to get started, and perfect for on-demand data. It’s the resilient alternative to the rigid, brittle ETL.

Yet all that glitters about ELT is not gold.

Left unchecked, it leaves out important details—making data sources hard to track or monitor, potentially running afoul of compliance requirements. Massive amounts of unstructured, untransformed data turned data lakes into un-consumable data swamps.

And while the rise of ELT has empowered non-technical personnel to use their own tools, it has also encouraged new data silos. As use of these tools proliferate throughout an organization, IT can neither see, track, nor follow data through an increasingly complex and disconnected infrastructure.

But what if you didn’t have to choose between the two? What if, instead of committing resources to the requisite tools, people, and processes for a given technique, you could have the proverbial data cake and eat it too?

Modern data platforms like StreamSets have turned this ‘what if’ into reality. But before we dive into how you can say yes to both ETL and ELT, a quick review of each technique is in order.

What Is ETL?

ETL stands for extract, transform, load. It’s a technique used to pull and clean up data from multiple sources so they’re ready to be used for analytics and brought into a centralized database.

Before the rise of the modern cloud, ETL was necessary because storage and computing power was limited and costly. ETL enabled organizations to use fewer of their scarce technological resources. But for all you could save in bandwidth and storage you had to spend gratuitously on ETL developers.

The essential problem was a complete lack of generalization. Using ETL meant data pipelines needed to be designed and built based on each data source and destination. That meant you had to have ETL developers with the right skills to build and maintain data pipelines, even as technologies changed and evolved.

What Is ELT?

ELT stands for extract, load, transform. It’s a data ingestion technique in which data is pulled from multiple sources into a data lake or cloud object storage. From there, the data can be transformed for various business purposes as needed.

ELT’s utility took off as the variability, velocity, and volume of data exploded. And it’s enabled by an increasingly affordable cloud infrastructure.

Moreover, unlike ETL, ELT requires fewer engineers to operate. With its separation of extraction and transformation, ELT enables analysts, rather than engineers, to handle transformations with SQL. This idea is key to ELT’s success because it allows more departments in large organizations to self-service their data access.

A big part of the reason ELT is so much less labor-intensive is that it allows for greater generalization.

Engineers can build on premade extract-and-load solutions for popular data sources. And they can leverage the specialized expertise of external providers for more complicated data sources.

In contrast to ETL’s lack of generalization, ELT makes it possible for data engineers to outsource and automate rather than hand code pipelines that will need to be rewritten anyway.

But, as mentioned in the introduction, this shift from the developer-centric ETL to the more self-service oriented ELT, brings its own new challenge: an unknowably complex, opaque data infrastructure.

Shifting the Paradigm from ETL to ELT

Much has been written about how ELT is disrupting ETL. If you’d like to dive a little deeper into the details, here’s a good Reddit thread on the topic. The basic idea is that ELT is better suited to the needs of modern enterprises.

Underscoring this point is that the primary reason ETL existed in the first place was that target systems didn’t have the computing or storage capacity to prepare, process and transform data. But with the rise of cloud data platforms, that’s no longer the case. And, except for certain niche applications, there’s no compelling reason to build the custom software needed to transform data as it’s extracted.

So for many—but not all—organizations, ELT is poised to render ETL obsolete.

To be sure, ETL will still have its uses. Among other things, ETL leveraging the processing power of Apache Spark can handle massive amounts of data and make it consumable to downstream applications.

Happiness Means Not Having to Choose

For engineers and analysts, happiness means not having to choose where or when you do data transformations.

While ETL and ELT tool vendors will espouse the virtues of one paradigm versus the other, the truth is you don’t have to choose. Instead, you can adopt an approach that goes like this:

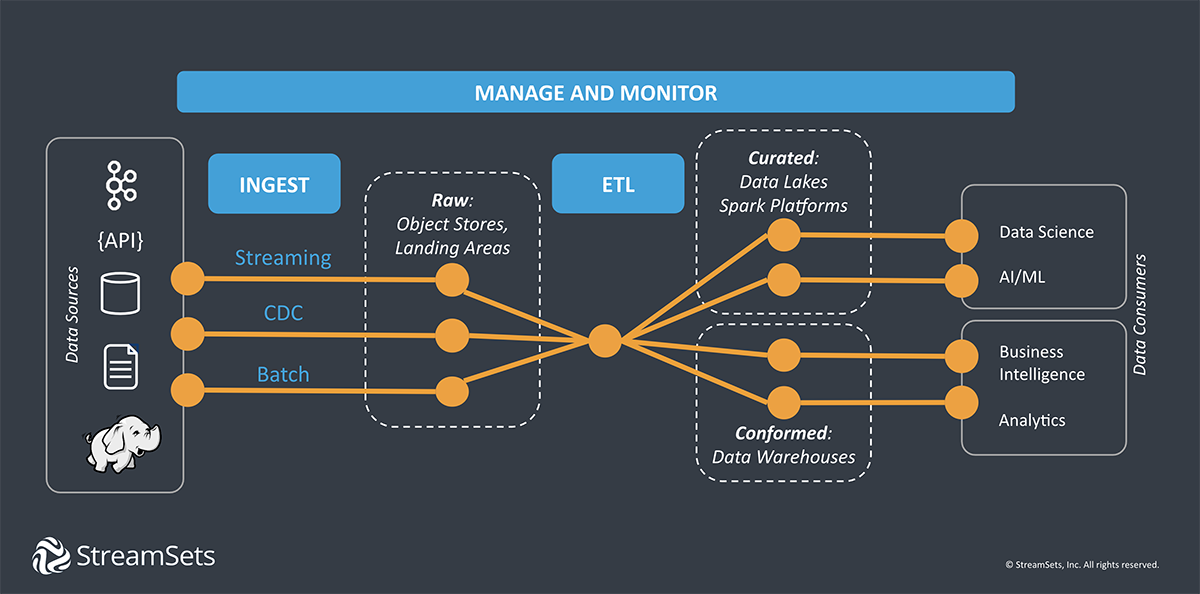

- Extract data from multiple sources

- Perform preprocessing transformations, i.e. to mask private data

- Load data into a repository

- Transform data as needed based on business requirements

StreamSets was built from the ground up with this dual paradigm at its core. With dozens of pre-load transformation processors, you can drag and drop in a graphical user interface, many of the most time-consuming, complex engineering processes are abstracted away.

At the same time, StreamSets enables developers to write their own custom processors in their preferred language. So while it provides the ease of use and flexibility of ELT it doesn’t stop you from extending your pipelines for more specialized use cases.

And by providing a single interface through which you can see and manage all your data pipelines for ETL or ELT, StreamSets fully unlocks the power of modern data architectures.

To put it simply, when you ask, “ETL or ELT?” StreamSets says yes.