Why is a Data Migration Strategy Important?

A data migration strategy is important because it minimizes cost, data loss, and downtime while maximizing utility for the users of data systems that depend on the migration. Poorly designed data migration strategies often lead to business disruption, suboptimal performance of the newly migrated system, or both.

Just like software engineers building a consumer application, data engineers must create data services that are as user-friendly, reliable, and accurate as possible. And like a team of software developers, they rely on detailed infrastructure and migration planning to ensure accurate, reliable, and accessible data.

What is Data Migration?

Data migration is the process of moving data from one or multiple systems to a different system. It may involve migrating data from an on-premises database to a cloud data lake or from one cloud system to another. A common reason for data migration is the need to migrate data from a legacy system into a new system, very likely in the cloud. Often, data migration consolidates data from many cloud and on-premises source systems to one centralized repository to eliminate data silos and establish organization-wide access to information. Most often today, that central repository is a cloud data lake or cloud data warehouse.

Organizations today can’t ignore the benefits of the cloud as they look to scale up and down quickly according to business demand. Data migration is a critical component of an enterprise cloud migration strategy and plays a vital role in on-premises-to-cloud migration. Organizations transitioning to a cloud computing environment, such as hybrid cloud, public cloud, private cloud, or multi-cloud, need a data migration strategy to ensure a secure, streamlined, and cost-efficient process for transferring data to their new environment.

Key Considerations of a Data Migration Strategy

Enterprises need a solid data migration strategy regardless of the specific driver behind the migration. Successful data migration mitigates the potential risk of inaccurate and redundant data. These risks can occur even if the source data is viable, and any problems that already exist within the source data are magnified when moved into the new system.

Some important cloud migration considerations include:

- Quantity of data: There are many tools to migrate data to the cloud. If your data needs are relatively modest, say a few hundred rows and columns in a single data set without a whole bunch of sub tables, your cloud provider (AWS, GCP, etc.) will have a tool for you to port your data over blindly (we call this “dumb” replication). Your data lands exactly as it was in your source system. But, when you’re migrating massive amounts of data – for example, an organization-wide move to the cloud, or even one significant system – you need a more sophisticated tool. The more data that you’re trying to move, the more complicated it is going to be. You need visibility and control to ensure data quality and a tool that can transform data as it moves to the cloud. This leads us to the next point…

- Making your data “fit for purpose”: If you’re moving data from an on-premises system (A) to the cloud (B), B may operate very differently from A, so you often need to transform, conform, or reshape the data while migrating it so that it works the way system B needs it to. A lot of teams who are new to the cloud and under pressure to migrate quickly just copy what they did on-premises into the cloud. But that doesn’t allow you to take advantage of the benefits of cloud architectures. To do that, your data migration must include making sure the data is optimized for its new destination system.

- Migration time frame: How long data migration takes depends on the amount of data and the type of tool you use. It’s important to realize that while a migration tool whose data pipeline software pushes data without any transformation may finish sooner, that speed is rarely worth the tradeoff in lost performance and other benefits of cloud architecture.

- Hybrid cloud: Many organizations are opting for the benefits of a hybrid cloud, which combines a public cloud with a private one. In this cloud model, data and applications can move between the two cloud environments. This flexibility allows enterprises to use a single, unified IT infrastructure that facilitates orchestration and management across the cloud environments.

- Multi-cloud: A multi-cloud computing environment allows organizations to benefit from private and public clouds via platform-as-a-service (PaaS) or infrastructure-as-a-service (IaaS) from multiple cloud service providers. This model provides the flexibility of cloud services from different providers based on the right mixture of price, performance, security, and compliance and minimizes the potential of unplanned downtime.

- Big Bang and Trickle Strategies: Most data migration strategies are either big bang or trickle migrations. During a big bang data migration, organizations accomplish the entire transfer in a short timeframe. The live systems go through a downtime period while the data goes through the extract, transform, load (ETL) process and moves to the new destination. This approach carries the risk of very costly failures, and the requisite downtime can negatively impact customers. In most cases, the smarter strategy is a trickle data migration, because data today needs to be continuously available. During a trickle data migration, an organization completes the migration in manageable stages over a more extended time. The original and new systems run in parallel throughout the implementation process. This avoids downtime and the corresponding disruptions, and keeps real-time operations running throughout.
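To make the trickle approach more concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. It copies rows in small batches and tracks a checkpoint (the highest `id` copied so far), so each stage is small and the source stays readable in between. The `customers` table, its columns, and the `migrate_in_batches` function are hypothetical names chosen for illustration; a real migration would also persist the checkpoint somewhere durable so it can resume after a failure.

```python
import sqlite3

def migrate_in_batches(source, target, batch_size=2):
    """Copy rows from source.customers to target.customers in small batches.

    Returns the number of batches copied. Table and column names are
    hypothetical; the checkpoint here lives only in memory.
    """
    last_id = 0  # checkpoint: highest id already copied
    batches = 0
    while True:
        rows = source.execute(
            "SELECT id, name FROM customers WHERE id > ? ORDER BY id LIMIT ?",
            (last_id, batch_size),
        ).fetchall()
        if not rows:
            return batches
        with target:  # commit each batch as its own transaction
            target.executemany(
                "INSERT INTO customers (id, name) VALUES (?, ?)", rows
            )
        last_id = rows[-1][0]
        batches += 1

# Two in-memory databases stand in for the legacy and new systems.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
source.executemany(
    "INSERT INTO customers (id, name) VALUES (?, ?)",
    [(1, "Ada"), (2, "Grace"), (3, "Edsger"), (4, "Barbara"), (5, "Alan")],
)
source.commit()

batches = migrate_in_batches(source, target, batch_size=2)
migrated = target.execute("SELECT COUNT(*) FROM customers").fetchone()[0]
```

A big bang migration would correspond to one very large batch taken during a downtime window; the batching and checkpoint are what let the two systems run side by side.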

The Risks of Migrating Data

Migrating data can be a complex process with several potential risks. These risks include:

- Data loss: Migrating large data sets comes with the risk that some data may be corrupted or lost in transit. This can happen due to software errors, hardware failures, or problems during the data transfer process. Proper backups before migration are crucial.

- Downtime and business disruption: There may be periods during the migration where applications and services cannot access the data, leading to potential downtime or disruptions. Planning for appropriate maintenance windows is essential.

- Data integrity issues: Data may become corrupted, transformed incorrectly, or loaded improperly at the destination. Testing and validating data after migration is critical.

- Security/compliance risks: Data is especially vulnerable during migrations, so you must take extra security precautions. Also, data subject to regulations may require special handling.

- Loss of metadata/relationships: When moving data between systems, metadata, links, ownerships, permissions, etc., may fail to carry over correctly.

- Conflicting data: If not managed properly, data from old and new systems can temporarily co-exist, leading to conflicts that need reconciliation.

- Scaling issues: If data volumes or performance needs spike on the new platform, the system may not scale as expected initially. Capacity planning and load testing help.

- Cost overruns: Migrations often take longer and require more resources than anticipated, driving costs higher than budgeted if not managed carefully.

Proper planning, testing, backup/restoration ability, strong change management, and roll-back contingency plans can help mitigate these risks.
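As one illustration of the testing and validation mentioned above, the sketch below compares a source and target table by row count and by a content hash. It is a simplified example using Python’s standard `sqlite3` and `hashlib` modules, not a complete validation framework; the `orders` table and the `table_fingerprint` and `make_db` helpers are assumptions made for the example, and hashing every row in memory would not suit very large tables.

```python
import hashlib
import sqlite3

def table_fingerprint(conn, table):
    """Return (row_count, sha256 hex digest) for a table.

    Rows are ordered by the first column so both sides hash in the same
    order. `table` is interpolated into SQL, so pass trusted names only.
    """
    rows = conn.execute(f"SELECT * FROM {table} ORDER BY 1").fetchall()
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return len(rows), digest.hexdigest()

def make_db(rows):
    """Build an in-memory database with a hypothetical `orders` table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
    conn.commit()
    return conn

source = make_db([(1, 9.99), (2, 25.0), (3, 4.5)])
good_target = make_db([(1, 9.99), (2, 25.0), (3, 4.5)])
bad_target = make_db([(1, 9.99), (2, 52.0), (3, 4.5)])  # same count, altered value

matches_good = table_fingerprint(source, "orders") == table_fingerprint(good_target, "orders")
matches_bad = table_fingerprint(source, "orders") == table_fingerprint(bad_target, "orders")
```

The second comparison shows why a row count alone is not enough: the altered target has the right number of rows, but its hash differs.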

Four Phases of the Data Migration Process

The majority of data migration projects include planning, migration, and post-migration. A critical fourth step that is too often overlooked is ongoing data synchronization, which is included here as well. Depending on the complexity of the specific project, each of these phases might repeat multiple times before the new system can be established as completely validated and deployed.

- Data migration plan – During the data migration plan phase, the enterprise chooses the data and applications that have to be migrated depending on the particular business, project, and technical needs and dependencies. The organization should analyze the bandwidth and hardware requirements for its data migration project, and formulate scenarios for practical migration, including corresponding tests, mappings, automation scripts, and techniques. It also needs to select and build the migration architecture and roll out change management procedures. Additionally, the enterprise determines what data preparation and transformation frameworks it needs to enhance data quality, prevent any likelihood of redundant data, and ensure data is conformed and optimized for the new system.

- Data migration process – The enterprise needs to customize the planned migration procedure and validate the specific hardware and software requirements during this phase. This might extend to a certain degree of pre-validation testing so that the requirements and settings function as planned. There are two approaches: you can take the time to understand what your origin schema looks like, then recreate it exactly in a new system, or you can use a modern data integration tool that allows you to skip that time-consuming process by automating multi-table updates. Assuming everything is correct, the migration starts, including data extraction from the old system and loading data into the new system.

- Post data migration – Following the migration, data verification is performed to ensure that data is complete, translated correctly, and effectively supports various processes within the new system. A parallel run of both the original and the new system might be necessary to pinpoint any disparities and catch data loss. Typically, the organization then produces migration reports and decommissions any legacy systems.

- Ongoing data synchronization – Once data is migrated, organizations must move on to continuously synchronize data across systems, databases, apps, and devices. This ensures accurate and compliant data for continuous data delivery. Data synchronization allows real-time delivery of data that’s always consistent.
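The ongoing synchronization phase can be sketched as a polling loop that copies only the rows changed since the last run. The example below is one simple way to do this, assuming the source table carries an `updated_at` column that increases on every change; the `users` table and the `sync_changes` function are hypothetical. Note that this is timestamp-based polling rather than log-based change data capture, and it will not notice rows deleted from the source.

```python
import sqlite3

def sync_changes(source, target, last_synced):
    """Copy rows changed after `last_synced`; return the new watermark."""
    rows = source.execute(
        "SELECT id, email, updated_at FROM users "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_synced,),
    ).fetchall()
    with target:  # apply the whole batch in one transaction
        target.executemany(
            "INSERT OR REPLACE INTO users (id, email, updated_at) VALUES (?, ?, ?)",
            rows,
        )
    return rows[-1][2] if rows else last_synced

source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
for db in (source, target):
    db.execute(
        "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT, updated_at INTEGER)"
    )

# Initial load: two rows exist in the source.
source.executemany(
    "INSERT INTO users VALUES (?, ?, ?)",
    [(1, "a@example.com", 10), (2, "b@example.com", 20)],
)
watermark = sync_changes(source, target, last_synced=0)

# Later, one row is updated and one is added in the source.
source.execute(
    "UPDATE users SET email = ?, updated_at = ? WHERE id = ?",
    ("a2@example.com", 30, 1),
)
source.execute("INSERT INTO users VALUES (?, ?, ?)", (3, "c@example.com", 40))
watermark = sync_changes(source, target, last_synced=watermark)
synced = target.execute("SELECT id, email FROM users ORDER BY id").fetchall()
```

Because each run starts from the stored watermark, the same function covers both the initial load (watermark of 0) and every later incremental sync.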

Types of Data Migration

There are a few key categories of data migration, and these may overlap based on the individual data migration case. For example, a data migration project might simultaneously encompass database migration and application migration.

- Analytics platform migration – Many companies today are moving from a legacy analytics platform – an enterprise data warehouse such as Teradata or big data platforms like Hadoop, for example – onto a modern cloud data warehouse or data lake like S3, Redshift, Snowflake, etc.

- Application migration – Application migration involves transferring data from the current computing environment to a different one. This kind of migration is often associated with changing application vendors or software, such as changing the ERP platform to a new one. The data synchronization between the two applications must occur during the application migration process. Also, because every application utilizes a specialized data model, this type of migration generally involves significant transformation and data formatting.

- Database migration – Database migrations involve transferring data from one database management system (DBMS) to another, which can be complicated when the original system and the new system use different data structures. Database migration can also involve upgrading the existing database software to the most current version, which might demand a physical data migration because the data format might change significantly.

Data Migration Tools

Enterprises have numerous options for data migration tools that can help optimize the process to gain the benefits of cloud migration. It’s expensive and time-consuming to build and manually code data migration tools, so many organizations rely on point solutions from their cloud provider, which can get the data migrated, and often quickly at that. But once they want to add or change clouds, or adopt different patterns (say, adding streaming to their batch processing), it’s time to go find another point solution.

In the end, specific tools will depend on the enterprise’s data migration strategy and required business objectives. Before selecting a data migration tool, enterprises should consider:

- Environment: Whether the organization plans a data migration from a public to a private cloud, from on-premises to a public or private cloud, or between on-premises systems in the existing environment.

- Security and compliance requirements: If migrating sensitive or proprietary data with specific compliance requirements, enterprises may want to use a specialized migration tool that accounts for data security and compliance. Whatever the migration approach, the organization should encrypt data before it is moved. If the migration occurs offline, the enterprise should review the shipping services’ security protocols.

- One-time vs. ongoing: It’s rare that an organization migrates data to a new system never to use it again. More often, two systems run in parallel and need to synchronize on an ongoing basis. If the initial migration tool is only designed for a big bulk upload, that means redoing much of the work already completed for the ongoing synchronization. One data integration platform can instead handle multiple use cases, including the migration and ongoing synchronization.

- Data and its use: Enterprises need to identify who is using the data both now and in the future, and how it will be leveraged. Data used for analytics has different formatting and storage needs than data held for compliance purposes, and those needs must be addressed. For analytics, data integration and transformation will be important on an ongoing basis. By using a data integration platform that includes transformation during the migration process (ensuring data is fit for purpose), you get a lot of reuse and leverage.

- Business needs: It’s important to determine the likely impact of the data migration as soon as possible. For example, how much data loss is tolerable, the impact of possible delays or downtime on the overall business, and the kind of migration time frame required.

Organizations have four main options for data migration:

- Hand-coding is the most time-consuming and least-productive process (hence, most expensive) for data migration, yet is still used. It doesn’t allow teams to keep up with today’s real-time data requirements.

- Built-in Database Replication Tools are often included with a database license and are easy to use. However, they’re typically limited to one-way data replication and don’t include transformation or visibility.

- Data Replication Software lets organizations copy data from one database (or other data store) to another, typically exactly as the data is. This is useful for backup and failover but is highly limiting when you are migrating data into a new system that has different architectural considerations and usage patterns than the original system.

- Data Integration Platforms are responsible for the continuous ingestion and integration of data for use in analytics and operational applications. They allow the data to be transformed and optimized to be used in the destination system.

Regardless of the data migration tool, enterprises should ensure that the solution has specific important capabilities:

- Connectivity: Can the tool support the software and systems that the enterprise is presently using, as well as changing business needs in the future?

- Transformation: Is the solution capable of optimizing data for its destination system (i.e., transforming data so it’s cloud-ready), and ensuring data quality and ongoing synchronization?

- Multi-modal (batch, CDC & real-time): Can it do batch, change data capture (CDC), and stream processing to handle different use cases as they occur (e.g., initial load and ongoing synchronization)?

- Scalability: What are the software’s data limits? Will your changing data needs surpass these limits over time?

- Re-usability: Are best practices and design details easily shareable and reusable?

- Portability: Can you easily change sources or destinations with just a couple of clicks (no code and no breakages)?

- Speed: At what speed does the platform support data processing? Keep in mind that fastest doesn’t always equal best. Your solution needs to be fast enough, but other considerations, such as the ability to transform or observe data, may be more important.

- Security: What are the security protocols for the software platform? Is data effectively protected?

- Resilient to Data Drift: Can the solution detect and handle drift in schema, semantics, and infrastructure?

- Support for Hybrid and Multi-cloud: Does the solution support on-premises, hybrid, cloud, and multi-cloud environments?
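To give a sense of what detecting schema drift can mean in practice, here is a small sketch that compares the column layout a pipeline expects with the layout it actually sees. This is only an illustration of the idea, not how any particular product implements it, and it covers structural schema changes only (not semantic or infrastructure drift). The `detect_schema_drift` function and the column names are hypothetical.

```python
def detect_schema_drift(expected, actual):
    """Compare two {column_name: type} mappings and report the differences."""
    shared = set(expected) & set(actual)
    return {
        "added": sorted(set(actual) - set(expected)),
        "removed": sorted(set(expected) - set(actual)),
        "retyped": sorted(col for col in shared if expected[col] != actual[col]),
    }

# Schema the pipeline was designed for vs. what the source now sends.
expected = {"id": "INTEGER", "email": "TEXT", "signup_date": "TEXT"}
actual = {"id": "INTEGER", "email": "TEXT", "signup_date": "INTEGER", "plan": "TEXT"}

drift = detect_schema_drift(expected, actual)
has_drift = any(drift.values())
```

A pipeline could run a check like this before each load and then decide whether to adapt automatically (for example, by adding the new column downstream) or stop and alert someone.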

DataOps for Data Migration

DataOps is another factor in successful cloud data migration, whether the enterprise considers private, public, hybrid, or multi-cloud. DataOps ensures continuous integration and delivery of data and the operational visibility required for dynamic, complex cloud architecture. DataOps applies DevOps practices towards data management and data integration to speed the cycle time of data analytics and focuses on automation, monitoring, and collaboration.

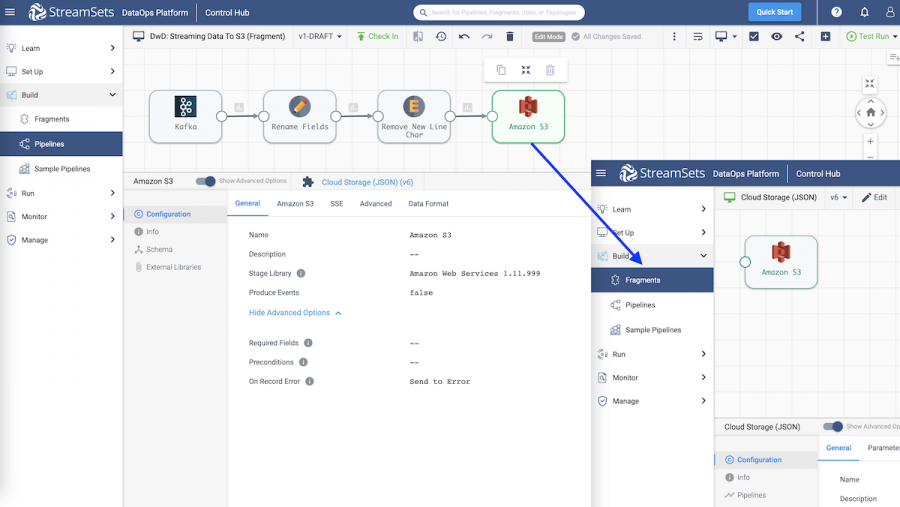

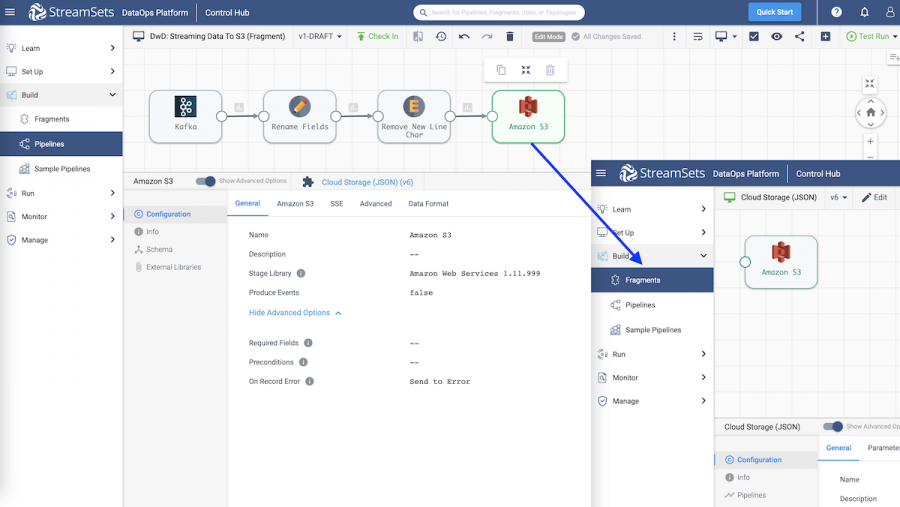

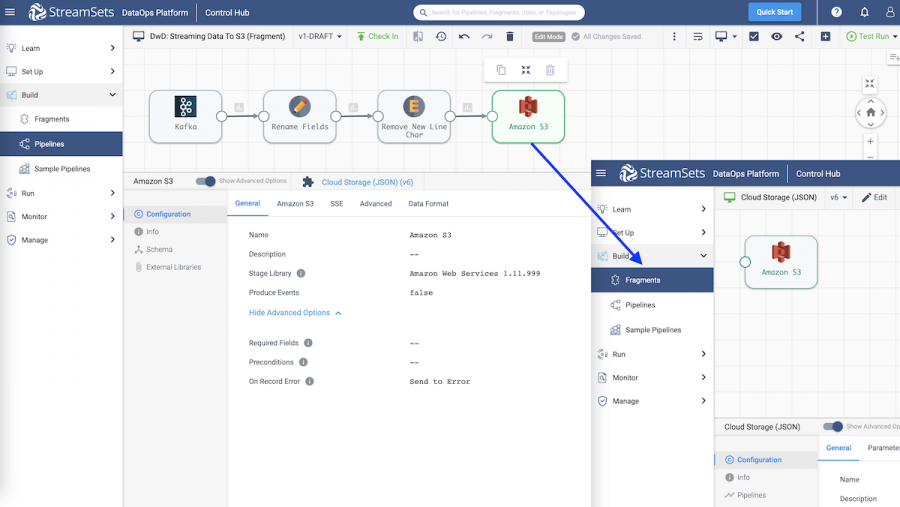

StreamSets DataOps Platform

The StreamSets DataOps Platform is an end-to-end data integration platform that delivers continuous data to the business, a modern requirement for all companies. Architected to solve the data engineer’s data migration challenges, it:

- Lets you quickly and reliably build intent-driven pipelines with a single tool for all design patterns, on-premises and across clouds.

- Automates the process of schema migration to new systems with multi-table updates and ongoing data drift detection.

- Minimizes the ramp-up time needed on new technologies and makes it easy to extend data engineering for more complex migrations.

Smart data pipelines abstract away the “how” of implementation so you can focus on the what, who, and where of the data. Start building smart data pipelines for data ingestion across cloud and hybrid architectures today using StreamSets.

Frequently Asked Questions

What is lift and shift data migration?

Lift and shift data migration (also known as rehosting) is a straightforward migration method in which on-premises workloads are moved to the cloud as-is, with no re-architecting. This migration approach is best for low-impact workloads, but it means missing out on many of the benefits of cloud computing since the architecture is not optimized for the cloud.

What should be included in a data migration strategy?

The following components, or phases, should be included in a data migration strategy:

- Planning: evaluating systems, designing the solution, budgeting, building and testing, and backing up data.

- Migration: extracting and loading the data in a way that’s compatible with business requirements.

- Post-migration: verifying accuracy and completeness.

- Data synchronization: maintaining consistency over time.

What are different migration strategies?

There are three main data migration strategies: all at once (bulk data migration), over time (trickle data migration), or a combination of the two. The choice among them depends on your business requirements.

Generally, bulk data migrations work best if you’re moving from a well-designed, centralized legacy system which you plan to retire. And trickle data migrations work best if the legacy system is decentralized and you plan on making lots of changes to the data you’re going to migrate.

Is data migration an ETL process?

Data migration can be an ETL process, but not always. It depends on whether or not the data migration includes transformation of data, which is not always the case.

For instance, rehosting is a common form of data migration that involves extracting data from a source system and loading it into a target system, with no transformation in between. However, other approaches to data migration may require ETL so that the data’s format in the source system is transformed to be compatible with the target system’s format.
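The difference can be shown with a toy pipeline in Python in which the transform step is optional. The record layout (`CUST_NO`, `NAME`) and the target field names are invented for this example; the point is only that a rehosting-style migration skips `transform`, while an ETL-style migration includes it.

```python
def extract(source_rows):
    """Read all records from the (in-memory) source."""
    return list(source_rows)

def transform(rows):
    """Reshape legacy records into the hypothetical target format."""
    return [
        {"customer_id": int(r["CUST_NO"]), "full_name": r["NAME"].strip().title()}
        for r in rows
    ]

def load(rows, target):
    """Append records to the target and return how many were loaded."""
    target.extend(rows)
    return len(rows)

legacy_rows = [{"CUST_NO": "007", "NAME": "  ada LOVELACE "}]

# Rehosting-style migration: extract and load, data lands as-is.
rehosted = []
load(extract(legacy_rows), rehosted)

# ETL-style migration: data is reshaped for the target before loading.
reshaped = []
load(transform(extract(legacy_rows)), reshaped)
```

In the first case the target receives the legacy field names and formatting unchanged; in the second it receives typed, cleaned records in the shape the new system expects.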

What is the difference between data migration and data transfer?

Data migration is a complex process, often involving transformation and integration into a new system or environment. The goal is often strategic, such as improving data management, enhancing system performance, or enabling new functionalities.

In contrast, data transfer is more about moving data from one place to another in its existing form for more immediate or operational needs. The purpose of data transfer is usually immediate and practical, like data backup, synchronization, or sharing information between teams or locations.

Which stakeholders are involved in data migration?

Data migration projects typically involve various stakeholders, each playing a critical role in ensuring the migration process is successful, efficient, and minimizes risks. The involvement of these stakeholders helps in addressing technical, business, and operational aspects of the migration. Key stakeholders involved in data migration projects include:

- Business owners own the data, oversee business requirements, identify data to migrate to the new system, and validate results post-migration. Ensuring continuity of business operations is their primary concern.

- Project sponsors and managers are responsible for the planning, budgeting, coordination, oversight, and executive visibility needed to make the migration succeed across teams.

- IT teams migrate data from old systems into new platforms. DBAs, developers, architects, and DevOps/DataOps engineers manage technical risks and system capabilities.

- Data Analysts analyze data flows, attributes, relationships, volumes, velocity, and quality across old and new systems. They define data transformation rules and conversion requirements.

- Subject matter experts understand the data elements, providing business context and meaning to attributes and validating appropriate data use in the new system.

- Security, Compliance, and Privacy teams assess security, legal, regulatory, and policy requirements for data movement to ensure confidentiality and adherence to data governance mandates.

- Data consumers need to be trained on any data changes and new access methods after the migration is complete, so that continuity of data consumption and reporting is maintained.

- Testing and Quality Assurance teams test the completeness, accuracy, and validity of migrated data for intended use cases in the new system or platform.