Create a Custom Expression Language Function for StreamSets Data Collector

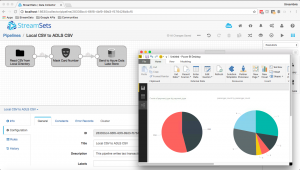

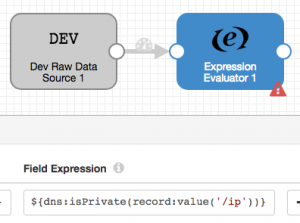

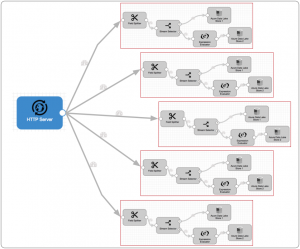

One of the most powerful features in StreamSets Data Collector Engine (SDC) is its support for Expression Language, or ‘EL’ for short. EL was introduced in JavaServer Pages (JSP) 2.0 as a mechanism for accessing Java code from JSP. The Expression Evaluator and Stream Selector stages rely heavily on EL, but you can use it when configuring almost every SDC stage. In this blog entry, I’ll explain a little about EL and show you how to write your own EL functions.


One of the great things about


The
