Efficient Splunk Ingest for Cybersecurity

Many StreamSets customers use Splunk to mine insights from machine-generated data such as server logs, but the default tools give them no way to filter the data they forward. Splunk is a great tool for searching and analyzing machine-generated data, particularly in cybersecurity use cases, but it’s easy to fill it with redundant or irrelevant data, driving up costs without adding value. In addition, Splunk may not natively offer the types of analytics you prefer, so you might also need to send that data elsewhere.

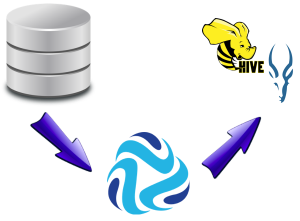

In this blog entry I’ll explain how, with StreamSets Control Hub, we can build a topology of pipelines for efficient Splunk data ingestion to support cybersecurity and other domains, by sending only necessary and unique data to Splunk and routing other data to less expensive and/or more analytics-rich platforms.
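To make the idea concrete, here is a minimal Python sketch of the "necessary and unique" routing decision. It is not StreamSets pipeline code: the `severity` field, the relevance rule, and the names `is_relevant`, `fingerprint`, and `route` are all hypothetical, and I'm assuming exact duplicates are simply dropped rather than archived.

```python
import hashlib
import json


def is_relevant(record):
    # Hypothetical rule: only warning-or-worse events are worth indexing in Splunk.
    return record.get("severity") in {"WARN", "ERROR", "CRITICAL"}


def fingerprint(record):
    # Stable hash of the whole record, used to detect exact duplicates.
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def route(records):
    """Split records into (to_splunk, to_archive), dropping exact duplicates."""
    seen = set()
    to_splunk, to_archive = [], []
    for record in records:
        key = fingerprint(record)
        if key in seen:
            continue  # assumption: duplicates are discarded entirely
        seen.add(key)
        if is_relevant(record):
            to_splunk.append(record)
        else:
            to_archive.append(record)  # stand-in for a cheaper destination
    return to_splunk, to_archive
```

Calling `route` on a batch of parsed log records returns two lists: one destined for Splunk and one for the less expensive store. In a real deployment the in-memory `seen` set would need bounding or expiry, since it grows with every unique record.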

Updated November 29, 2021

As well as parsing incoming data into records, many